Recently I assisted a small business (of around 10 users) with a transition from Small Business Server 2003 to Server Essentials R2.

Small Business Server 2003 had served it well for nearly 10 years. The package includes Windows Server 2003 (based on XP), Exchange, and the rather good firewall and proxy server ISA Server 2004 (the first release had ISA 2000, but you could upgrade).

SBS 2003 actually still does more than enough for this particular business, but it is heading for end of support, and there are some annoyances like Outlook 2013 not working with Exchange 2003. This last problem had already been solved, in this case, by a migration to Office 365 for email. No problem then: simply migrate SBS 2003 to the latest Server 2012 Essentials R2 and everything can continue running sweetly, I thought.

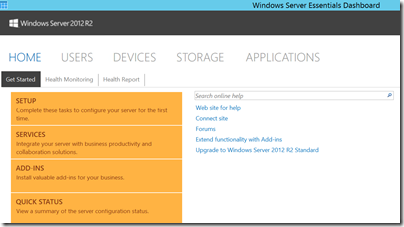

Server Essentials is an edition designed for up to 25 users / 50 devices and is rather a bargain, since it is cheap and no CALs are required. In the R2 version matters are confused by the existence of a Server Essentials role which lets you install the simplified Essentials dashboard in any edition of Windows Server 2012. The advantage is that you can add as many users as you like; the snag is that you then need CALs in the normal way, so it is substantially more expensive.

Despite the move to Office 365, an on-premise server is still useful in many cases, for example for assigning permissions to network shares. This is also the primary reason for migrating Active Directory, rather than simply dumping the old server and recreating all the users.

The task then was to install Server Essentials 2012 R2, migrate Active Directory to the new server, and remove the old server. An all-Microsoft scenario using products designed for this kind of set-up should be easy, right?

Well, the documentation starts here. The section in TechNet covers both Server 2012 Essentials and the R2 edition, though if you drill down, some of the individual articles apply to one or the other. If you click the post promisingly entitled Migrate from Windows SBS 2003, you notice that it does not list Essentials R2 in the “applies to” list, only the first version, and there is no equivalent for R2.

Hmm, but is it similar? It turns out, not very. The original Server 2012 Essentials has a migration mode and a Migration Preparation Tool which you run on the old server (judging by the description, it seems to run adprep, which updates Active Directory in preparation for migration). Server 2012 Essentials R2 has neither a migration tool nor a migration mode.

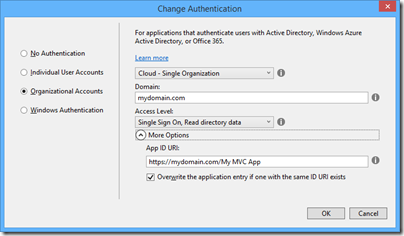

So which document does apply? The closest I could find was a general section on Migrate from Previous Versions to Windows Server 2012 R2 Essentials. This says to install Server 2012 Essentials R2 as a replica domain controller. How do you do that?

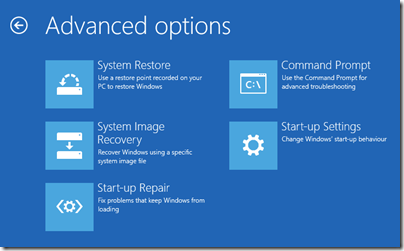

To install Windows Essentials as a replica Windows Server 2012 R2 domain controller in an existing domain as global catalog, follow instructions in Install a Replica Windows Server 2012 Domain Controller in an Existing Domain (Level 200).

Note the “Level 200” sneaked in there! The article in question is a general technical article for Server 2012 (though in this case equally applicable to R2) aimed at large organisations and full of information that is irrelevant to a tiny 10-user setup, as well as being technically more demanding than you would expect for a small business setup.

Fortunately I know my way around Active Directory to some extent, so I proceeded. Note you have to install the Active Directory role before you can run the relevant PowerShell cmdlets. Of course it did not work though. I got an error message “Unable to perform Exchange Schema Conflict Check.”

This message appears to relate to Exchange, but I think this is incidental. It just happens to be the first check that does not work. I think it was a WMI (Windows Management Instrumentation) issue, though I did not realise this at first.

I should mention that although the earlier paper on migrating to Server Essentials 2012 is obsolete, it is the only official documentation that describes some of the things you need to do on the source server before you migrate. These include changing the configuration of the internet connection to bypass ISA Server (single network card configuration), which you do by running the Internet Connection Wizard. You should also check that Active Directory is in good health with dcdiag.exe.
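For the dcdiag health check, reading through pages of output by eye is tedious. Here is a minimal Python sketch that pulls out only the failed tests from saved dcdiag output. It assumes the usual English-language result lines of the form `......................... SERVER failed test Name`; the server name `SBS2003` and the sample text are made up for illustration, and the function name is my own.

```python
import re

# Assumed shape of a dcdiag result line (English-language output), e.g.
#   "......................... SBS2003 failed test Advertising"
RESULT_LINE = re.compile(r"\.{5,}\s+(\S+)\s+(passed|failed)\s+test\s+(\S+)")


def failed_dcdiag_tests(output: str) -> list[tuple[str, str]]:
    """Return (target, test_name) pairs for every test reported as failed."""
    failures = []
    for line in output.splitlines():
        match = RESULT_LINE.search(line)
        if match and match.group(2) == "failed":
            failures.append((match.group(1), match.group(3)))
    return failures


# Hypothetical sample; real output is much longer.
SAMPLE = """\
      Starting test: Connectivity
         ......................... SBS2003 passed test Connectivity
      Starting test: Advertising
         ......................... SBS2003 failed test Advertising
"""

print(failed_dcdiag_tests(SAMPLE))
```

In practice you would redirect the dcdiag output to a text file on the source server and pass the file contents to `failed_dcdiag_tests`. An empty list is what you want to see before attempting the migration.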

I now did some further work. I removed ISA Server completely, and removed Exchange completely (note you need your SBS 2003 install CD for this). Removing ISA broke the Windows Server 2003 built-in firewall but I decided not to worry about it. Following a tip I found, I also used ntdsutil to change the DSRM (Directory Services Restore Mode) password. I also raised the SBS AD forest functional level to Windows Server 2003 (it was on Windows 2000), which is necessary for migration to work.

I am not sure which step did the trick, but eventually I persuaded the PowerShell for creating the Replica Domain Controller to work. Then I was able to transfer the FSMO roles. I was relieved; I gather from reading around that some have abandoned the attempt to go from AD in Server 2003 to AD in Server 2012, and used an intermediate Server 2008 step as a workaround – more hassle.
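After transferring the FSMO roles it is worth confirming that all five really have moved before you think about removing the old server. The sketch below parses the text printed by `netdom query fsmo` and lists any role not yet held by the new server. The output layout (role name, then two or more spaces, then the holder) is my assumption based on typical output, and the host names are hypothetical.

```python
import re

# Role labels as they typically appear in "netdom query fsmo" output (assumed).
FSMO_ROLES = (
    "Schema master",
    "Domain naming master",
    "PDC",
    "RID pool manager",
    "Infrastructure master",
)


def parse_fsmo_holders(output: str) -> dict[str, str]:
    """Map each FSMO role label to the (lower-cased) name of its holder."""
    holders = {}
    for line in output.splitlines():
        # Role and holder are separated by a run of at least two spaces.
        parts = re.split(r"\s{2,}", line.strip())
        if len(parts) == 2 and parts[0] in FSMO_ROLES:
            holders[parts[0]] = parts[1].lower()
    return holders


def roles_not_on(output: str, new_server: str) -> list[str]:
    """Return the roles that are missing or held by some other server."""
    holders = parse_fsmo_holders(output)
    return [r for r in FSMO_ROLES if holders.get(r) != new_server.lower()]


# Hypothetical sample: one role has not moved yet.
SAMPLE = """\
Schema master               essentials.example.local
Domain naming master        essentials.example.local
PDC                         essentials.example.local
RID pool manager            essentials.example.local
Infrastructure master       sbs2003.example.local
The command completed successfully.
"""

print(roles_not_on(SAMPLE, "essentials.example.local"))
```

An empty list means every role is on the new server. Anything else names the roles still to transfer.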

After that things went relatively smoothly, but not without annoyances. There are a couple to mention. One is that after migrating the server, you are meant to connect the client computers by visiting a special URL on the server:

Browse to http://destination-servername/connect and install the Windows Server Connector software as if this was a new computer. The installation process is the same for domain-joined or non-domain-joined client computers.

If you do that from a client computer that was previously joined to the SBS domain (having removed unwanted stuff like the SBS 2003 client and ISA client) then you are prompted to download and run a utility to join the new network. You do that, and it says you cannot proceed because a computer of the same name already exists. But this is that same computer! No matter, the wizard will not run, though the computer is in fact already joined to the domain.

If you want to run the connect wizard and set up the Essentials features like client computer backup and anywhere access, then as far as I can tell this is the official way:

- Make sure you have an admin user and password for the PC itself (not a domain user).

- Remove the computer from the domain and join it to a workgroup. Make sure the computer account is fully removed from the domain.

- Then go to the connect URL and join it back.

If you are lucky, the domain user profile will magically reappear with all the old desktop icons, My Documents and so on. If you are unlucky you may need manual steps to recover it, or to use profile migration tools.

This is just lazy on Microsoft’s part. It has not bothered to create a tool that will do what is necessary to migrate an existing client computer into the Server Essentials experience (unless such a tool exists and I did not find it; I have seen reports of regedit hacks).

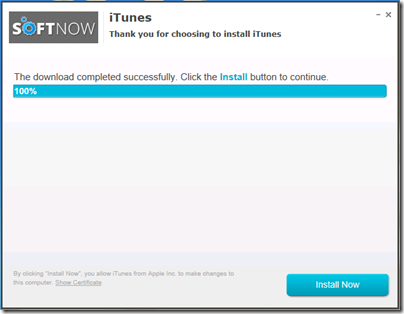

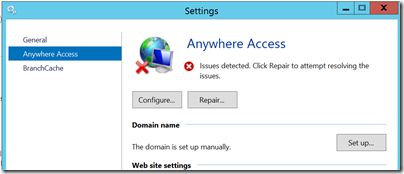

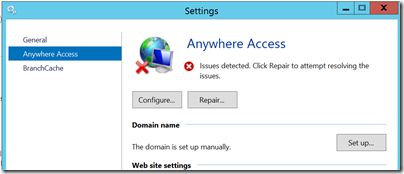

The second annoyance was with the Anywhere Access wizard. This is for enabling users to log in over the internet and access limited server features, and connect to their client desktop. I ran the wizard, installed a valid certificate, used a valid DNS name, manually opened port 443 on the external firewall, but still got verification errors.

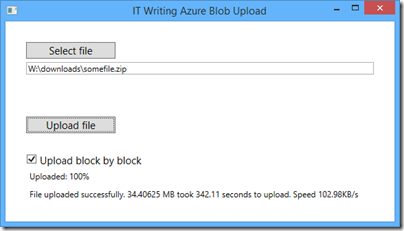

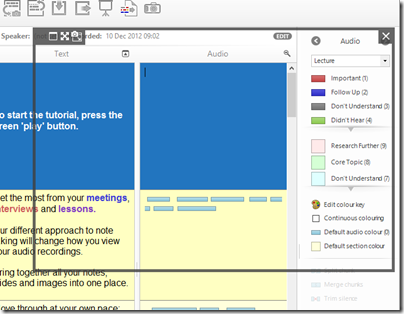

Clicking Repair is no help. However, Anywhere Access works fine. I captured this screenshot from a remote session:

All of the above is normal business for Microsoft partners, but does illustrate why small businesses that take on this kind of task without partner assistance may well run into difficulties.

Looking at the sloppy documentation and missing pieces, I do get the impression that Microsoft cares little about the numerous small businesses trundling away on old versions of SBS, but which now need to migrate. Why should it, one might observe, considering how little it charges for SBS 2012 Essentials? It is a fair point; but I would argue that looking after the small guys pays off, since some grow into big businesses, and even those that do not grow still form a large business sector in aggregate. Google Apps, one suspects, is easier.

An underlying issue, as ever with SBS, is that Windows Server, and in particular Active Directory, is designed for large-scale setups, and while SBS attempts to disguise the complexity, it is all there underneath and cannot always be ignored.

In mitigation, I have to say that for businesses like the one described above SBS has done a solid job with relatively little attention over many years, which is why it is worth some pain in installation.

Update: A couple of further observations and tips.

Concerning remote access, I suspect the wizard wants to see port 80 open and directed to the server. However, this is not necessary as far as I can tell. It is also worth noting that Server Essentials R2 installs TS Gateway (now called Remote Desktop Gateway), which means you can configure RDP direct to the server desktop (rather than to the limited dashboard you get via the Anywhere Access site).
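Since the wizard's verification cannot be trusted, a quick independent check of which ports actually answer from outside is useful. Here is a small Python sketch using only the standard library; run it from a machine outside the office network. The function names are my own and `remote.example.com` in the usage note is a placeholder for your own external DNS name.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and DNS lookup failures.
        return False


def check_ports(host: str, ports=(443, 80)) -> dict[int, bool]:
    """Check each port in turn and return a {port: reachable} mapping."""
    return {port: port_is_open(host, port) for port in ports}
```

For example, `check_ports("remote.example.com")` returning `{443: True, 80: False}` would match the setup described here: HTTPS forwarded to the server, port 80 closed. Note this only proves that something accepts TCP connections on the port, not that the certificate or the Anywhere Access site itself is healthy.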

The documentation, such as it is, suggests that you use the router for DHCP. Personally I prefer to have this on the server, and it also saves time and avoids errors since you can import the DHCP configuration to the new server.