Yesterday Microsoft announced Windows Nano Server, which is essentially an installation option that is even more stripped-down than Server Core. Server Core, introduced with Windows Server 2008, removed the GUI in order to make the OS lighter weight and more secure. It is particularly suitable for installations that do nothing more than run Hyper-V to host VMs. You want your Hyper-V host to be rock-solid, and removing unnecessary clutter makes sense.

There was more to the strategy than that, though, and it was at last week’s ChefConf in Santa Clara (attended by both Windows Server architect Jeffrey Snover and Azure CTO Mark Russinovich) that the pieces fell into place for me. Here are three key areas which Snover has worked on over the last 16 years or so (he joined Microsoft in 1999):

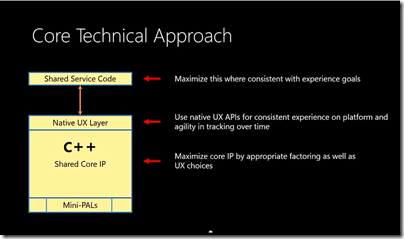

- PowerShell, first announced as “Monad” in August 2002 and presented at the PDC conference in October 2003. Originally presented as a scripting platform, it is now described as an “automation engine”, though it is still pretty good for scripting.

- Windows Server componentisation, that is, the ability to configure Windows Server by adding and removing components. Server Core was a sign of progress here, especially in the Server 2012 version where you can move seamlessly between Core and full Windows Server by adding or removing the various pieces. It is still not perfect, mainly because of dependencies that make you drag in more than you might really want when enabling a specific feature.

- PowerShell Desired State Configuration, introduced in Server 2012 R2, which puts these together by letting you define the state of a server in a declarative configuration file and apply it to an OS instance.
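To make the declarative idea concrete, here is a minimal sketch in Python of how a desired-state approach works in general. This is not PowerShell DSC or its API: the `Resource` class and the `plan` and `apply_plan` functions are hypothetical names made up for illustration, and the feature names are just example strings. The point is that you describe what the server should look like, and a tool works out the steps needed to get there.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    """One line of a hypothetical declarative configuration."""
    name: str    # example feature name, used here purely for illustration
    ensure: str  # "Present" or "Absent"


def plan(desired, installed):
    """Compare the desired state with what is installed and list the actions needed."""
    actions = []
    for res in desired:
        if res.ensure == "Present" and res.name not in installed:
            actions.append(("install", res.name))
        elif res.ensure == "Absent" and res.name in installed:
            actions.append(("remove", res.name))
    return actions


def apply_plan(actions, installed):
    """Return the new set of installed features after carrying out the actions."""
    result = set(installed)
    for verb, name in actions:
        if verb == "install":
            result.add(name)
        else:
            result.discard(name)
    return result


# The declarative part: what we want, not how to get there.
desired = [
    Resource("Web-Server", "Present"),
    Resource("Server-Gui-Shell", "Absent"),
]

# An assumed starting state for the example.
installed = {"Server-Gui-Shell", "Hyper-V"}

actions = plan(desired, installed)
new_state = apply_plan(actions, installed)

print(actions)
print(sorted(new_state))
print(plan(desired, new_state))  # nothing left to do once the state matches
```

Running the plan a second time against the new state yields no actions. That property (applying the same configuration repeatedly is harmless) is what makes this style of configuration suitable for automation.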

I am not sure how much of this strategy was in Snover’s mind when he came up with PowerShell, but today it looks far-sighted. The role of a server OS has changed since Windows first entered this market, with Windows NT in 1993. Today, when most server instances are virtual, the focus is on efficiency (making maximum use of the hardware) and agility (quick configuration and on-demand scaling). How is that achieved? Two things:

1. For efficiency, you want an OS that runs only what is necessary to run the applications it is hosting, and on the hypervisor side, the ability to load the right number of VMs to make maximum use of the hardware.

2. For agility, you want fully automated server deployment and configuration. We take this for granted in cloud platforms such as Amazon Web Services and Azure, in that you can run up a new server instance in a few minutes. However, there is still manual configuration on the server once launched. Azure web apps (formerly web sites) are better: you just upload your application. Better still, you can scale it by adding or removing instances with a script or through the web-based management portal. Web apps are limited though and for more complex applications you may need full access to the server. Greater ability to automate the server means that the web app experience can become the norm for a wider range of applications.

Nano Server is more efficient. Look at these stats (compared to full Server):

- 93 percent lower VHD size

- 92 percent fewer critical bulletins

- 80 percent fewer reboots

Microsoft has removed not only the GUI, but also 32-bit support and MSI (I presume the Windows Installer services). Nano Server is designed to work well on both sides of the hypervisor, either hosting Hyper-V or itself running in a VM.

Microsoft has also improved automation:

“All management is performed remotely via WMI and PowerShell. We are also adding Windows Server Roles and Features using Features on Demand and DISM. We are improving remote manageability via PowerShell with Desired State Configuration as well as remote file transfer, remote script authoring and remote debugging.”

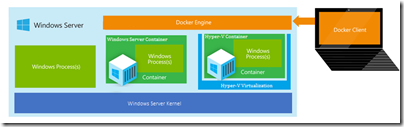

Returning for a moment to ChefConf, the DevOps concept is that you define the configuration of your application infrastructure in code, as well as that for the application itself. Deployment can then be automated. Or you could use the container concept to build your application as a deployable package that has no dependencies other than a suitable host. This is where Microsoft’s other announcement from yesterday comes in: Hyper-V Containers, which provide a high level of isolation without quite being a full VM. There are also the already-announced Windows Server Containers, which are similar but a bit less isolated.

This is the right direction for Windows Server, though the detail to be revealed at the Build and Ignite conferences in a few weeks’ time will no doubt show limitations.

A bigger issue, though, is whether the Windows Server ecosystem is ready to adapt. I spoke to an attendee at ChefConf who told me his Windows servers were more troublesome than Linux. “Do you use Server Core?” I asked. “No,” he said, “we like to be able to log on to the GUI.” It is hard to change the culture so that running a GUI on the server is no longer the norm. The same applies to third-party applications: what will be the requirements if you want to install on Nano Server (no MSI)? Even if Microsoft has this right, it will take a while for its users to catch up.