I realise that Microsoft’s Entity Framework is the most common approach for data access in the .NET world, but I have also always had good results from a simple manual approach using DbConnection, DbCommand and DataReader objects, and like the fact that I can see and control exactly what SQL gets executed. If you prefer using Entity Framework or another abstraction that is fine and please stop reading now!

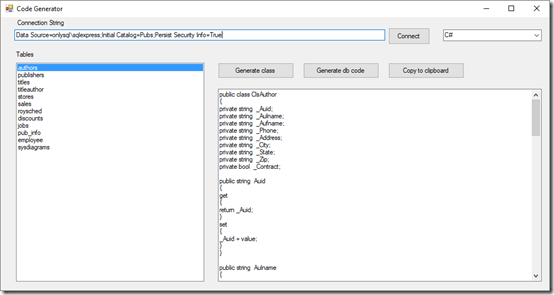

One snag with this more manual approach is that you have to write tedious code building SQL statements. I figured that someone must have written a utility application to generate this code but could not find one quickly so I did my own. It supports both C# and Visual Basic. The utility connects to a database and lets you generate a class for each table along with code for retrieving and saving these objects, ready for modification. Here you can see a generated class:

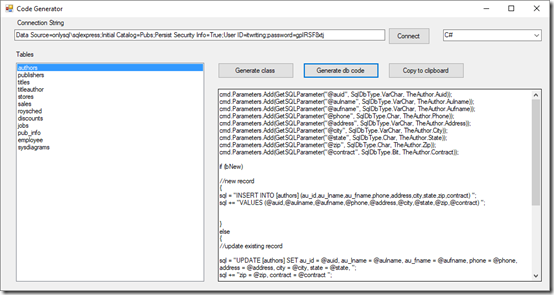

and here is an example of the generated data access code:

This is NOT complete code (otherwise I would be perilously close to writing my own ORM) but simply automates creating SQL parameters and SQL statements.

One of my thoughts was that this code should work well with .NET core. The SQLClient implements the required classes. Here is my code for retrieving an author object, mostly generated by my utility:

public static ClsAuthor GetAuthor(string authorID)

{

SqlConnection conn = new SqlConnection(ConnectString);

SqlCommand cmd = new SqlCommand();

SqlDataReader dr;

ClsAuthor TheAuthor = new ClsAuthor();

try

{

cmd.CommandText = "Select * from Authors where au_id = @auid";

cmd.Parameters.Add("@auid", SqlDbType.Char);

cmd.Parameters[0].Value = authorID;

cmd.Connection = conn;

cmd.Connection.Open();

dr = cmd.ExecuteReader();

if (dr.Read()) {

//Get Function

TheAuthor.Auid = GetSafeDbString(dr, "au_id");

TheAuthor.Aulname = GetSafeDbString(dr, "au_lname");

TheAuthor.Aufname = GetSafeDbString(dr, "au_fname");

TheAuthor.Phone = GetSafeDbString(dr, "phone");

TheAuthor.Address = GetSafeDbString(dr, "address");

TheAuthor.City = GetSafeDbString(dr, "city");

TheAuthor.State = GetSafeDbString(dr, "state");

TheAuthor.Zip = GetSafeDbString(dr, "zip");

TheAuthor.Contract = GetSafeDbBool(dr, "contract");

}

}

finally

{

conn.Close();

conn.Dispose();

}

return TheAuthor;

}

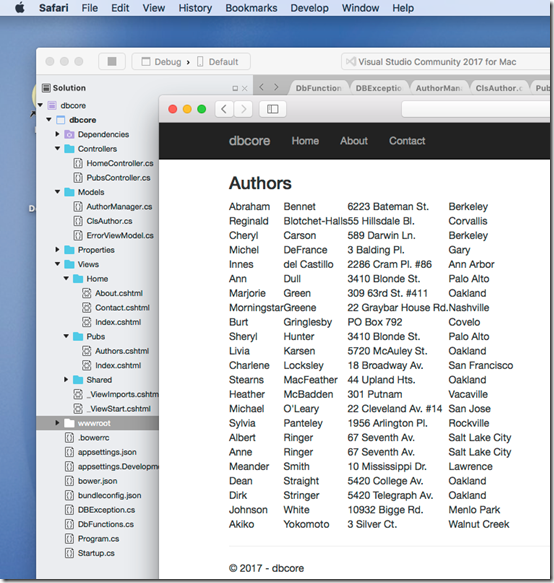

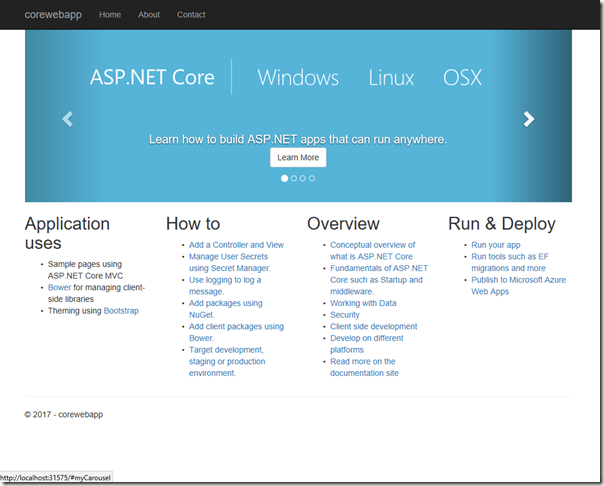

Everything worked perfectly and I soon had a table showing the authors, using ASP.NET MVC.

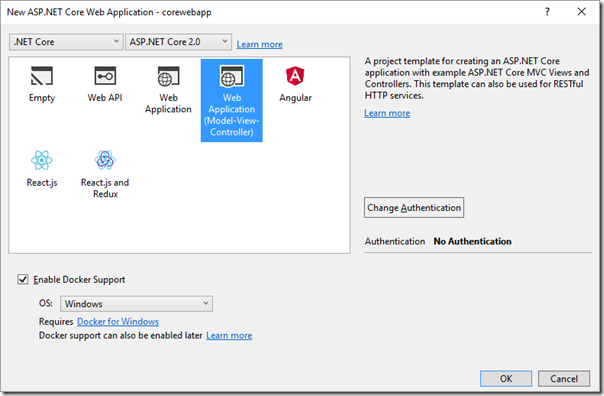

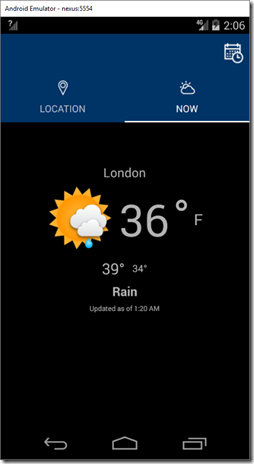

In order to verify that it really does work with .NET Core I moved the project to Visual Studio Mac and ran it there:

I may be unusual; but I am reassured that I have a relatively painless way to write a database application for .NET Core without using Entity Framework.