I am just back from Microsoft’s developer-focused Build event, where some special sessions were laid on for press, on the subject of “Modern Journalism.”

Led by Microsoft’s Ben Rudolph, Modern Journalism is described on his public LinkedIn profile as “a new program committed to helping the news industry fight fake news, tell stories that resonate with modern audiences, and succeed financially.”

The sessions appealed to me for one particular reason, which was the promise of automatic transcription. We were given a leaflet which says:

Tired of digging through hours of recordings to find that one quote? When you record a Teams interview, it’s saved to Microsoft Stream. Here you’ll get game-changing AI features: searchable transcript to jump to exact moments a key word or phrase was used.

Before the transcription thing though, we were taken on a tour of OneNote and Word with AI. The latest AI Editor in Word will tighten up your prose and find gaffes like non-inclusive language. There is a lack of clarity over the privacy implications (these features work by uploading everything you type to Microsoft) but perhaps it is useful. I make plenty of typographical errors and would welcome help, though I remain sceptical about the extent to which AI can deliver this.

On to transcription though. Just hit record during a voice or video meeting in Teams, Microsoft’s Office 365 collaboration tool, and it gets automatically transcribed.

Unfortunately I do not use Teams for interviews, though it is possible to use it even for in-person interviews by having a meeting of one and recording it. I am wary though. I normally use an external recording device. Many years ago my device failed (I forget whether it was the battery or something else) and I used my Tablet PC to record an interview with the game inventor Peter Molyneux. My expectations were not particularly high – I just wanted something good enough that I could transcribe it later. Unfortunately the recording was so poor that I could only make out about one word in ten. This, combined with my written notes and memory, was just about sufficient to write up my piece; but it was not an experiment I felt inclined to repeat, though recording quality has improved since that early disaster.

Still, automatic transcription would be an amazing time-saver. Further, I respect what can be achieved. Nuance Dragon Dictate can give superb results after a bit of training. What about Teams?

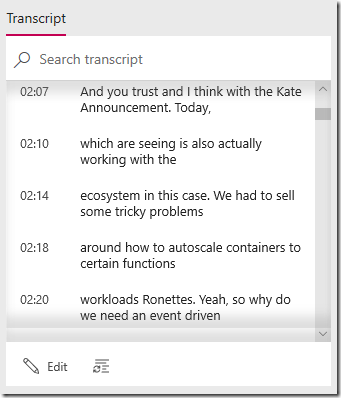

Today I put the idea to the test. I took a recorded interview from Build, made with a dedicated device, and uploaded it to Microsoft Stream. I tried uploading an audio file directly, but it would not accept it. I then created a “video” by importing my audio into a one-slide PowerPoint presentation and exporting it as a video. The audio quality is fine and easily intelligible. Stream chewed on it for maybe 30 minutes, and then my transcript was ready. The subject was the Azure Kubernetes Service. Here is a snippet of what Stream came up with:

There is an unnecessary annoyance here, which is that you cannot easily select and copy the entire transcript. Notice that it is in short snippets. The best way to get the whole thing is to click the three dots under the video, choose Update Video Details, and then download the caption file.

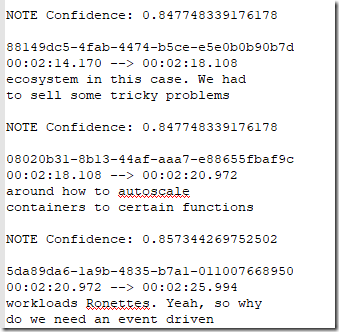

Now you get something like this:

The format is, shall we say, sub-optimal for journalists, though it would not take too long to write a script that would extract the text.
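As a rough illustration of such a script, here is a minimal Python sketch. It assumes the downloaded caption file is in a WebVTT-style layout: blocks separated by blank lines, each cue having an optional identifier line, a timing line containing `-->`, and then the spoken text. That layout is my assumption rather than something documented here, and the function name `captions_to_text` is purely illustrative.

```python
import re

# A cue timing line looks like "00:00:01.000 --> 00:00:04.000"
# (assumed WebVTT-style; a comma before the milliseconds is also accepted).
TIMING = re.compile(r"\d{2}:\d{2}(?::\d{2})?[.,]\d{3}\s+-->\s+")


def captions_to_text(caption_data: str) -> str:
    """Join the spoken text of a WebVTT-style caption file into one string."""
    pieces = []
    normalised = caption_data.replace("\r\n", "\n").strip()
    # Blocks (header, notes, cues) are separated by blank lines.
    for block in re.split(r"\n\s*\n", normalised):
        lines = [ln.strip() for ln in block.split("\n") if ln.strip()]
        for i, ln in enumerate(lines):
            if TIMING.match(ln):
                # Everything after the timing line is caption text.
                # Blocks with no timing line (header, notes) are skipped.
                pieces.extend(lines[i + 1:])
                break
    return " ".join(pieces)
```

Reading the caption file into a string and passing it to `captions_to_text` should give one continuous run of text that can be pasted into Word. The timestamps are thrown away, so this suits reading and editing rather than locating a quote in the recording.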

The bigger problem is the actual transcription. The section I have chosen is wrong in an interesting way. Here is part of what was said:

With the KEDA announcement today, what you’re seeing is us working with the ecosystem, in this case Red Hat, to solve some tricky problems around how to autoscale containers.

and here is the transcription:

with

the Kate Announcement. Today, which are seeing is also

actually working with the ecosystem in this case. We had

to sell some tricky problems around how to autoscale containers

Many of the words are correct, but the meaning is scrambled. Red Hat has been transcribed as “we had”, losing a critical part of the content.

It is not my intention to rubbish this technology. Automatic transcription is very challenging, especially with specialist content. It is not unreasonable for the system to transcribe KEDA as “Kate”: it is a brand new acronym (Kubernetes-based event-driven autoscaling).

Still, the question I ask myself is whether fixing up the auto transcription will save me any time versus the old-fashioned approach. I use a Word macro that plays back the interview with hot keys to pause and backtrack, editing as I go.

The answer is no. It will take me as long or longer to make sense of the automatic transcription, by comparing it to the original, than to type it from scratch.

This might not always be the case. Perhaps with a more AI-friendly subject the transcription will be good enough to save some time. It could also help to find where in the recording a particular quote appears. So it is not altogether useless.

Transcription is difficult, but there are some simpler matters which Microsoft could improve: enabling upload of audio files rather than video, for example, and providing a continuous transcript that can easily be copied.

Having a team within Microsoft rooting for journalists strikes me as a good thing in that an internal team may have more influence over the products.

It may be more a matter of some bright spark thinking, “Hey, if we get more journalists using Office 365, that will help to promote the product.” That strategy will be more successful if effort goes into making the product fit better with the way journalists actually work.