NVIDIA has announced CUDA 4.0, a major update to its C++ toolkit for general-purpose programming on the GPU. The idea is to use the many cores of NVIDIA’s GPUs to speed up tasks that may not be graphics-related.

There are three key features:

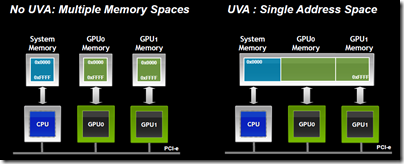

Unified Virtual Addressing provides a single address space for the main system RAM and the GPU RAM, or even RAM across multiple GPUs if available. This significantly simplifies programming, because the runtime can tell from a pointer’s value which memory it refers to.
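To make that concrete, here is a minimal sketch of what a unified address space allows. It assumes a 64-bit system and a GPU that supports Unified Virtual Addressing; the function name `uva_round_trip` is made up for illustration. The point is the `cudaMemcpyDefault` flag, which leaves it to the runtime to work out the copy direction from the pointers.

```cuda
#include <cuda_runtime.h>
#include <vector>

// Hypothetical helper: copies a host buffer to the GPU and back again.
// Returns true if allocation and both copies succeed.
bool uva_round_trip(const std::vector<float>& input, std::vector<float>& output) {
    const size_t bytes = input.size() * sizeof(float);
    output.resize(input.size());

    float* d_buf = nullptr;
    if (cudaMalloc((void**)&d_buf, bytes) != cudaSuccess) return false;

    // With UVA there is no need to say HostToDevice or DeviceToHost:
    // cudaMemcpyDefault lets the runtime infer the direction from the pointers.
    cudaError_t up   = cudaMemcpy(d_buf, input.data(), bytes, cudaMemcpyDefault);
    cudaError_t down = cudaMemcpy(output.data(), d_buf, bytes, cudaMemcpyDefault);

    cudaFree(d_buf);
    return up == cudaSuccess && down == cudaSuccess;
}
```

Before CUDA 4.0 each of those copies would have needed an explicit direction, which is one more thing to get wrong when porting code.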

GPUDirect 2.0 is NVIDIA’s name for peer-to-peer communication between multiple GPUs on the same computer. Instead of copying data from one GPU to main memory and then on to a second GPU, the data can go directly from one GPU to the other.
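A rough sketch of how a direct GPU-to-GPU copy might look with the CUDA runtime API follows. It assumes two GPUs in the same machine and buffers already allocated on each; the helper name `copy_between_gpus` is hypothetical. Whether the copy really bypasses main memory depends on the hardware, which is why the code asks first.

```cuda
#include <cuda_runtime.h>
#include <cstddef>

// Hypothetical helper: copies `bytes` from `src` on device `src_dev`
// to `dst` on device `dst_dev`.
cudaError_t copy_between_gpus(void* dst, int dst_dev,
                              const void* src, int src_dev, size_t bytes) {
    // Ask whether dst_dev can address src_dev's memory directly.
    int can_access = 0;
    cudaError_t err = cudaDeviceCanAccessPeer(&can_access, dst_dev, src_dev);
    if (err != cudaSuccess) return err;

    if (can_access) {
        err = cudaSetDevice(dst_dev);
        if (err != cudaSuccess) return err;
        // Enable peer access; tolerate the case where it is already on.
        err = cudaDeviceEnablePeerAccess(src_dev, 0);
        if (err == cudaErrorPeerAccessAlreadyEnabled) {
            cudaGetLastError();  // clear the recorded error
        } else if (err != cudaSuccess) {
            return err;
        }
    }

    // cudaMemcpyPeer also works without peer access, but then the
    // runtime may stage the data through host memory.
    return cudaMemcpyPeer(dst, dst_dev, src, src_dev, bytes);
}
```

The fallback matters in practice: the same call works on machines where the GPUs cannot reach each other directly, just more slowly.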

Thrust C++ template libraries. Thrust is a CUDA library of parallel algorithms modeled on the C++ Standard Template Library (STL). NVIDIA claims that typical Thrust routines are 5 to 100 times faster than their equivalents in the STL or Intel’s Threading Building Blocks. Thrust is not really new but is being pushed into the mainstream of CUDA programming.
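For anyone who knows the STL, Thrust code should look familiar. The sketch below sorts and sums a vector on the GPU; the function name `sort_and_sum_on_gpu` is invented for this example, and it is only meant to show the flavor of the library, not to back up any performance claim.

```cuda
#include <thrust/copy.h>
#include <thrust/device_vector.h>
#include <thrust/reduce.h>
#include <thrust/sort.h>
#include <vector>

// Hypothetical helper: sorts `values` in place using the GPU
// and returns the sum of its elements.
int sort_and_sum_on_gpu(std::vector<int>& values) {
    // Constructing a device_vector from host iterators copies the data to the GPU.
    thrust::device_vector<int> d_values(values.begin(), values.end());

    // Same shape as std::sort and std::accumulate, but run on the device.
    thrust::sort(d_values.begin(), d_values.end());
    int total = thrust::reduce(d_values.begin(), d_values.end(), 0);

    // Copy the sorted data back into the host vector.
    thrust::copy(d_values.begin(), d_values.end(), values.begin());
    return total;
}
```

Note that there is no kernel, no `cudaMalloc` and no explicit copy call here, which is much of Thrust's appeal for code being ported from ordinary C++.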

Other new features include debugging (cuda-gdb) support on Mac OS X, support for new/delete and virtual functions in C++, and improvements to multi-threading.
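As an illustration of the C++ additions, here is a small sketch that uses `new`, `delete` and a virtual function inside GPU code. It assumes a GPU recent enough to support these features in device code (Fermi-class or later); the `Shape` and `Square` types and the function `square_area_on_gpu` are hypothetical names chosen for the example.

```cuda
#include <cuda_runtime.h>

// Hypothetical class hierarchy, used only on the device.
struct Shape {
    __device__ virtual float area() const = 0;
    __device__ virtual ~Shape() {}
};

struct Square : Shape {
    float side;
    __device__ explicit Square(float s) : side(s) {}
    __device__ float area() const { return side * side; }
};

__global__ void area_kernel(float side, float* out) {
    // The object is allocated on the device heap, inside the kernel.
    Shape* shape = new Square(side);
    if (shape == NULL) { *out = -1.0f; return; }  // device heap exhausted
    *out = shape->area();  // virtual call resolved on the GPU
    delete shape;
}

// Runs the kernel with a single thread; returns -1 on any CUDA error.
float square_area_on_gpu(float side) {
    float* d_out = NULL;
    float result = -1.0f;
    if (cudaMalloc((void**)&d_out, sizeof(float)) != cudaSuccess) return -1.0f;
    area_kernel<<<1, 1>>>(side, d_out);
    if (cudaMemcpy(&result, d_out, sizeof(float), cudaMemcpyDeviceToHost) != cudaSuccess) {
        result = -1.0f;
    }
    cudaFree(d_out);
    return result;
}
```

The object is created inside the kernel on purpose: an object with virtual functions that was constructed on the host cannot have those functions called on the device.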

The common theme of these features is to make it easier for mortals to move from general C/C++ programming to CUDA programming, and to port existing code. This is how NVIDIA sees CUDA progress:

Certainly I see increasing interest in GPU programming, and not just among supercomputer researchers.

A weakness is that CUDA only works on NVIDIA GPUs. You can use OpenCL for generic GPU programming, but it is less advanced.

The CUDA 4.0 release candidate will be available from March 4 if you sign up for the CUDA Registered Developer Program.