One intriguing new feature in Micrsosoft’s SQL Server 2014 is the ability to create or attach databases whose files are in Azure blog storage. This sounds like something that would not work at all well: why would you want a database engine to mount files located hundreds or thousands of miles away? However, the feature is apparently baked deeply into SQL Server, according to this white paper (which is essential reading if you want to know more):

SQL Server 2014 integration with Windows Azure blob storage occurs at a deep level, directly into the SQL Server Storage Engine; SQL Server Data Files in Windows Azure is more than a simple adapter mechanism built on top of an existing software layer.

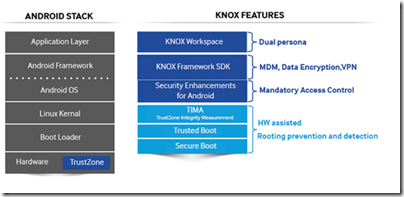

· The Manager Layer includes a new component called XFCB Credential Manager, which manages the security credentials necessary to access the Windows Azure blob containers and provides the necessary security interface; secrets are maintained encrypted and secured in the SQL Server built-in security repository in the master system database.

· The File Control Layer contains a new object called XFCB, which is the Windows Azure extension to the file control block (FCB) used to manage I/O against each single SQL Server data or log file on the NTFS file system; it implements all the APIs that are required for I/O against Windows Azure blob storage.

· At the Storage Layer, the SQL Server I/O Manager is now able to natively generate REST API calls to Windows Azure blob storage with minimal overhead and great efficiency; in addition, this component can generate information about performance counters and extended events (xEvents).

It also seems that the main target usage is SQL Server running on Azure VMs in the same region as the blog storage, removing latency concerns, though the wording of the explanation is curious, implying almost that on-premise connection is supported but should not be:

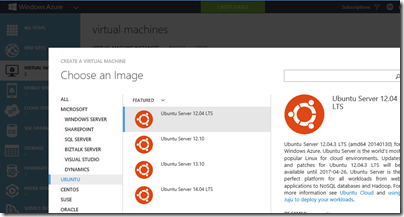

Although it is theoretically possible and officially supported, using an on-premises SQL Server 2014 installation and database files in Windows Azure blob storage is not recommended due to high network latency, which would hurt performance; for this reason, the main target scenario for this white paper is SQL Server 2014 installed in Windows Azure Virtual Machines (IaaS). This scenario provides immediate benefits for performance, data movement and portability, data virtualization, high availability and disaster recovery, and scalability limits.

If you use blob storage in this way on an Azure VM, then I/O goes through the Virtual Network Driver, whereas an Azure data disk uses the Virtual Disk Driver. This nicety may be the main reason to consider the feature.

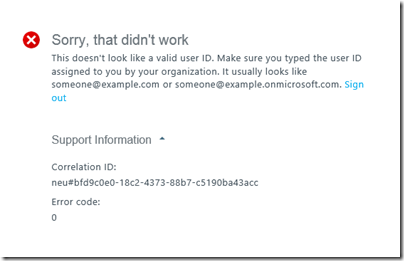

I tried both scenarios: on-premise and from an Azure VM. I had some difficulty getting started, despite this seemingly exhaustive tutorial. I followed it, I thought, to the letter, but got either the error:

Unable to open the physical file "https://myaccount.blob.core.windows.net/sqldata/azuredb.mdf". Operating system error 86: "86(The specified network password is not correct.)".

or else

CREATE FILE encountered operating system error 1117(The request could not be performed because of an I/O device error.) while attempting to open or create the physical file https://myaccount.blob.core.windows.net/sqldata/azuredb.mdf

The problem turned out to relate to the Shared Access Signature required. The supposedly exhaustive tutorial merely refers you to the CloudBlobContainer.GetSharedAccessSignature method in the Azure SDK and offers an incomplete code snippet. I wrote C# code for this and was able to generate a Shared Access Signature but it did not work (see above). I found myself in the depths of the Azure SDK, wondering if I should use version 2.1 or 3.0, and whether I should use Microsoft.WindowsAzure.StorageClient.CloudBlobClient or Microsoft.WindowsAzure.Storage.Blob.CloudBlobClient. The tutorial is also not clear about exactly which part of the Shared Access Signature you should store in the SQL Server Credential Manager; it is a multipart string separated by ampersands.

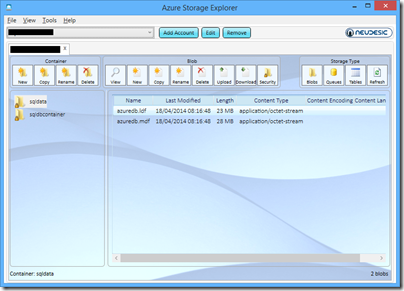

I have still not fully worked it out, but discovered the very helpful Azure Storage Explorer on CodePlex. If you follow the instructions in the white paper referenced above, and use the Azure Storage Explorer to generate the Shared Access Signature, then it works. The project is open source, so with a little effort it should be possible to find and document the exact requirements.

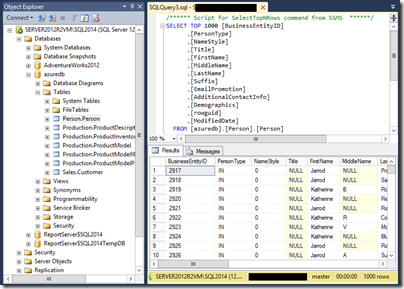

I tried creating and using a database from my on-premise SQL Server 2014 and I find the performance remarkably good, considering. There is no doubt some smart caching going on under the covers. Selecting 1000 rows from a table took 11 seconds the first time, and was instant the second time. It seems to me viable, on my brief look, though I am not sure why you would want to do this. However it is a good demonstration of how cloud and on-premise are coming ever-closer.

Running from an Azure VM in the same region is a different case, though I would suggest detailed and intensive testing before going into production.