I’ve reviewed dozens of wireless headphones and earphones in the last six months, enough that I’m not easily impressed. The B&O HX wireless headphones are an exception; as soon as I heard them I was delighted with the sound and have been using them frequently ever since.

These are premium wireless headphones: over-ear, but relatively compact and lightweight at 285g. They are an upgrade of the previous model, the Beoplay H9, with longer battery life (35 hours), upgraded ANC (Active Noise Cancellation), and four microphones in place of two. They are not the top of the B&O range; for that you need to spend quite a bit more on the H95. Gamers are directed to the similarly priced Portal model, which has a few more features (Dolby Atmos, Xbox Wireless) but shorter battery life and no case. It is worth considering, as it probably sounds equally good, but I do not have a Portal to compare.

What you are paying for with the HX is a beautiful minimalist design and excellent sound quality. The sound is clean, sweet and exceptionally clear, perfect for extended listening sessions. Trying these out becomes a matter of “I just want to hear how they sound on [insert another favourite track]”; they convey every detail and are superbly tuneful. It is almost easier to describe what they don’t do: the bass is not distorted or exaggerated, notes are not smeared, and they are never harsh. Listening to an old favourite like Kind of Blue by Miles Davis, you can follow the bass lines easily and hear every nuance of the percussion. Applause on Alison Krauss and Union Station Live sounds like it does at a concert: many hands clapping. Listening to Richard Thompson’s guitar work on Acoustic Classics, you get a sense of the texture of the strings, plus amazing realism in the vocals. The drama of the opening bars of Beethoven’s 9th Symphony, performed by the New York Philharmonic conducted by Leonard Bernstein, is wonderfully communicated.

In other words, if you are a hi-fi enthusiast, the HX will remind you why. I could also easily hear the difference between the same tracks streamed on Spotify and played from CD or as high-res files on my Sony player. The HX also supports aptX HD.

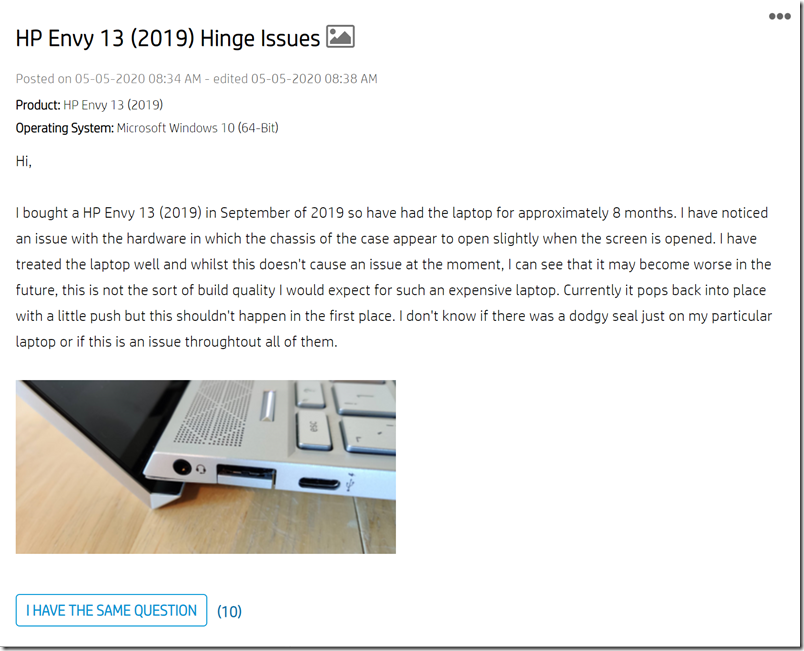

That said, there are unfortunately a few annoyances. They are not deal-breakers, but they must be mentioned. First, the HX has touch controls for play/pause, volume, and skip track. I dislike touch controls because you get no tactile feedback and they are easy to trigger accidentally. With the HX you play/pause by tapping the right earcup. It’s not pleasant even when it works, because you get a thump in your ear, and half the time you don’t hit it quite right, or you think you haven’t, tap again, then realise you did and have now tapped twice. Swiping to skip tracks works better, but volume is not so good: you have to use a circular motion, and it is curiously difficult to make a small change. Nothing seems to happen, then the volume jumps up or down. Or you trigger skip track by mistake. Ugh.

Luckily there are some real buttons on the HX. These cover power on/off and ANC, which cycles between on, transparent mode (hear external sounds) and off. There is also a multi-function button that activates your voice assistant, if you use one.

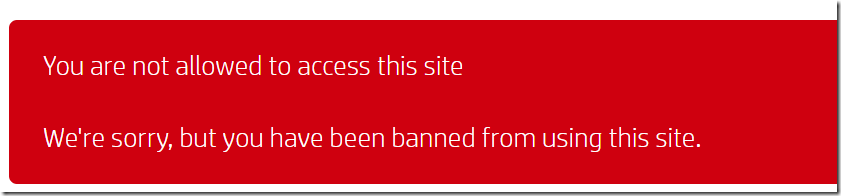

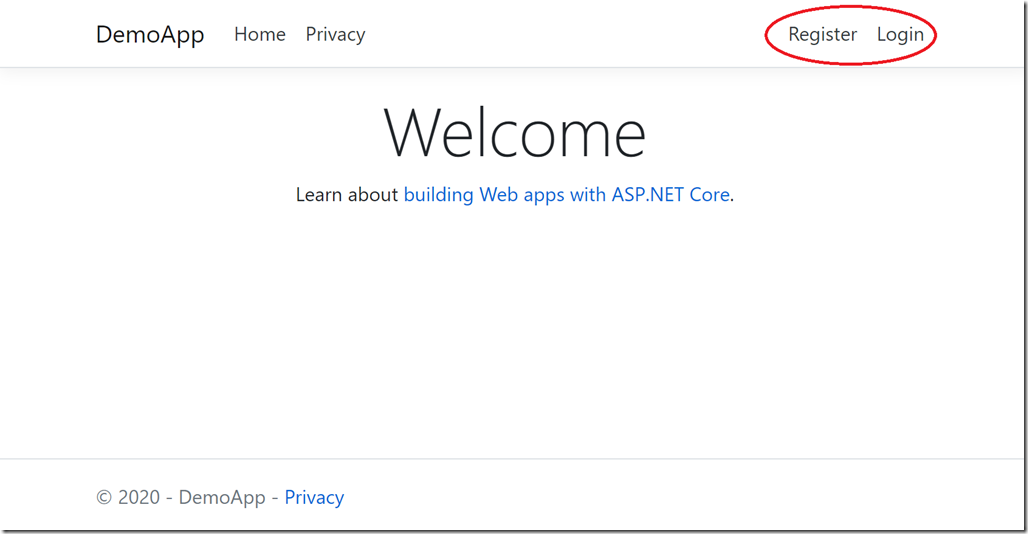

Want to use your headphones? Please sign in

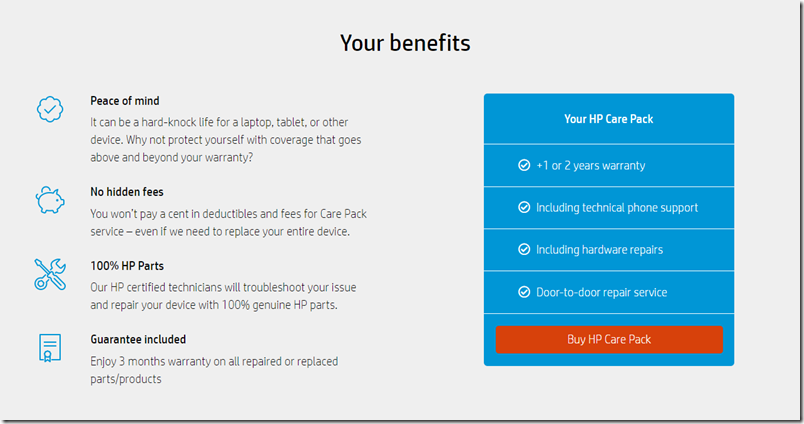

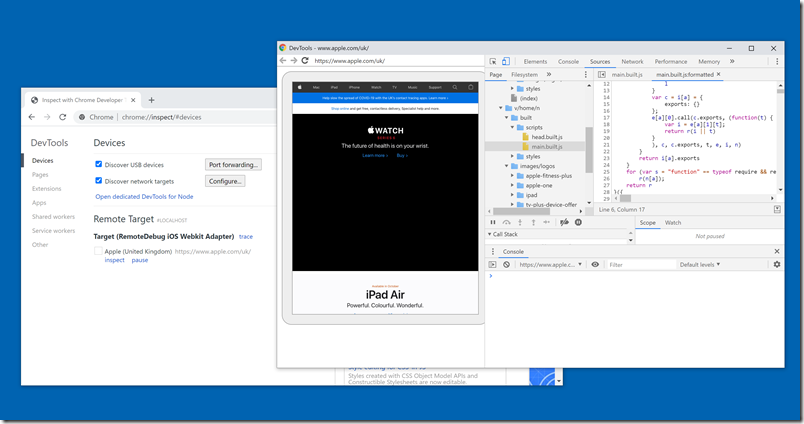

Second, there is an app, which I tried on an iPad. The major annoyance here is that it does not work at all unless you create an account with B&O and sign in. Why should you have to sign in to use your headphones? What are the privacy implications? That aside, I had a few issues getting the app to find the HX, but once I succeeded I found a few extra features. In particular, you can choose listening modes, which are really EQ presets, or create your own EQ using an unusual graphic controller that sets a balance between Bright, Energetic, Warm and Relaxed. You can also update the firmware and turn wear detection (which pauses playback when you remove the headphones) on or off. Finally, and perhaps most important, you can tune the ANC, though changes don’t seem to persist if you then use the ANC button. You can also enable Adaptive ANC, which is meant to adjust the level of noise cancellation automatically according to your surroundings. It didn’t seem to make much difference to me, but maybe it does if you are moving about.

The ANC is pretty good, though. I have a simple test: I work in a room with a constant hum from servers, and ANC should cut out this noise. It does. Further, engaging ANC barely changes the sound other than removing the noise, which is how it should work.

There is a 3.5mm jack for wired use, but with two important limitations. First, it does not work at all if the battery is flat; the headphones must be turned on. Second, the jack lacks the extra contact needed for calls, so wired use is for listening only. The sound quality was no better wired, perhaps slightly worse, so the wired option is of limited value.

Despite a few annoyances, I really like these headphones. I doubt I will use the app other than for firmware updates, and I will rarely bother with the touch controls, which leaves me free to enjoy the lovely sound, elegant design, good noise cancelling, and comfortable fit.