Pipelines is an Azure service that enables a powerful feature: the ability to set up continuous integration. I have tangled with it before, in the context of trying Azure Kubernetes Service, but managed to avoid getting deep into the YAML which is the language of Pipelines. I am working on a web application and trying to get it up to scratch as quickly as possible, especially as there are now a bunch of users who are being patient over glitches during development but whose patience may run out.

The application uses .NET Core which for the most part is working well for me. I am using Visual Studio 2019, with occasional forays into Visual Studio Code (VS Code), and deploying to a Linux Azure App Service. Everything was fine until one day when the Web Deploy feature in Visual Studio stopped working with “could not complete the request to remote agent … the operation has timed out.” I appealed for help but with no result yet.

All was not lost as I found that the VS Code Deploy to Azure extension worked pretty well. All I needed to do was to open the solution folder in VS Code, run:

dotnet publish -c Release -o ./publish

in the terminal, then right-click the publish folder and choose Deploy to web app. There are a few annoyances but it solved the immediate problem.

One can do better though. Rather than manually deploying, you can create a pipeline using Azure DevOps (the thing that was once called Visual Studio Online and is the cloud version of Team Foundation Server). An attraction of using Azure DevOps is that you get “1 Microsoft-hosted job with 1,800 minutes per month for CI/CD” free, which seems decent.

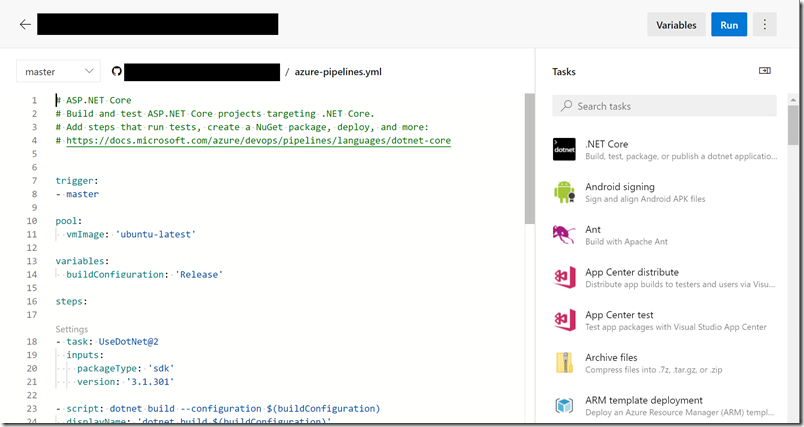

I got started, creating an Azure DevOps project and adding a pipeline. You authorize it to access your GitHub repository (if that is what you have, as I do) and then end up in an editor that looks like this:

I soon got frustrated. The Pipelines service seems fundamentally excellent but spoilt by poor documentation and some odd behaviour – at least for .NET Core. It took me hours to achieve a basic setup that would build, test and deploy my simple web application. Most of the time was spent observing pipelines fail to run and trying to figure out why.

When you run a pipeline you may notice that it uses .NET Core 2.1 and warns that 2.2 and 3.0 are end of life:

How do you get it to use .NET Core 3.1? You can add a task called UseDotNet@2 and specify the version. I put 3.1 and it was rejected as it likes a full version. I put 3.1.301 and it worked.
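For reference, the step looks something like the sketch below. The displayName is my own label; the version is the full SDK version mentioned above, and packageType is set to sdk on the assumption that you are building rather than just running.

```yaml
steps:
# Install a specific .NET Core SDK on the build agent before any dotnet commands run
- task: UseDotNet@2
  displayName: 'Use .NET Core SDK 3.1.301'  # label only, choose your own
  inputs:
    packageType: 'sdk'
    version: '3.1.301'
```

This needs to come before the build and test steps, since later steps pick up whichever SDK this one installs.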

The most time-consuming thing for me was running tests. The application uses an Azure SQL database. It is unwise to put the database password in appsettings.json in the GitHub repository. How then do you connect to the database? The docs anticipate this and you can use a feature called variable substitution. In the Pipeline editor, you can add variables and mark them secret, so they are not included in logs. You can also use variables from Azure Key Vault. Then you can use the FileTransform@2 task to replace the connection string in appsettings.json with the one you need including the password. I do not think this is ideal from a security perspective – you are still putting the password in plain text in a configuration file – but it beats having it in the GitHub repository.

I had many issues. The main documentation on variable substitution is here. This is terrible. Note that if you look at the YAML example for JSON file substitution (which is what we need) it does not even use FileTransform@2. It uses AzureRmWebAppDeployment@4 which does a whole lot of other stuff as described here. Maybe I should have tried that. But FileTransform@2 looked like the right thing. Unfortunately it generally gives the error “Cannot perform XML transformations on a non-Windows platform.” No, I am not trying to do an XML transformation. Even if you specify the fileType as json and set enableXmlTransform to false, you still get the error. Later research suggests you can beat this error by setting xmlTransformationRules to an empty string. I gave up though and used FileTransform@1 (an older version of the task) which works as expected.

I still did not get the result I wanted though. All the tests using the database failed. Eventually I figured out that I had to set the folderPath to $(Build.SourcesDirectory). Then it works.
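A minimal sketch of the FileTransform@1 step described here follows. The variable name ConnectionStrings.DefaultConnection and the targetFiles pattern are assumptions for illustration; the variable name has to match the JSON path of the value in your own appsettings.json.

```yaml
steps:
# Substitute pipeline variables into JSON configuration before the tests run.
# Assumes a pipeline variable named ConnectionStrings.DefaultConnection
# (hypothetical name), defined in the Variables panel and marked secret.
# It replaces the value at { "ConnectionStrings": { "DefaultConnection": ... } }.
- task: FileTransform@1
  displayName: 'Substitute connection string'
  inputs:
    folderPath: '$(Build.SourcesDirectory)'
    fileType: 'json'
    targetFiles: '**/appsettings.json'  # assumed location, adjust to your project
```

The dotted variable name is how the task addresses a nested JSON key, so no placeholder tokens are needed in the file itself.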

This was good. Now my tests run in Linux rather than on Windows, matching the deployed environment. In a full production environment I would use a second Azure SQL database for the tests, but for development this will do.
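Running on Linux comes down to the pool setting at the top of the YAML. Roughly, and assuming a master branch and test projects whose names end in Tests (both assumptions, not taken from my actual file):

```yaml
# Run the pipeline on every commit to this branch (branch name is an assumption)
trigger:
- master

# Use a Microsoft-hosted Linux agent so tests run on the same OS as the App Service
pool:
  vmImage: 'ubuntu-latest'

steps:
- task: DotNetCoreCLI@2
  displayName: 'Run tests'
  inputs:
    command: 'test'
    projects: '**/*Tests.csproj'  # hypothetical naming pattern
    arguments: '--configuration Release'
```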

I then created a staging slot in the App Service and added a deployment step to deploy the application to that slot. Again, this is good. The application will not deploy unless it passes all the tests (this is a built-in feature of Pipelines, as each step does not run unless the previous steps succeeded). It deploys to staging which has a separate URL so you can try it out and not swap it to production until you are ready.
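A deployment step along these lines would do it. This is a sketch rather than my exact file: the service connection, app name and resource group are placeholders, and it assumes the AzureWebApp@1 task with a slot named staging.

```yaml
steps:
# Publish the web project to a zip file in the artifact staging directory
- task: DotNetCoreCLI@2
  displayName: 'Publish'
  inputs:
    command: 'publish'
    publishWebProjects: true
    arguments: '--configuration Release --output $(Build.ArtifactStagingDirectory)'
    zipAfterPublish: true

# Deploy the zip to the staging slot of a Linux App Service.
# All names below are placeholders, not real resources.
- task: AzureWebApp@1
  displayName: 'Deploy to staging slot'
  inputs:
    azureSubscription: 'my-service-connection'
    appType: 'webAppLinux'
    appName: 'my-web-app'
    deployToSlotOrASE: true
    resourceGroupName: 'my-resource-group'
    slotName: 'staging'
    package: '$(Build.ArtifactStagingDirectory)/**/*.zip'
```

Because these steps come after the test step, a failing test stops the run before anything is deployed.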

Overall, it is a better solution than the Visual Studio web deploy which it replaces, so perhaps the error did me a favour. It will work with Visual Studio as well as with VS Code, since it triggers automatically on every code commit. The Publish option in Visual Studio becomes redundant.

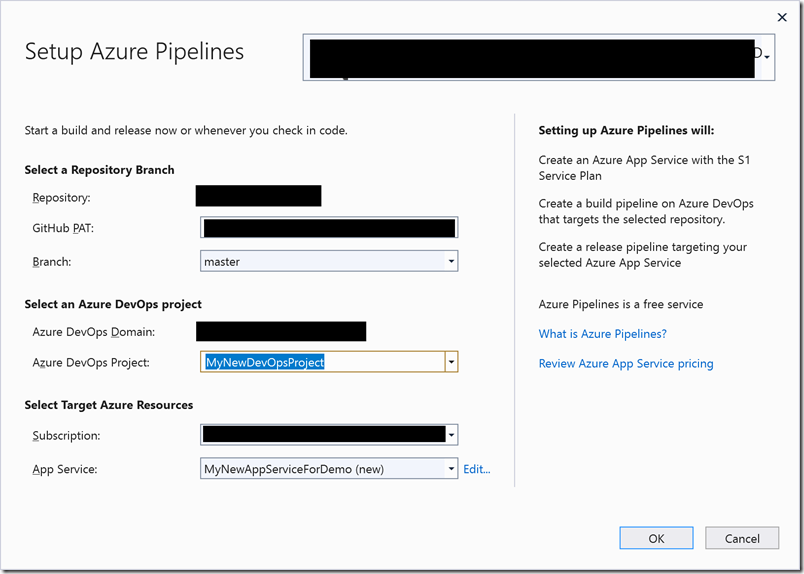

Note that Visual Studio also has an option to set this up automatically.

I tried it, letting the wizard do what it wanted including creating a new Azure DevOps project and a new App Service plan. Notable things:

– It created a pipeline using the Classic UI rather than the YAML based editor

– It uses an agent (the VM where the pipeline runs) called vs2017-win2016

– The pipeline did not get very far, failing on NuGet restore

No, I am not going to bother troubleshooting this.

This time yesterday I hated Azure DevOps pipelines. Nothing worked first time, YAML is a hostile editing environment (whitespace matters), and the documentation frustrated me. Now I feel pleased and I have this nice badge in my repository.

I am left with a nagging feeling though that all this is more difficult than it should be. It seems to me that what I wanted to do was commonplace: use .NET Core, use Azure App Service, have my pipeline build the project, run tests and deploy to staging. In many cases you would also want to apply Entity Framework migrations. I did not find this documented in any one place, and as a result it took more time to figure out than it should have.