I have just installed an entire Windows server setup on a single cheap box. It goes like this. Take one budget server stuffed with 8GB RAM and two network cards. Install Server 2008 with the Hyper-V, Active Directory Domain Services, DNS and DHCP roles. Install Server 2003 on a 1GB Hyper-V VM for ISA 2006. Install Server 2008 on a 4GB VM for Exchange 2007. Presto: it’s another take on Small Business Server. You don’t get all the wizards, but you do get the flexibility of multiple servers, and you still have ISA (which is missing from SBS 2008).

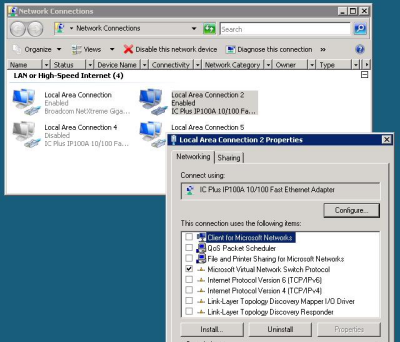

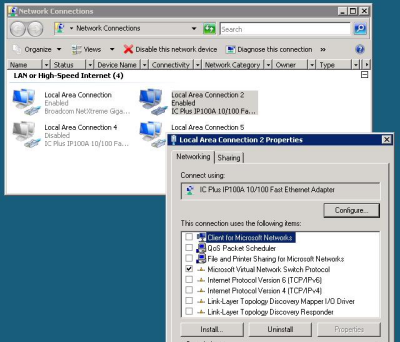

Can ISA really secure the network from inside a VM, including the machine on which it is hosted? A separate physical box would be better practice. On the other hand, Hyper-V has a neat approach to network cards. When you install Hyper-V, all bindings are removed from the “real” network card, and even the host system uses a virtual network card. Hence your two NICs become four.

As you may be able to see if you squint at the image, I’ve disabled Local Area Connection 4, which is the virtual NIC for the host PC. Local Area Connection 2 represents the real NIC and is bound only to “Microsoft Virtual Network Switch Protocol”.

This lets the ISA VM use the real NIC as its external interface, while the host itself, with its virtual NIC disabled, has no presence on that network. It strikes me as a reasonable arrangement, surely no worse than SBS 2003, which runs ISA and all your other applications on a single instance of the OS.

Hyper-V lets you set start-up and shut-down actions for the servers it is hosting. I’ve set the ISA box to start up first, with the Exchange box following after a delay. I’ve also set Hyper-V to shut down the servers cleanly (through integration services installed into the hosted operating systems) rather than saving their state; I may be wrong, but this seems more robust to me.
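To make the staggered start concrete, here is a minimal sketch that models the idea in plain Python. It is not a Hyper-V API: the `VmStartAction` class, the `startup_order` helper and the 120-second delay for Exchange are hypothetical, chosen only to illustrate how a per-VM startup delay (set in Hyper-V Manager under the VM’s automatic start action) determines which guest begins booting first.

```python
from dataclasses import dataclass


@dataclass
class VmStartAction:
    """Hypothetical model of a VM's automatic start settings (not a Hyper-V API)."""
    name: str
    auto_start: bool
    delay_seconds: int  # startup delay after the host boots (illustrative value)


def startup_order(vms):
    """Return the names of auto-starting VMs in the order they begin booting."""
    starting = [vm for vm in vms if vm.auto_start]
    return [vm.name for vm in sorted(starting, key=lambda vm: vm.delay_seconds)]


# Assumed values: ISA starts immediately, Exchange waits two minutes
# so the firewall is up before Exchange tries to reach the network.
vms = [
    VmStartAction("Exchange", True, 120),
    VmStartAction("ISA", True, 0),
]

print(startup_order(vms))  # ['ISA', 'Exchange']
```

The design point is simply that ordering falls out of the delay values, so giving the firewall VM the shortest delay is enough to bring it up first.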

Even with everything running, the system is snoozing. I’m not sure that Exchange needs as much as 4GB on a small network; I could try cutting it down and making space for a virtual SharePoint box. Alternatively, I’m tempted to create a 1GB server to act as a secondary domain controller. The rationale is that disaster recovery from a VM may well be easier than from a native machine backup, because a VM sees the same virtual hardware on whichever Hyper-V host it runs. The big dirty secret of backup and restore is that it only reliably works on identical hardware, which may not be available.
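The memory arithmetic behind these options is simple but worth spelling out. The sketch below uses the allocations from this post (8GB total, 1GB for ISA, 4GB for Exchange) and treats the 1GB secondary domain controller as the hypothetical addition; the `remaining_for_host` helper is illustrative, and the figures ignore any per-VM overhead Hyper-V itself reserves.

```python
HOST_RAM_GB = 8  # physical RAM in the budget server

# Guest allocations described in this post.
guests_gb = {
    "ISA 2006 (Server 2003)": 1,
    "Exchange 2007 (Server 2008)": 4,
}


def remaining_for_host(total_gb: int, allocations: dict) -> int:
    """RAM left for the parent partition (AD, DNS, DHCP) after guest allocations.

    Ignores Hyper-V's own per-VM overhead, so the real figure is a little lower.
    """
    return total_gb - sum(allocations.values())


print("Host keeps:", remaining_for_host(HOST_RAM_GB, guests_gb), "GB")  # Host keeps: 3 GB

# Hypothetical: add a 1GB secondary domain controller.
with_dc = {**guests_gb, "Secondary DC (hypothetical)": 1}
print("Host keeps with extra DC:", remaining_for_host(HOST_RAM_GB, with_dc), "GB")  # Host keeps with extra DC: 2 GB
```

Either way the host is left with a couple of gigabytes for its own roles, which is why a small extra VM looks feasible without touching the Exchange allocation.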

This arrangement has several advantages over an all-in-one Small Business Server. There’s backup and restore, as above. Troubleshooting is easier, because each major application is isolated and can be worked on separately. There’s no danger of notorious memory hogs like store.exe (part of Exchange) grabbing more than their fair share of RAM, because each is safely partitioned in its own VM. After all, Microsoft designed applications like Exchange, ISA and SharePoint to run on dedicated servers. If the business grows and you need to scale, just move a VM to another machine where it can enjoy more RAM and CPU.

I ran a backup from the host by enabling VSS backup for Hyper-V (which, for some reason, requires a manual registry edit), attaching an external hard drive, and running Windows Server Backup. The big questions: would it restore successfully to the same hardware? To different hardware? Good questions; but I like the fact that you can mount the backup and copy individual files, including the virtual hard drives of your VMs. Of course, you can also do backups from within the guest operating systems. There’s also a snag with Exchange: a backup like this is not Exchange-aware and won’t truncate its logs, which will grow indefinitely. There are fixes, and Microsoft is said to be working on making Windows Server Backup in Server 2008 Exchange-aware.

Would a system like this be suitable for production, as opposed to a test and development setup like mine? There are a couple of snags. One is licensing. I’ve not worked out the cost, but it is going to add up to a lot more than buying SBS. Another is support: SBS is fully supported as a complete system aimed at small businesses. Dealing with separate virtual servers is also more demanding than running SBS wizards for setup, though I’d argue it is actually easier for troubleshooting.

Still, this post is really about Hyper-V. I’ve found it great to work with. I had a few hassles, particularly with Server 2003: I had to rely on my Windows keyboard shortcuts until I could get SP2 and Hyper-V Integration Services installed. Once they are installed, though, I log on to the VM using Remote Desktop and it behaves just like a dedicated box. The performance overhead of using a VM seems small enough not to be an issue.

I’ve found it an interesting experiment. Maybe some future SBS might be delivered like this.

Update: I tried reducing the RAM for the Exchange VM and it markedly reduced performance. 4GB seems to be the sweet spot.