Warning: this post is about old Windows hassles; I’ve written it partly because some of us still need to run old versions of Windows and apps, and partly because it reminds me that Windows has in fact improved so that this sort of thing is less common, though there is still immense complexity under its surface which can leak out to cause you grief – especially for people like reviewers and developers who install lots of stuff.

I’ve been retreating to Windows XP recently, in order to tweak an old Visual Basic 6 application. VB6 can be persuaded to run on later versions of Windows, but it is not really happy there. I have an old XP installation that I migrated from a physical machine to a VM on Hyper-V.

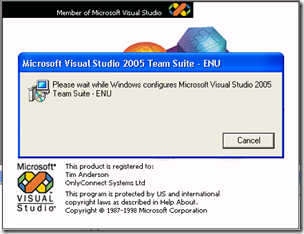

I was annoyed to find that when I fired up VB 6, the Windows Installer would pop up – not for VB 6, but for Visual Studio 2005, which was also installed.

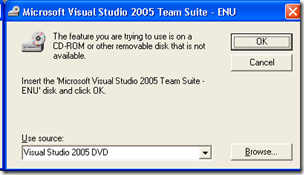

Worse still, after thrashing away for a bit it decided that it needed the original DVD:

I actually found the DVD and stuck it in. The installer ground away for ages with its deceptive progress bars – “20 seconds remaining” sitting there for 10 minutes – repeated what looked like a loop several times, then finally let me in to VB. All was well for the rest of that session; but after restarting the machine, if I started VB 6 the very same thing would happen again.

This annoyance is not confined to VB 6; it used to happen a lot in XP days, though in my experience it is much less common with Vista and Windows 7.

I investigated further. This article explains what happens:

What you see is the auto-repair feature of Windows Installer. When an application is launched, Windows Installer performs a health check in order to restore files or registry entries that may have been deleted. Such a health check is not only triggered by clicking a shortcut but also by other events, such as activation of a COM server. The events triggering a health check depend on the operating system.

When you see this auto-repair problem this means that Windows Installer came to the conclusion that some application is broken and needs to be repaired.

A good concept, but in practice one that often fails and causes frustration. The worst part of it is the lack of information. Look at the dialog above, which refers to “the feature you are trying to use”. But which feature? In my case, how can my VB 6 depend on a feature of Visual Studio 2005, which came later and does not include VB 6? In any case, it is a lie, since VB 6 works fine even after the installer fails to fix its missing feature.

Fortunately, the article explains how to troubleshoot. You go to the event viewer, application tab, and MsiInstaller entries will tell you which product and component raised the repair attempt. Unfortunately the component is identified by a GUID. What is it?

To find out, you can try Google, or you can use a utility that queries the Windows Installer database. The best I’ve found is a tool called msiinv; the script mentioned in the post above did not work. You can find msiinv described by Aaron Stebner here, with a download link. Note how Stebner had to change the download locations because they kept breaking; a constant frustration with troubleshooting Windows, as Microsoft regularly moves or removes articles and downloads even when they are still useful.

Running msiinv with its verbose option (which you will need) seems to pretty much dump the entire msi installer database to a text file. You can then search for these GUIDs and find out what they are. You may find even products listed that are not in Control Panel’s Add/Remove programs. You can remove these from the command line like this:

msiexec /x {GUID}

where GUID identifies the product to remove.

In my case I found beta versions of WinFX (which became .NET 3.0). I said this was old stuff! I removed them, restarted Windows, and VB6 started cleanly.

That still does not explain how they got hooked to VB6; the answer is probably somewhere in the msiinv output, but having fixed the issue I’m not inclined to spend more time on it.