Yes, mobile is the future of client applications, cross-platform is cool, web applications are amazing; but out there in the real world, there are still a ton of people who work all day with a Windows PC, and businesses that want PC applications in order to get their work done.

So when a business comes to you and says, we want a new Windows application to do this or that, and presuming they do not care about mobile or Macs or access over the internet but just want something that runs on their internal network, what framework do you choose?

Let us even assume that they all run Windows 10 so that UWP (Universal Windows Platform) is a realistic option.

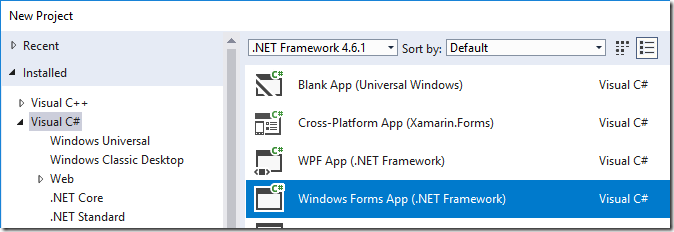

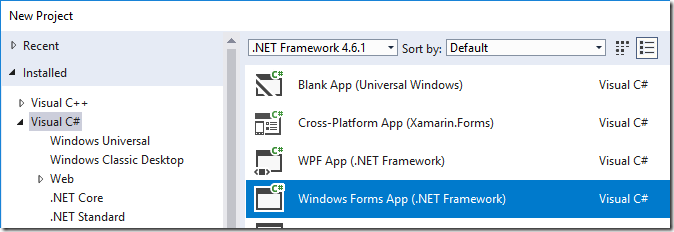

If you want to code in .NET (which is a great choice for a Windows-only application, and with the possibility of migrating code to cross-platform via Xamarin’s compiler later), then you have three obvious choices:

Windows Forms

This is the framework for Windows desktop applications that was introduced at the same time as .NET itself, back in 2002. Of course it has been revised many times since. There was a big update in 2006 with .NET 2.0. That said, Microsoft intended it to be replaced by Windows Presentation Foundation (WPF, see below), so it has not been a focus of attention. In 2014, High DPI support was improved, with .NET 4.5.2, reflecting the fact that this ancient framework is still widely used.

Windows Forms is a nice wrapper around the Windows API, and easy to use in that it uses essentially X Y layout. In other words, you can think of your form as a grid of pixels with the position of your controls determined at design time by its size and coordinates. This is great if you are designing and running on the same PC, but not so good when you deploy to other PCs with different display settings. It does kind-of scale if you follow certain rules, but successful scaling in a Windows Forms application is often difficult to achieve, so users may suffer chopped-off controls and text, or just ugly screens. Read this carefully if you use Windows Forms. And then read about High DPI support, which was improved again in .NET Framework 4.7.

If you are writing a database application, you can generate datasets by drag and drop from the Server Explorer in Visual Studio and bind them to controls. I am not a fan of this database framework, which quickly gets convoluted, but you do not have to use it. However the ability to bind list and grid controls to any kind of .NET collection is fantastically useful.

Why is Windows Forms still in use? It is partly legacy and the fact that it is easier to maintain and enhance an existing application than to start again. It is also because, scaling issues aside, Windows Forms is reliable, well supported by both built-in and third-party controls, and easy to learn.

Windows Presentation Foundation

This was Microsoft’s second go at a GUI framework for .NET and in many respects a great improvement. It was introduced with .NET Framework 3.0 in 2006, part of the Vista wave of technology. Unlike Windows Forms, it is based on the DirectX graphics API, so great for multimedia and special effects. Scaling is built-in and based on layout managers. The underlying presentation language is based on XAML, an XML language. As with Windows Forms, there is deep support for binding data to controls.

Why would you not always use WPF rather than Windows Forms? The main issue is that the time you save on figuring out scaling is more than consumed by the time you spend on design. WPF is a designer-centric framework. It will repay your efforts, but if you just want to slap a couple of grids and a few buttons on a form to get a working business application, Windows Forms remains tempting.

Universal Windows Platform

Both Windows Forms and WPF are old, and Microsoft is pointing developers towards its Universal Windows Platform (UWP) instead. UWP is an evolution of the new application platform introduced in Windows 8 in 2012. If WPF was all about scaling and multimedia, the Windows 8 modern app platform is about touch support and Store-based deployment. The application model was also service based, the idea being that your app consumes services published over the internet. Until the Windows 10 Fall Creators Update, you could not use the .NET SQLClient to connect directly to a SQL Server database (you can now). The app platform became UWP with the launch of Windows 10 in 2015. UWP can use XAML for layout design, but it is not compatible with WPF.

Personally I have mixed feelings about UWP. Unfortunately it has suffered from Microsoft’s ever-changing development strategy. The Windows 8 app platform made sense to me as a way of bringing Windows into the tablet era and enabling applications that were more secure and more easily deployed, even if it tended to result in applications that were blocky and ugly. Microsoft then changed its mind about full-screen touch applications and came up with the UWP for Windows 10, where applications again run in a window, but with a new selling point: you could run your application on Windows Phone as well as desktop. Then the company canned Windows Phone, before UWP had properly launched, in effect deleting the “Universal” part of the platform.

UWP still offers Store delivery and isolation from other applications, better for security and stability. However there are a few things against it. First, users require Windows 10. Second, like WPF it is a designer-centric platform and not so good for running up quick business applications. Third, UWP apps behave differently from standard desktop applications, sometimes not in a good way.

I was using Microsoft’s bundled Photos application recently. I work a lot with images so this often pops up, as the default image viewer on Windows 10. I was not stressing it, but it crashed which, as is typical for a UWP app, means it just disappeared without any message or warning.

UWP will be three years old this summer, but I am not convinced that the platform is quite there yet. I find it hard to think of UWP apps that I love. The apps I know best are the built-in ones, Mail, Photos, Groove Music, Calculator, and I do not love any of them. Paint 3D is amazing but not my thing.

At the same time I do see the merits of UWP versus traditional Windows application deployment. The existence of the Desktop Bridge (formerly Project Centennial) means you can get many of those benefits while still using WPF or Windows Forms.

Closing thoughts

Perhaps something like Power Apps will render this discussion irrelevant before long. There are also other options for the desktop, such as Xamarin Forms if you still want to use .NET, or Electron for using web technologies for desktop applications.

Still, while it may seem surprising, even in 2018 I can think of reasons why you might use any of the above frameworks, even Windows Forms, for a business app targeting Windows.