I have been writing a Facebook application hosted on Microsoft Azure. I hit a problem where my application worked fine on the local development fabric, but failed when deployed to Azure. The application was not actually crashing; it just did not work as expected. Specifically, either the Facebook authentication or the ASP.NET Forms Authentication was failing; when I tried to log on, the log on failed.

This scenario, where the app works locally but not on Azure, is potentially a bad one because you do not have the luxury of breakpoints and variable inspection. There are several approaches. You can have the application write a log, which you could download or view by using Remote Desktop to the Azure instance. You can have the application output debug messages to HTML. Or you can use IntelliTrace.

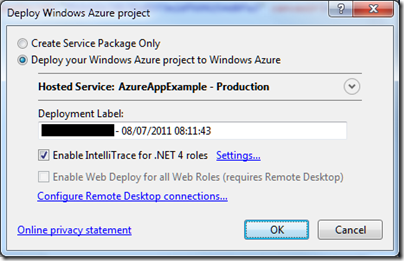

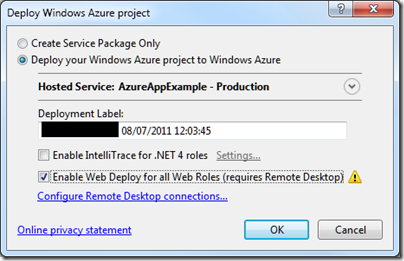

I tried IntelliTrace. It is easy to set up, just check the box when deploying.

Once deployed, I tried the application. Clicked the Log On button, after which the screen flashed but still asked me to Log On. The log on had failed.

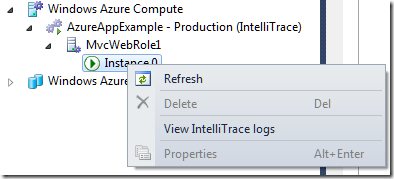

I closed the app, opened Server Explorer in Visual Studio, drilled down into the Windows Azure Compute node and selected View IntelliTrace Logs.

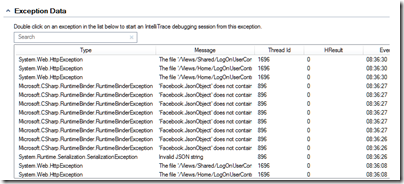

The logs took a few minutes to download. Then you can view is the IntelliTrace log summary, which includes a list of exceptions. You can double-click an exception to start an IntelliTrace debug session.

Useful, but I still could not figure out what was wrong. I also found that IntelliTrace did not show the values for local variables in its debug sessions, though it does show exceptions in detail.

Now, if you really want to debug and trace an Azure application you had better read this MSDN article which explains how to create custom debugging and trace agents and write logs to Azure storage. That seems like a lot of work, so I resorted to the old technique of writing messages to HTML.

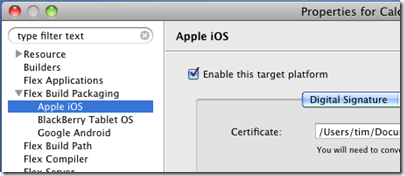

At this point I should mention something you must do in order to debug on Azure and remain sane. This is to enable WebDeploy:

It is not that hard to set up, though you do need to enable Remote Desktop which means a trip to the Azure management portal. In my case I am behind a firewall so I needed to configure Web Deploy to use the standard SSL port. All is explained here.

Why use Web Deploy? Well, normally when you deploy to Azure the service actually builds, copies and spins up a new virtual machine image for your app. That process is fundamental to Azure’s design and means there are always at least two copies of the VM in existence. It is also slow, so if you are making changes to an app, deploying, and then testing, you will spend most of your time waiting for Azure.

Web Deploy, by contrast, writes to your existing instance, so it is many times quicker. Note that once you have your app working, it is essential to deploy it properly, since Azure might revert your app to the last VM you created.

With Web Deploy enabled I got back to work. I discovered that FormsAuthentication.SetAuthCookie was not working. The odd thing being, it worked locally, and it had worked in a previous version deployed to Azure.

Then I began to figure it out. My app runs in a Facebook canvas. Since the app is served from a different site than Facebook, cookies may be rejected. When I ran the app locally, the app was in a different IE security zone, so different rules applied.

But why had it worked before? I realised that when it worked before I had used Google Chrome. That was it. IE worked locally; but only Chrome worked when deployed.

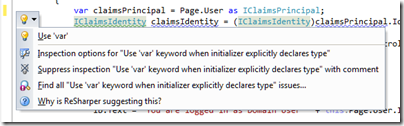

I have given up trying to fix the specific problem for the moment. I have dug into it a little, and discovered that cookie handling in a Facebook canvas with IE is a long-standing problem, and that the Facebook C# SDK may have bugs in this area. It is not essential for my sample; I have found I can get by with the Facebook session. To get the user ID, for example:

FacebookWebContext.Current.Session.UserId

The time has not been wasted though as I have learned a bit about Azure debugging. I was also amused to discover that my Azure VM has activation problems: