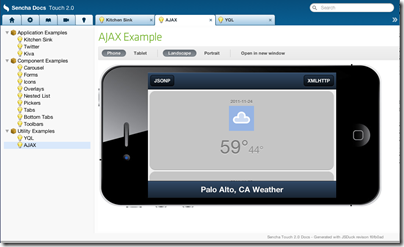

I spoke to Michael Mullany, CEO of Sencha, a company which creates HTML5 frameworks and tools for desktop and mobile browsers. Ext JS is aimed at desktop browser applications, while Sencha Touch is for mobile devices, currently Apple iOS, Google Android and Blackberry 6+. Sencha’s tools include Ext Designer, a visual application builder for Ext JS, and Sencha Animator, a designer for CSS 3 animations. Sencha Touch apps can also be packaged as native apps for iOS or Android.

At its developer conference in Austin USA earlier this month, attended by around 600, the company announced Sencha.io, a cloud service for mobile web apps, as well as presenting Sencha Touch 2.0, a major update.

Mullany talks on his blog about “The Big App Rewrite”:

It’s a world where HTML5 powers the client apps, and they’re enriched with local APIs that execute on everything from traditional desktops to Smart TV’s. And cloud services provide the fabric that enables continuous, shared experiences across the diversity of end-devices. We think this is the platform for the web.

Sencha is perfectly in tune with the trends towards cloud, HTML5 and mobile, which is why I was keen to speak to Mullany. I asked him to contrast Ext JS and Sencha Touch with JQuery and JQuery Mobile.

JQuery is a pretty tiny library that helps with Dom abstraction and animations but that’s it. JQuery UI gives you some visual components as well but Ext-JS is the full enchilada. It’s supposed to be the web equivalent of Cocoa or the Microsoft Windows presentation foundation. It’s got an event system, a theming system, a very rich set of user interface controls, its object oriented, and it’s got a complex layout system so you can build nested layouts that have very complex event handling among different parts of the user interface. We’ve seen user interfaces that have several thousand data elements on a page.

It also has a model-view-controller architecture library on the client side so you can structure your code properly for large applications, it’s got a theming system so you can variable-ise your colours, shapes and look and feel very easily. It also has a full data package so you can do very rich data manipulation on the client, bind data in various complex ways across variables, it’s very different than J query.

And just like you’d probably never use Ext-JS on a public web page, you’d never use JQuery to build something like Marketo or Salesforce VisualForce or a Documentum content management system, all of which use Ext-JS. Ext-JS is one of the most popular behind the firewall development libraries for desktop development.

Now on the mobile side the difference is slightly less. JQuery mobile does give you a set of user interface widgets, but the difference is also similar to the desktop … Sencha Touch is designed to let you do anything you could do with Cocoa Touch or an Android SDK or a Windows Mobile SDK. Its intent is to equip you to develop native quality experiences with native style interaction, things like fixed user interface chrome, multiple independent scrollable areas, nested layouts, those kinds of capabilities.

Our performance tends to be better cross-platform, we’ve done more performance work, we have our theming system, we have an MVC library, we have a templating system. With JQuery mobile you tend to want to add multiple things together and you can certainly assemble a collection of things that will look like Sencha Touch, but Sencha Touch is designed to be integrated, everything is designed to work the same, and the general feedback is that even though Sencha Touch is a much richer system that takes some insight to learn, you get better applications out of it.

I also asked about the new cloud service, Sencha.io. A notable feature is that according to Mullany developers do not have to touch the code that runs on the cloud, they just call its API from the client:

We call it the first client-centric HTML5 cloud, which is a set of authentication, data, data synchronisation, and geo-location services that help people build mobile applications without needing to write server side code. So you literally write your client side application in HTML 5 using Sencha SDKs and then you store your user’s data and you store your user’s authentication credentials in our cloud. You don’t have to worry about mucking around with anything from Ruby on Rails to PHP to Java, it’s all abstracted behind these very clean APIs. We think that’s the future of mobile development, that you’ll have these very thin abstracted server-side services, and and these very rich mobile clients that have off-line state and local data storage powered by HTML5. We think that model is the future of mobile web development and we obviously hope that Sencha.io will be the most popular back-end.

Sencha’s frameworks are open source and dual-licenced. You can use both Touch and Ext JS freely under the terms of the GPL v3. There is also free commercial licencing for Sencha Touch, while commercial licences for Ext JS are paid for. Sencha also has commercial tools, and I asked Mullany to describe the tool products:

We really see the three legs of the business being cloud, tools, and SDKs. We just did a preview of the Sencha Designer 2.0 release at our conference. That has support for Sencha Touch in it so you can drag and drop Sencha touch applications together and then actually package them from within the tool. The intent is also to allow you to hook up to cloud APIs from within the tool as well so it is an integrated, easy-to-use visual application builder for both desktop and touch. So that’s targeted at developers.

Sencha Animator is a little bit different. There’s no JavaScript in it really at all. It is a pure CSS 3 animation tool, and it is a traditional visual timeline with keyframe manipulation, and a style visual editor for creating rich animations.

The market we’re targeting for that is people doing interactive brand advertising on mobile. That’s where you have ubiquitous support for CSS 3 animations that are hardware accelerated so they tend to be the best performance. It’s also very web content friendly so you don’t have to write your application in Sencha Touch just to use Animator, it’s pure CSS output that you drop into whatever piece of content that you want to build.

The reason we built it is because we saw people flailing around for an alternative to doing Flash ads on mobile. Because Flash was banned from iOS, it meant that a whole segment of rich advertising that was based on Flash for the desktop had nowhere to go. They weren’t going to build native iOS applications, it had to be web. So the question then was what do you build it in, do you use JavaScript animation, do you use SVG, do you use Canvas, do you use some of the other graphic technologies such as Web GL? The answer is that CSS 3 is really the highest performance and cognitively pretty easy to wrap your head around.

People “flailing around for an alternative to doing Flash ads?” Mullany has his own agenda, but his comments do highlight the problems caused for Adobe by the success of Flash-free iPhone and iPad. I cannot help thinking that Sencha would be an attractive acquisition for Adobe or certain other companies, but I am sure smarter people than myself have thought of that.

Post sponsored by Monster for the best in IT jobs.