Cross-platform development is a big deal, and will continue to be so until a day comes when everyone uses the same platform. Android? HTML? WebKit? iOS? Windows? Maybe one day, but for now the world is multi-platform, and unless you can afford to ignore all platforms but one, or to develop independent projects for each platform, some kind of cross-platform approach makes sense, especially in mobile.

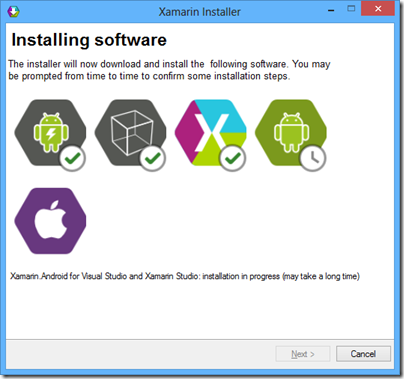

Sometimes I hear it said that there are essentially two approaches to cross-platform mobile apps. You can either use an embedded browser control and write a web app wrapped as a native app, as in Adobe PhoneGap/Cordova or the similar approach taken by Sencha, or you can use a cross-platform tool that creates native apps, such as Xamarin Studio, Appcelerator Titanium, or Embarcardero FireMonkey.

Within the second category though, there is diversity. In particular, they vary concerning the extent to which they abstract the user interface.

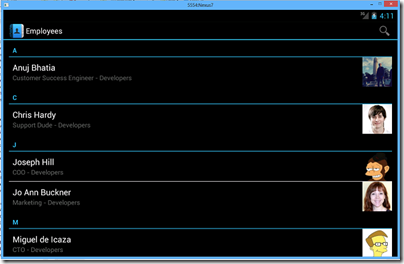

Here is the trade-off. If you design your cross-platform framework to include user interface widgets, like labels, buttons, grids and menus, then you can have your application work almost the same way on every platform. You can also have tools that build the user interface once for all the platforms. This is a big win in terms of coding effort. If the framework is well implemented, it will still adopt some of the characteristics native to each platform so that it looks more or less native.

Some tools do this by drawing their own controls. Embarcadero FireMonkey is in this category. Another approach is to use native controls where possible (in other words, to call the API that shows a button, rather than drawing the button with the graphics API), but to use custom drawing where necessary, even sometimes implementing a control from one platform on another. The downside is that because those controls are not in fact native, there will be some differences, perhaps obvious, perhaps subtle. Martin Fowler at ThoughtWorks refers to this as the uncanny valley and argues against emulated controls.

Further, if you are sharing the UI design across all platforms, it is hard to make your design feel equally right in all cases. It might be better to take the approach adopted by most games, using a design that is distinctive to your app and make a virtue of its consistency across platforms, even though it does not have the native look and feel on any platform.

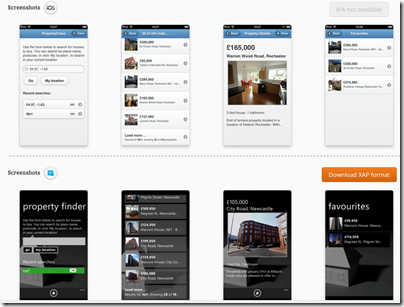

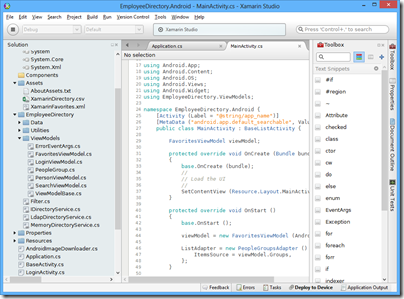

Xamarin Studio on the other hand makes no attempt to provide a shared GUI framework:

We don’t try to provide a user interface abstraction layer that works across all the platforms. We think that’s a bad approach that leads to lowest common denominator user interfaces.*

CEO Nat Friedman told me. He is right; but the downside is the effort involved in maintaining two or more user interface designs for your app.

This is an old debate. One of the reasons IBM created Eclipse was a disagreement with Sun over the best way to design a cross-platform user interface framework. Sun’s Swing framework, derived from Netscape’s Internet Foundation Classes first released in 1996, takes the custom-drawn approach, which is why Swing apps always look like Swing apps (even if you apply the “Windows” look and feel). A team from IBM, some originally from Object Technology International which was a company acquired by IBM, believed it was better to wrap native controls with a Java abstraction layer, created SWT (Standard Widget Toolkit) to do that, and used it to build Eclipse.

Personally I am wary of toolkits which rely heavily on custom-drawn controls rather than native controls, though I see their value. On the other hand, Xamarin Studio is so far in the other direction that it removes some of the benefit of a cross-platform framework.

My prediction is that Xamarin will come up with its own GUI abstraction framework in future, along the lines of SWT. It is a compromise; but one which delivers a lot of value to developers who want to create cross-platform apps with the maximum amount of shared code.

*I have never understood this use of the term “lowest common demominator”. The LCD in maths is the lowest number into which a specific group of numbers divide exactly, so it is an elegant thing. In cross-platform what you should strive for is the highest common intersection: to make available all the features common to each platform.

Update: in April 2014 Xamarin announced Xamarin Forms, a GUI framework which wraps native controls in a XAML implementation (XAML is the presentation language also used by Microsoft, for WPF, Silverlight, Windows Phone and Windows Runtime (Windows 8) apps. There is a quick hands-on here.