Earlier this month I attended a three-day press briefing on what is coming in the R2 wave of Microsoft’s server products: Windows Server, System Center and SQL Server.

There is a ton of new stuff, too much for a blog post, but here are the things that made the biggest impression.

First, I am beginning to get what Microsoft means by “Cloud OS”. I am not sure that this is a useful term, as it is fairly confusing, but it is worth teasing out as it gives a sense of Microsoft’s strategy. Here’s what lead architect Jeffrey Snover told me:

I think of it as a central organising thought. That’s our design centre, that’s our north star. It’s not necessarily a product, it goes across some things … for example, I would absolutely include SQL [Server] in all of its manifestations in our vision of a cloud OS. Cloud OS has two missions. Abstracting resources for consumption by multiple consumers, and then providing services to applications. Modern applications are all consuming SQL … we’re evolving SQL to the more scale-out, elastic, on-demand attributes that we think of as cloud OS attributes.

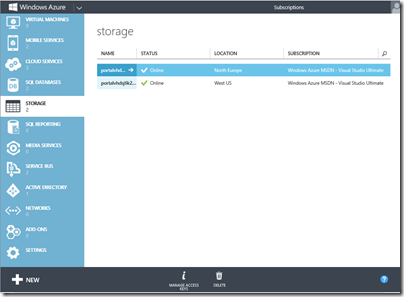

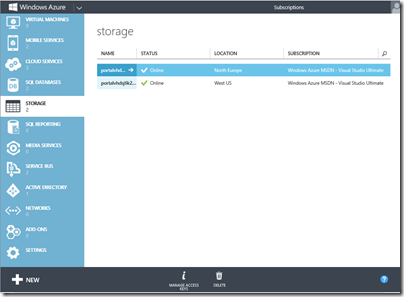

If you want to know what Cloud OS looks like, it is something like this:

Yes, it’s the Azure portal, and one of today’s big announcements is that this is the future of System Center, Microsoft’s on-premises cloud management system, as well as Azure, the public cloud. Azure technology is coming to System Center 2012 R2 via an add-on called the Azure Pack, which brings self-service VMs, web sites, SQL databases, service bus messaging, virtual networks, online storage and more.

Snover also talked about another aspect of Cloud OS, which is also significant. He says that Microsoft sees cloud as an “operating system problem.” This is the key to how Microsoft thinks it can survive and prosper versus VMware, Amazon and so on. It has a hold of the whole stack, from the tiniest detail of the operating system (memory management, file system, low-level networking and so on) to the highest level, big Azure datacenters.

The company is also unusual in its commitment to private, public and hybrid cloud. The three-cloud story which Microsoft reiterated obsessively during the briefing is public cloud (Azure), private cloud (System Center) and hosted cloud (service providers). Ideally all three will look the same and work the same – differences of scale aside – though the Azure Pack is only the first stage towards convergence. Hyper-V is the common building block, and we were assured that Hyper-V in Azure is exactly the same as Hyper-V in Windows Server, from 2012 onwards.

I had not realised until this month that Snover is now lead architect for System Center as well as Windows Server. Without both roles, of course, he could scarcely architect “Cloud OS”.

Here are a few other things to note.

Hyper-V 2012 R2 has some great improvements:

- Generation 2 VMs (64-bit Server 2012 and Windows 8 and higher only) strip out legacy emulation and use UEFI boot from SCSI

- Hyper-V Replica supports replication intervals of 30 seconds, 5 minutes or 15 minutes

- Data compression can double the speed of live migration

- Live VM cloning lets you copy a running VM for troubleshooting offline

- Online VHDX resize – grow or shrink

- Linux guests now support live migration, live backup, dynamic memory and online VHDX resize

SQL Server 2014 includes in-memory optimization, code-named Hekaton, that can deliver stunning speed improvements. There is also compilation of stored procedures to native code, subject to some limitations. The snag with Hekaton? Your data has to fit in RAM.

Like Generation 2 VMs, Hekaton is the result of re-thinking a product in the light of technical advances. Old warhorses like SQL Server were designed when RAM was tiny, and everything had to be fetched from disk, modified and written back. Bringing that model into RAM as-is is a waste. Hekaton removes the overhead of the disk/RAM model almost completely, though it does have to write data back to disk when transactions complete. The data structures are entirely different.

PowerShell Desired State Configuration (DSC) is a declarative syntax for defining the state of a server, combined with a provider that knows how to read or apply it. It is work in progress, with limited providers currently, but immensely interesting, if Microsoft can both make it work and stay the course. The reason is that using PowerShell DSC you can automate everything about an application, including how it is deployed.
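To make the declarative idea concrete, here is a minimal sketch in Python rather than PowerShell. It is not DSC syntax and not Microsoft's implementation. The `FileProvider` class, the `apply_configuration` function and the file paths are all hypothetical names of my own, and an in-memory dictionary stands in for the server. The point is only the shape: you describe the state you want, and a provider checks the current state and changes only what differs.

```python
from dataclasses import dataclass, field


@dataclass
class FileProvider:
    """Hypothetical provider: an in-memory dict stands in for a server's files."""
    server: dict = field(default_factory=dict)

    def test(self, path, desired):
        # Read the current state and compare it with the desired state.
        return self.server.get(path) == desired

    def set(self, path, desired):
        # Apply the desired state.
        self.server[path] = desired


def apply_configuration(desired_state, provider):
    """Bring the server in line with desired_state; return the items changed."""
    changed = []
    for key, value in desired_state.items():
        if not provider.test(key, value):
            provider.set(key, value)
            changed.append(key)
    return changed


# The declaration: what the server should look like, not the steps to get there.
desired = {
    "C:/inetpub/site/index.html": "<h1>Hello</h1>",
    "C:/inetpub/site/web.config": "<configuration/>",
}

provider = FileProvider(server={"C:/inetpub/site/index.html": "<h1>Old</h1>"})
first_run = apply_configuration(desired, provider)   # fixes both items
second_run = apply_configuration(desired, provider)  # nothing left to do
```

Running the same declaration twice is safe, because the second pass finds nothing to change. That property is what makes a configuration like this something you can keep in version control and re-apply at will.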

Remember White Horse? This was a brave but abandoned attempt to model deployment in Visual Studio as part of application development. What if you could not only model it, but deploy it, using the cloud automation and self-service model to create the VMs and configure them as needed? As a side benefit, you could version control your deployment. Linux is way ahead of Windows here, with tools like Puppet and Chef, but the potential is now here. Note that Microsoft told me it has no plans to do this yet but “we like the idea” so watch this space.

Storage improvements. Both data deduplication and Storage Spaces are getting smarter. Deduplication can be used for running VHDs in a VDI deployment, with huge storage savings. Storage Spaces supports hybrid pools with SSDs alongside hard drives, with hot data automatically moved to the SSD tier and the ability to pin files there.
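Why does deduplication pay off so well for VDI? Because virtual desktops built from the same image share most of their blocks. The Python sketch below illustrates the principle only. It assumes fixed-size 4 KB chunks and SHA-256 hashes, which are my choices for simplicity and not a description of how Windows Server does it, and `dedup_store` and `restore` are hypothetical helper names.

```python
import hashlib

CHUNK_SIZE = 4096  # assumption: fixed-size chunks, chosen for simplicity


def dedup_store(images, chunk_size=CHUNK_SIZE):
    """Store each distinct chunk once; keep a per-image list of chunk hashes."""
    store = {}    # chunk hash -> chunk bytes
    recipes = {}  # image name -> ordered list of chunk hashes
    for name, data in images.items():
        hashes = []
        for offset in range(0, len(data), chunk_size):
            chunk = data[offset:offset + chunk_size]
            digest = hashlib.sha256(chunk).hexdigest()
            store.setdefault(digest, chunk)  # only the first copy is kept
            hashes.append(digest)
        recipes[name] = hashes
    return store, recipes


def restore(name, store, recipes):
    """Rebuild an image from its list of chunk hashes."""
    return b"".join(store[h] for h in recipes[name])


# A shared base image of 8 distinct chunks, plus 1 unique chunk per desktop.
base = b"".join(bytes([i]) * CHUNK_SIZE for i in range(8))
images = {
    f"desktop{n}.vhdx": base + bytes([100 + n]) * CHUNK_SIZE for n in range(3)
}

store, recipes = dedup_store(images)
raw_bytes = sum(len(data) for data in images.values())
stored_bytes = sum(len(chunk) for chunk in store.values())
print(f"raw: {raw_bytes} bytes, stored: {stored_bytes} bytes")
```

With three desktops, the 27 chunks of raw data shrink to 11 stored chunks, and the saving grows with every desktop added from the same base image.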

Server Essentials for small businesses is now a role in Windows Server as well as a separate edition. If you use the role, rather than the edition, you can use the Essentials tools for up to 100 or so users. Unfortunately that will also mean Windows Server CALs; but it is a step forward from the dead-end 25-user limit in the current product. Small Business Server with bundled Exchange is still missed though, and not coming back. More on this separately.

What do I think overall? Snover is a smart guy and if you buy into the three-cloud idea (and most businesses, for better or worse, are not ready for public cloud) then Microsoft’s strategy does make sense.

The downside is that there remains a lot of stuff to deal with if you want to implement Microsoft’s private cloud, and I am not sure whether System Center admins will all welcome the direction towards using Azure tools on-premises, having learned to deal with the existing model.

The server folk at Microsoft have something to brag about though: nine consecutive quarters of double-digit growth. It is quite a contrast with the declining PC market and the angst over Windows 8, leading to another question: long-term, can Microsoft succeed in server but fail in client? Or will (for better or worse) those two curves start moving in the same direction? Informed opinions, as ever, are welcome.