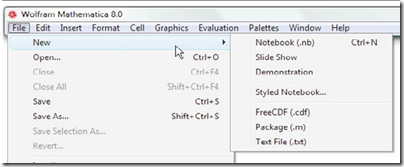

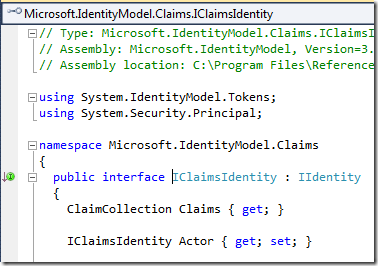

Wolfram has announced the Computable Document Format (CDF), a document format that enables live computation to be embedded within it. “It’s a new way to communicate the world’s quantitative ideas much more richly than we have in the past, and in doing that a new kind of active document,” says Conrad Wolfram, Strategic Director of Wolfram Research. That said, the technology here is not really new. There is a close relationship between CDF and Mathematica, Wollfram’s tool for creating mathematical calculations and simulations. The authoring tool for CDF is Mathematica:

The announcement then is really about a new player for Mathematica content and applications, to broaden their usage. The CDF player is free, though there are some limitations. If you charge for your document, or want to display it without the player chrome, then a paid licence is needed. A CDF document can also be compiled into a standalone executable, blurring the distinction between document and application.

The CDF player is available for Mac, Windows and Linux. There is also a browser plug-in for embedding CDF documents into web pages.

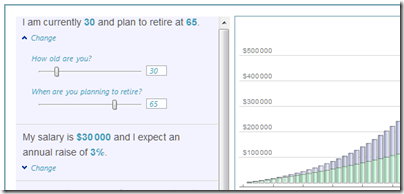

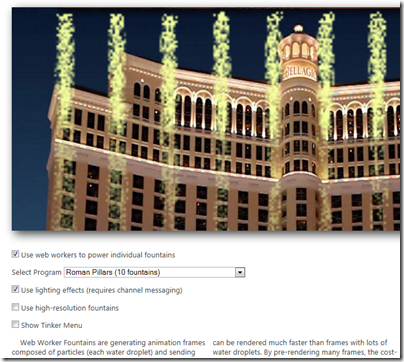

It is easy to find use cases for CDF. It is for documents where there is value in performing calculations or interacting with data within the page. An example is pension planning:

We have all seen those documents with a series of projections based on different assumptions about retirement age, contributions, investment growth and so on. This works better as an interactive chart where you can enter whatever values you like.

Other examples are statistical analysis and business intelligence, textbooks and course books where students can interact with equations and simulations, business proposals where you want to show how financial projections change based on different assumptions, or even general news reports where instead of a static chart you might want to show interactive graphics that let readers drill down into the data that interests them, or see real-time results.

Along with the computation engine, CDF supports a decent range of traditional content formatting features including cascading stylesheets.

Wolfram is correct in assuming that this kind of interactive document is important, and something we will increasingly take for granted in the era of the Web, eBooks and tablets. But can it succeed in establishing its own new document format when we already have HTML, Adobe PDF and Flash, Microsoft Excel and PowerPoint, and other formats which are also capable of embedded interactive content?

That is a key question. Wolfram offers a table which claims to show the benefits of CDF versus competitors such as HTML and PDF, but it is as skewed as these tables usually are. Wolfram says a PDF document cannot be compiled as a standalone executable, for example, but a PDF in an Adobe AIR application comes close. It is also worth noting that you can embed Flash in PDF, which would be an obvious route to something like the pension planning document mentioned above.

Nevertheless, CDF does have advantages. In particular, it has Mathematica, and whereas authoring a Flash applet requires programming and design skills, Mathematica is more approachable presuming you have the necessary mathematical, scientific or financial skills; and if you do not, you should not be authoring the document. Mathematica will construct a user interface automatically. It also has a huge range of built-in algorithms, functions and charts. Wolfram claims that authoring a CDF should be within reach of anyone who can work with an Excel macro.

The challenge Wolfram faces is how to make CDF usable across a broad range of devices and clients. Having to install a player or plug-in is a considerable deterrent. PDF or better still HTML5 has broader reach and works on Google Android and Apple iOS as well as on desktop PCs.

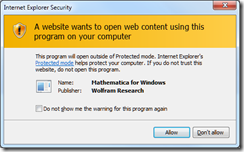

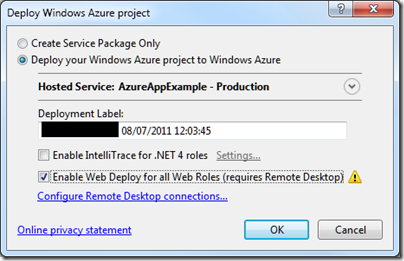

I tried the CDF plugin and player on Windows 7 and encountered several issues. The plug-in does not play nicely with Internet Explorer’s Protected mode and I saw this dialog frequently:

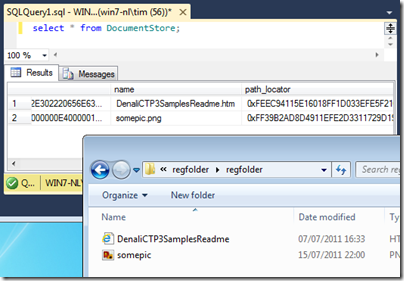

I also had some issues with the player. I could not get an example document on Gulf Oil Spill Estimation to work:

The player is currently for Windows, Mac and Linux – what about Apple iOS? Wolfram says it is working on this, with a two-pronged approach. One idea is presumably based on some sort of app, I’d guess either a player if Apple allows it, or some way to compile a CDF into an app. The other idea is to render the interactive parts server-side, so you could use them in a web page without a plug-in. This second idea could also remove the need for a plug-in on the desktop. You will get a performance hit because of all those trips back and forth to the server, but this could be mitigated by high performance computing on the server that will perform calculations more quickly than your client.

I can see CDF being popular within its niche, but whether it can transition into being a mass-market format I am not sure. Established plug-ins and runtimes such as Adobe Flash, Microsoft Silverlight, and Java on the client are all under pressure, particularly as Apple’s iOS spreads its reach; it is not a good moment to launch a new format that has a plug-in or runtime dependency. I wonder if Wolfram is exploring the possibility of compilation to HTML5 and JavaScript?

Despite these reservations, the broader vision behind CDF seems to me spot-on. There are many cases where we currently see static charts, that would be better served by an embedded computation engine.