I am reading the excellent book Continuous Delivery by Jez Humble and David Farley. But what is Continuous Delivery and how does it differ from the other “continuous” development methodologies?

It helps to understand that all these methodologies spring from the Agile software development movement, and the expression Continuous Delivery comes from the first of the principles behind the Agile Manifesto:

Our highest priority is to satisfy the customer through early and continuous delivery of valuable software.

Now, the starting assumption is that most software projects integrate a number of smaller projects, whether from third parties or from team members. Since these pieces are developed to some extent independently, there is a risk that changes made to one piece will require modifications to another piece. Hence, according to Humble and Farley:

Most software developed by large teams spends a significant proportion of its development time in an unusable state.

The business of getting all the parts to work together is called integration, and if this involves serious work you need an integration phase where this is the sole objective. This is a bad idea: it slows development, and it means that proper testing can happen only at the end of each integration phase.

The solution is called Continuous Integration (CI). You have a frequent automated build that assembles all the pieces from all the teams into a working application. If the build fails, or if automated tests run against the build fail, then this is a bug that should be fixed immediately, not later in some separate phase of development.
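To make the idea concrete, here is a minimal sketch of what a CI run boils down to. It is not how any particular CI server works; the names `StageResult`, `run_pipeline` and `build_is_green` are made up for illustration, and each stage is just a function that returns `True` on success.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class StageResult:
    """Outcome of one pipeline stage (hypothetical type, for illustration)."""
    name: str
    passed: bool


def run_pipeline(stages: List[Tuple[str, Callable[[], bool]]]) -> List[StageResult]:
    """Run the stages in order and stop at the first one that fails."""
    results: List[StageResult] = []
    for name, stage in stages:
        passed = bool(stage())
        results.append(StageResult(name, passed))
        if not passed:
            # A failed build or test is a bug to fix now, so nothing
            # further down the pipeline is attempted.
            break
    return results


def build_is_green(results: List[StageResult]) -> bool:
    """The build is green only if every stage that ran has passed."""
    return all(result.passed for result in results)


if __name__ == "__main__":
    # Stand-in stages; a real pipeline would compile and run test suites.
    outcome = run_pipeline([
        ("build", lambda: True),
        ("unit tests", lambda: False),
        ("acceptance tests", lambda: True),
    ])
    for result in outcome:
        print(result.name, "passed" if result.passed else "FAILED")
    print("green" if build_is_green(outcome) else "red: fix before moving on")
```

The point of stopping at the first failure is that a red build is everyone's immediate problem, rather than something to sort out in a later integration phase.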

Tools for CI include CruiseControl, Hudson, TeamCity and others; .NET developers can also configure Visual Studio Team Foundation Server for CI.

The problem with CI alone is that the development environment is not the same as the production environment. What if the CI build works and tests pass, but once deployed the application breaks or performs badly? Perhaps the development environment runs a multi-tier application with all the tiers on a single box, but when deployed onto actual multiple machines or VMs, something goes wrong. Permission problems are another common source of errors.

Continuous Delivery means that you not only build the software, but also deploy it frequently. This usually means provisioning servers, which you can automate using a tool like Puppet for Unix-like servers, or with Virtual Lab Management in a Visual Studio environment. Automation is pretty much essential for this to work. The more closely the test environment matches the production environment, the better.

Generally though, Continuous Delivery means deployment to a test environment. What about taking the next step, and deploying continuously to production? That is the methodology called Continuous Deployment. It sounds risky; but if you have a very extensive and thorough set of automated tests, then the risks are mitigated, especially as the extent of the changes in any one deployment is reduced.

Other suggestions for reducing risk include deploying to a small subset of users first, called “canary testing”; and making rollback easy.
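As a rough illustration of canary testing, the sketch below routes a fixed percentage of users to the new version by hashing the user ID. This is one common way to pick a stable subset, not something the book prescribes; the function names `in_canary` and `pick_version` and the version strings are assumptions for the example.

```python
import hashlib


def in_canary(user_id: str, percent: int) -> bool:
    """Return True if this user falls into the canary group.

    Hashing the user ID gives each user a stable bucket from 0 to 99,
    so the same user sees the same version on every request.
    """
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return bucket < percent


def pick_version(user_id: str, percent: int, stable: str, canary: str) -> str:
    """Choose which version of the application serves this user."""
    return canary if in_canary(user_id, percent) else stable


if __name__ == "__main__":
    # Hypothetical user IDs and version labels.
    for user in ["alice", "bob", "carol", "dave"]:
        print(user, pick_version(user, 10, stable="1.4.0", canary="1.5.0"))
```

Setting `percent` back to 0 sends everyone to the stable version again, which is about as easy as rollback gets; raising it gradually widens the release.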

That said, to judge by the Humble/Farley book the distinction between Continuous Delivery and Continuous Deployment is just a little blurred. The authors acknowledge that continuous deployment into production is not always a good idea. They also imply that Continuous Deployment might mean only that your application is always ready and easy to deploy into production, not that you necessarily deploy it constantly:

Your implementation should make it possible to deploy any version of your application that has made it past the automated tests into any of your environments at the push of a button, given the correct credentials.

Compliance and security are also factors that may rightly make it impossible to automate deployment to production completely.
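The push-button requirement quoted above can be sketched as a small gate in front of deployment. This is only an illustration under my own assumptions: the class `ReleaseRegistry`, its methods, and the environment and user names are invented, and a real deployment would of course do far more than record a version number.

```python
from typing import Dict, Set


class ReleaseRegistry:
    """Tracks which versions passed the automated tests (hypothetical)."""

    def __init__(self, authorized: Dict[str, Set[str]]) -> None:
        # Maps each environment name to the users allowed to deploy there.
        self._authorized = authorized
        self._passed: Set[str] = set()
        self.deployed: Dict[str, str] = {}

    def record_passing_build(self, version: str) -> None:
        """Called when a version has made it past the automated tests."""
        self._passed.add(version)

    def deploy(self, version: str, environment: str, user: str) -> None:
        """The 'button': deploy any tested version to any environment."""
        if version not in self._passed:
            raise ValueError(f"{version} has not passed the automated tests")
        if user not in self._authorized.get(environment, set()):
            raise PermissionError(f"{user} may not deploy to {environment}")
        # Stand-in for the real, automated deployment steps.
        self.deployed[environment] = version


if __name__ == "__main__":
    registry = ReleaseRegistry({"test": {"dev"}, "production": {"ops"}})
    registry.record_passing_build("1.4.0")
    registry.record_passing_build("1.5.0")
    registry.deploy("1.5.0", "production", "ops")
    # Rolling back is just deploying an earlier tested version.
    registry.deploy("1.4.0", "production", "ops")
    print(registry.deployed)
```

The credentials check is where compliance and security requirements would sit: everything up to the button can be automated, while the decision to press it for production can remain with a person.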

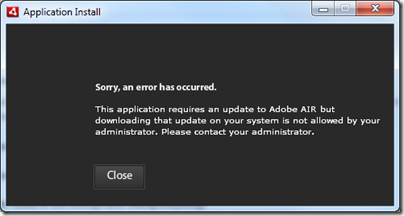

Client-installed applications present some special difficulties which Humble and Farley discuss.

In summary then:

Continuous integration: your application always builds and passes its tests, including all the pieces from different sub-teams.

Continuous delivery: your application always builds and deploys to a test environment and passes its tests.

Continuous deployment: your application is always ready to deploy to production through a largely automated process.

Update: I received an email from Martin Fowler about this post. He refers to Jez Humble’s post on Continuous Delivery vs Continuous Deployment and adds:

– I would use your definition of Continuous Deployment for Continuous Delivery

– I would change the definition of Continuous Deployment to say something like "every good build is released to production"

However, I clarified with him that if you are building for a test environment but are confident that any build that passes would be OK to deploy to production, then you are still doing Continuous Delivery. In the end, I am sure you should use Fowler and Humble’s definitions rather than mine. Still, it seems to me a fine distinction: if you are doing Continuous Delivery properly, then the transition to Continuous Deployment is largely a matter of policy.