I am just back from Beijing courtesy of Nvidia; I attended the GPU Technology Conference and also got to see not one but two supercomputers: Mole-8.5 in Beijing and Tianhe-1A in Tianjin, a coach ride away.

Mole-8.5 is currently at no. 21 and Tianhe-1A at no. 2 on the top 500 list of the world’s fastest supercomputers.

There was a reason Nvidia took journalists along, of course. Both are powered partly by Nvidia Tesla GPUs, and the trip is part of the company’s campaign to convince the world that GPUs are essential for supercomputing, because they are more efficient than CPUs. Intel says we should wait for its MIC (Many Integrated Core) CPU instead; but Nvidia has a point, and increasing numbers of supercomputers are plugging in thousands of Nvidia GPUs. That does not include the world’s current no. 1, Japan’s K Computer, but it will include the USA’s Titan, currently no. 3, which will add up to 18,000 GPUs in 2012 with plans that may take it to the top spot; we were told that it aims to be twice as fast as the K Computer.

Supercomputers are important. They excel at processing large amounts of data, so typical applications are climate research, biomedical research, simulations of all kinds used for design and engineering, energy modelling, and so on. These efforts are important to the human race, so you will never catch me saying that supercomputers are esoteric and of no interest to most of us.

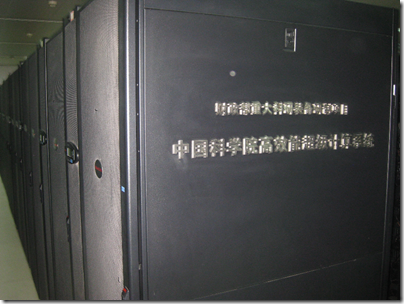

That said, supercomputers are physically little different from any other datacenter: rows of racks. Here is a bit of Mole-8.5:

and here is a bit of Tianhe-1A:

In some ways Tianhe-1A is more striking from outside.

If you are interested in datacenters, how they are cooled, how they are powered, how they are constructed, then you will enjoy a visit to a supercomputer. Otherwise you may find it disappointing, especially given that you can run an application on a supercomputer without any need to be there physically.

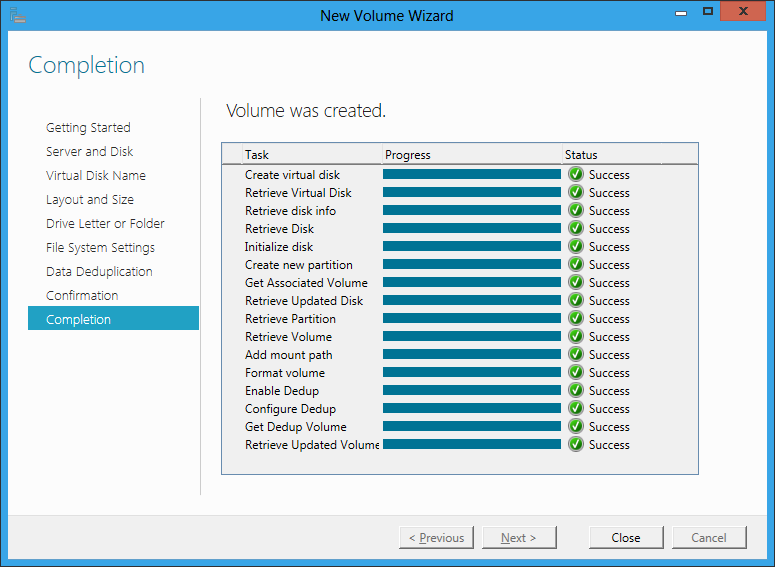

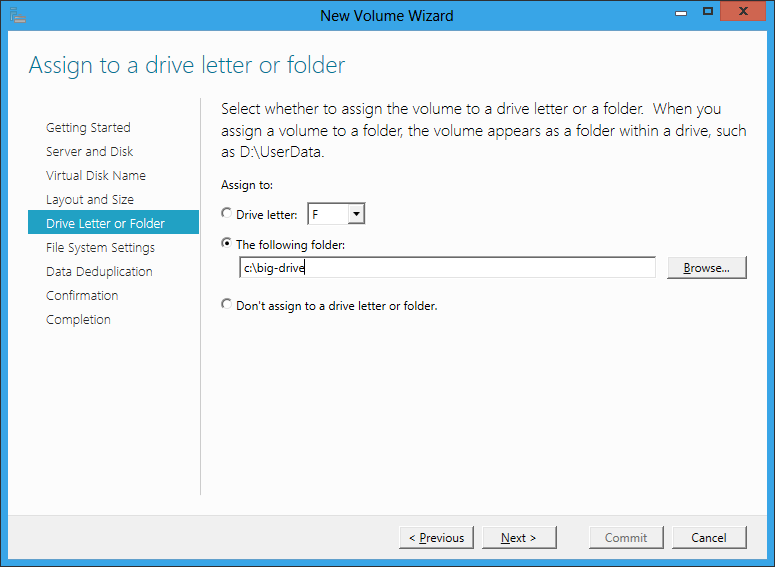

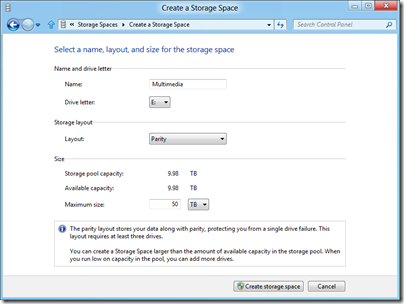

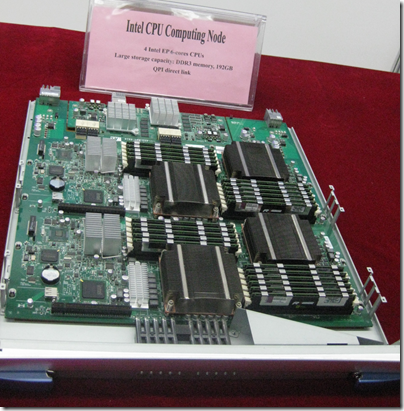

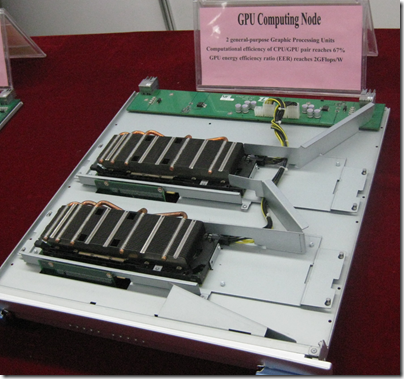

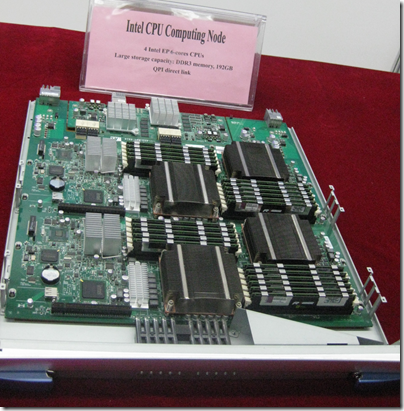

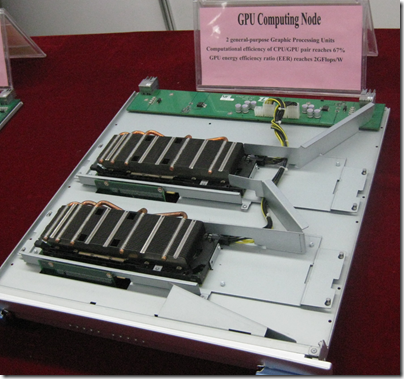

Of course there is still value in going to a supercomputing centre to talk to the people who run it and find out more about how the system is put together. Again, though, I should warn you that physically a supercomputer is repetitive. Supercomputers achieve their mighty flop/s (floating point operations per second) counts by having lots and lots of processors (whether CPU or GPU) running in parallel. You can make a supercomputer faster by adding another cupboard with another set of racks with more boards with CPUs or GPUs, and provided your design is right you will get more flop/s.
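The caveat about design can be made concrete with a rough sketch. The Python below uses Amdahl's law, a standard textbook model that was not part of the briefings, to estimate the speedup from adding processors when a small fraction of the work cannot run in parallel. The 99.9% parallel fraction and the processor counts are illustrative assumptions, not measurements from either machine.

```python
def amdahl_speedup(processors: int, parallel_fraction: float) -> float:
    """Speedup over a single processor under Amdahl's law."""
    if processors < 1:
        raise ValueError("processors must be at least 1")
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    # The serial part runs at the same speed however many processors exist.
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / processors)


if __name__ == "__main__":
    # Hypothetical workload: 99.9% of the run time parallelises perfectly.
    parallel_fraction = 0.999
    for processors in (1, 1_000, 10_000, 100_000):
        speedup = amdahl_speedup(processors, parallel_fraction)
        print(f"{processors:>7} processors: {speedup:8.1f}x speedup")
```

Under these assumptions the speedup flattens out below 1,000x no matter how many processors are added, which is one way to read "provided your design is right": the serial portion, including time spent waiting on the network, has to be kept very small.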

Yes there is more to it than that, and points of interest include the speed of the network, which is critical in order to support high performance, as well as the software that manages it. Take a look at the K Computer’s Tofu Interconnect. But the term “supercomputer” is a little misleading: we are talking about a network of nodes rather than a single amazing monolithic machine.

Personally I enjoyed the tours, though the visit to Tianhe-1A was among the more curious visits I have experienced. We visited along with a bunch of Nvidia executives. The execs sat along one side of a conference table, the Chinese hosts along the other side, and they engaged in a diplomatic exercise of being very polite to each other while the journalists milled around the room.

We did get a tour of Tianhe-1A but unfortunately little chance to talk to the people involved, though we did have a short group interview with the project director, Liu Guangming.

He gave us short, guarded but precise answers, speaking through an interpreter. We asked about funding. “The way things work here is different from how it works in the USA,” he said. “The government supports us a lot; the building and infrastructure, all the machines, are all paid for by the government. The government also pays for the operational cost.” Nevertheless, users are charged for their time on Tianhe-1A, though this is to promote efficiency. “If users pay they use the system more efficiently, that is the reason for the charge,” he said. However, the users also get their funding from the government’s research budget.

Downplayed on the slides, but mentioned here, is the fact that the supercomputer was developed by the “National team of defence technology.” Food for thought.

We also asked about the usage of the GPU nodes as opposed to the CPU nodes, having noticed that many of the applications presented in the briefing were CPU-only. “The GPU stage is somewhat experimental,” he said, though he is “seeing increasing use of the GPU, and such a heterogeneous system should be the future of HPC [High Performance Computing].” Some applications do use the GPU and the results have been good. Overall the system has 60-70% sustained utilisation.

Another key topic: might China develop its own GPU? Tianhe-1A already includes 2048 China-designed “Galaxy FT” CPUs, alongside 14336 Intel CPUs and 7168 NVIDIA GPUs.

“We already have the technology,” said Guangming.

“From 2005 to 2007 we designed a chip, a stream processor similar to a GPU. But the peak performance was not that good. We tried AMD GPUs, but they do not have ECC [error-correcting code memory], so that is why we went to NVIDIA. China does have the technology to make GPUs. Also the technology is growing, but what we implement is a commercial decision.”

Liu Guangming closed with a short speech.

“Many of the people from outside China might think that China’s HPC experienced explosive development last year. But China has been involved in HPC for 20 years. Next, the Chinese government is highly committed to HPC. Third, the economy is growing fast and we see the demand for HPC. These factors have produced the explosive growth you witnessed.

“The Tianjin Supercomputer is open and you are welcome to visit.”