Adobe has announced that Flash 11 and AIR 3 will ship in early October.

There are significant changes in this release.

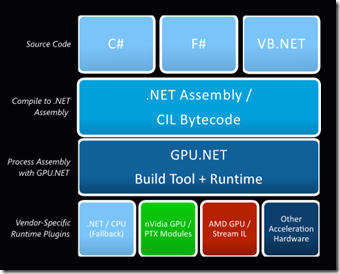

- Flash gets Stage 3D (previously codenamed Molehill), a set of low-level 3D APIs, GPU accelerated where hardware allows, which will make console-like 3D graphics and games possible in Flash. Stage 3D wraps DirectX on Windows and OpenGL on desktop and mobile platforms.

- 64-bit Flash is here at last, supporting 64-bit Internet Explorer and other browses on Windows, Mac and Linux.

- AIR, which uses Flash as a runtime for desktop and mobile applications, now supports native extensions for better device support, operating system integration, and the ability to speed performance-critical code or use open source libraries.

- In addition, the AIR packager for iOS, which lets you wrap your application as a native executable, is now a feature called Captive Runtime which is available for Windows, Mac and Android as well as iOS. Users who install a packaged application will not know it uses AIR, and will not need to install or update the AIR runtime as it is packaged with the application, though it is not actually a single file (on Windows at least).

These new options make the Flash and AIR combination an interesting comparison with other cross-platform development tools, such as Embarcadero’s new Delphi XE2, which targets Windows, Mac and iOS with a new framework called FireMonkey; or Appcelerator’s Titanium tool for cross-platform desktop and mobile development. Note though that Adobe is not promising any performance improvement. This is just another way to package the same runtime.

Adobe’s advantage is its high quality design and development tools and the maturity of the Flash runtime. For application size and performance, it will likely fall short of true native development tools. The ActionScript language could do with updating, and I would not be surprised if Adobe addresses this in the next major Flash release.

But do we still need Flash? Flash in the browser is in decline, thanks to the influence of Apple and the rise of HTML 5. Adobe’s MAX conference is coming up soon, and I noticed in the schedule [Flash needed] a defensive note in some of the sessions; there is even one called “The Death of Flash” which talks about “the misinformation that’s percolated through the web over the past year”.

That may be so; but even Adobe is re-positioning Flash and recognizing the rise of HTML 5. “Customers see significant advantages for Flash in a few focused areas,” said Adobe’s Danny Winokur, VP and General Manager of Platform , in a press briefing. He identified these areas as gaming, media apps, and “sophisticated data-driven applications” – think data visualisation rather than just forms over data. “For everything else it is very clear that … HTML 5 is a mature enough technology that it is a really good solution.”

Adobe is therefore investing in HTML 5 tools as well as Flash tools, and Winokur mentioned the Edge motion design tool as well as the venerable Dreamweaver.

I asked Winokur, given that HTML 5 is maturing fast, how Adobe sees the picture vs Flash in say two years time. He replied that Adobe is actively working to advance HTML 5, but that “there will continue to be opportunities for innovation in Flash, where we can … enable new possibilities that did not previously exist on the Web.” He makes the case for Flash as a kind of leading edge for HTML, with features that eventually become part of the HTML standard.

It is a fair point, but it is obvious that the niche for Flash is getting smaller rather than larger.

Adobe has never charged for the Flash runtime, and while the Flash vs HTML path is tricky to navigate, Adobe mainly makes its money from design tools, server applications and web analytics, and while Flash plays some client role in many of these products, Adobe can tune them over time to make less use of the runtime. I believe we can see this happening.

More positively, Adobe is benefiting from the demand for rich content across both web and applications, and has just reported decent financial results, showing the company’s resilience.

Finally, everyone is asking what Adobe will do about Microsoft’s WIndows 8 Metro platform for tablets, given that browser plug-ins are not supported. Here is the answer:

… we expect Flash based apps will come to Metro via Adobe AIR, much the way they are on Android, iOS and BlackBerry Tablet OS today

though I hope this will be delivered more quickly than the promised Flash runtime for Windows Phone 7, which is not a subject either Adobe or Microsoft seems willing to talk about.

Update: Adobe has also announced the Flex 4.6 SDK and Flash Builder 4.6, which supports these new capabilities including Captive Runtime and Native Extensions, and has new controls specifically aimed at tablet apps.