I’m just back from Microsoft’s BUILD conference at Anaheim in California, which lived up to the hype as a key moment of transition for the company. Some said it was the most significant PDC – yes, it was really the Professional Developers Conference renamed – since 2000, when .NET was introduced; some said the most significant ever.

“Significant” does not necessarily mean successful, and history will judge whether BUILD 2011 was a new dawn or the beginning of the end for Windows. Nevertheless, I have not heard so much cheering and whooping at a Microsoft conference for a while, and although I am no fan of cheering and whooping I recognise that there was genuine enthusiasm there for the new direction that was unveiled.

So what happened? First, let me mention the Windows Server 8 preview, which looks a solid upgrade to Server 2008 with a hugely improved Hyper-V virtualisation and lots of changes in storage, in IIS, networking, in data de-duplication, in modularisation (enabling seamless transition between Server Core and full Server) and in management, with the ascent of PowerShell scripting and recognition that logging onto a GUI on the server itself is poor practice.

The server team are not suffering the same angst as the client team in terms of direction, though the company has some tricky positioning to do with respect to Azure (platform) and Server 8 (infrastructure) cloud computing, and how much Microsoft hosts in its own datacentres and how much it leaves to partners.

What about Windows client? This is the interesting one, and you can almost hear the discussions among Microsoft execs that led them to create the Windows Runtime and Metro-style apps. There is the Apple iPad; there is cloud; there are smartphones; and Windows looks increasingly like a big, ponderous, legacy operating system with its dependence on keyboard and mouse (or stylus), security issues, and role as a fat client when the industry is moving slowly towards a cloud-plus-device model.

At the same time Windows and Office form a legacy that Microsoft cannot abandon, deeply embedded in the business world and the source of most of the company’s profits. The stage is set for slow decline, though if nothing else BUILD demonstrates that Microsoft is aware of this and making its move to escape that fate.

Its answer is a new platform based on the touch-friendly Metro UI derived from Windows Phone 7, and a new high-performance native code runtime, called Windows Runtime or WinRT. Forget Silverlight or WPF (Windows Presentation Foundation); this is a new platform in which .NET is optional, and which is friendly to all of C#, C/C++, and HTML5/JavaScript. That said, WinRT is a locked-down platform which puts safety and lock-in to Microsoft’s forthcoming Windows Store ahead of developer freedom, especially (and I am speculating a little) in the ARM configuration of which we heard little at BUILD.

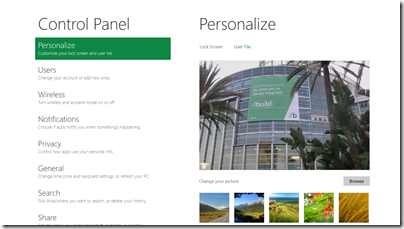

BUILD attendees were given a high-end Samsung tablet with Windows 8 pre-installed, and in general the Metro-style UI was a delight, responsive and easy to use, and with some fun example apps, though many of the apps that will come as standard were missing and there was evidence of pre-beta roughness and instability in places.

The client strategy seems to me to look like this:

Windows desktop will trundle on, with a few improvements in areas like boot time, client Hyper-V, and the impressive Windows To Go that runs Windows from a bootable and bitlocker-encrypted USB stick leaving no footprint on the PC itself. Many Windows 8 users will spend all their time in the desktop, and I suspect Microsoft will be under pressure to allow users to stick with the old Start menu if they have no desire or need to see the new Metro-style side of Windows..

A new breed of Intel tablets and touch-screen notebooks will make great devices towards the high end of mobile computing. This is something I look forward to taking with me when I need to work on the road: Metro-style apps for when you are squashed in an aeroplane seat, browsing the web or checking a map, but full Windows only a tap away. These will be useful but slightly odd hybrids, and will tend to be expensive, especially as you will want a keyboard and stylus or trackpad for working in desktop Windows. They will not compete effectively with the iPad or Android tablets, being heavier, with shorter battery life, more expensive and less secure. They may compete well with Mac notebooks, depending on how much value Metro adds for business users mainly focused on desktop applications.

Windows on ARM, which will be mainly for Metro-style apps and priced to compete with other media tablets. This is where Microsoft is being vague, but we definitely heard at BUILD that only Metro-style apps will be available from the Windows Store for ARM, and even hints that there may be no way to install desktop apps. I suspect that Microsoft would like to get rid of desktop Windows on ARM, but that it will be too difficult to achieve that in the first iteration. One unknown factor is Office. It is obvious that Microsoft cannot rework full Office for Metro by this time next year; yet offering desktop Office will be uncomfortable and (knowing Microsoft) expensive on a lightweight, Metro-centric ARM device. Equally, not offering Office might be perceived as throwing away a key advantage of Windows.

Either way, Windows on ARM looks like Microsoft’s iPad competitor, safe, cloud-oriented, inexpensive, long battery life, and lots of fun and delightful apps, if developers rush to the platform in the way Microsoft hopes.

There are several risks for Microsoft here. OEM partners may cheapen the user experience with design flaws and low-quality add-ons. Developers may prove reluctant to invest in an unproven new platform. It may be hard to get the price down low enough, bearing in mind Apple’s advantage with enormous volume purchasing of components for iPad.

Still, one clever aspect of Microsoft’s strategy is that everyone with Windows 8 will have Metro, which means there will be a large installed base even if many of those users only really want desktop Windows.

I also wonder if this is an opportunity for Nokia, to use its hardware expertise to deliver excellent devices for Windows on ARM.

Finally, let me mention a few other BUILD highlights. Anders Hejlsberg spoke on C# and VB futures (though I note that there were few VB developers at BUILD) and I was impressed both by the new asynchronous programming support and the forthcoming compiler API which will enable some amazing new capabilities.

I also enjoyed Don Syme’s session on F#, where he focused on programming information rather than mere algorithms, and showed how the language can query internet data sources with IntelliSense and code hints in the IDE, inferred from schemas retrieved dynamically. You really need to watch his session to understand what this means.

In the end this was a great conference, with an abundance of innovation and though-provoking technology. In saying that, I do not mean to understate the challenges this huge company still faces.