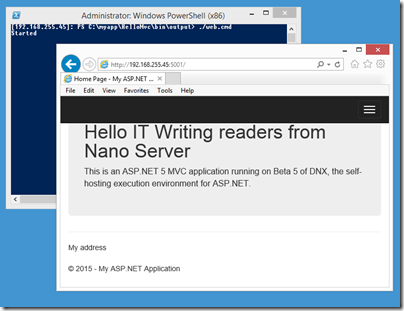

I have been trying out Microsoft’s Nano Server Preview and wrote up initial experiences for the Register. One of the things I mentioned is that I could not get an ASP.NET app successfully deployed. After a bit more effort, and help from a member of the team, I am glad to say that I have been successful.

What was the problem? First, a bit of background. Nano Server does not run the .NET Framework, presumably because it has too many dependencies on pieces of Windows which Microsoft wanted to omit from this cut-down deployment. Nano Server does support .NET Core, also known as Core CLR, which is the open source fork of the .NET Framework. This enables it to run PowerShell, although with a limited range of cmdlets, and my main two ways of interacting with Nano Server are with PowerShell remoting, and Windows file sharing for copying files across.

On your development machine, you need several pieces in order to code for ASP.NET 5.0. Just installing Visual Studio 2015 RC will do, except that there is currently an incompatibility between the version of the ASP.NET 5.0 .NET Core runtime shipped with Visual Studio, and what works on Nano Server. This meant that my first effort, which was to build an empty ASP.NET 5.0 template app and publish it to the file system, failed on Nano Server with a NativeCommandError.

This meant I had to dig a bit more deeply into ASP.NET 5.0 running on .NET Core. Note that when you deploy one of these apps, you can include all the dependencies in the app directory. In other words, apps are self-hosting. The binary that enables this bit of magic is called DNX (.NET Execution Environment); it was formerly known as the K runtime.

Developers need to install the DNX SDK on their machines (Windows, Mac or Linux). There is currently a getting started guide here, though note that many of the topics in this promising documentation are as yet unwritten.

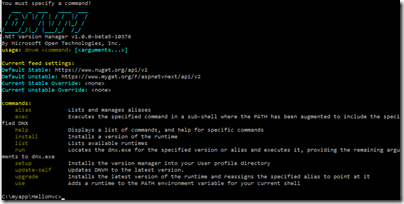

However, after installation you will be able to use several handy commands:

dnvm This is the .NET Version manager. You can have several versions of the DNX runtime installed and this utility lets you list them, set aliases to save typing full paths, and manage defaults.

dnu This is the .NET Development Utility (formerly kpm) that builds and publishes .NET Core projects. The two commands I found myself using regularly are dnu restore which downloads Nuget (.NET repository) packages and dnu publish which packages an app for deployment. Once published, you will find .cmd files in the output which you use to start the app.

dnx This is the binary which you call to run an app. On the development machine, you can use dnx . run to run the console app in the current directory and dnx . web to run the web app in the current directory.

Now, back to my deployment issues. The Visual Studio templates are all hooked to DNX beta 4, and I was informed that I needed DNX beta 5 for Nano Server. I played around with trying to get Visual Studio to target the updated DNX but ran into problems so decided to ignore Visual Studio and do everything from the command line. This should mean that it would all work on Mac and Linux as well.

I had a bit of trouble persuading DNX to update itself to the latest unstable builds; the main issue I recall is targeting the correct repository. You NuGet sources must include (currently) https://www.myget.org/F/aspnetvnext/api/v2.

Since I was not using Visual Studio, I based my samples on these, Hello World Console, MVC and Web apps that you can use for testing that everything works. My technique was to test on the development machine using dnx . web, then to use dnu publish and copy the output to Nano Server where I could run ./web.cmd in a remote PowerShell session.

Note that I found it necessary to specify the CoreClr 64-bit runtime in order to get dnu to publish the correct files. I tried to make this the default but for some reason* it reverted itself to x86:

dnu publish –runtime "c:\users\[USERNAME]\.dnx\runtime\dnx-coreclr-win-x64.1.0.0-beta5-11701"

Of course the exact runtime version to use will change soon.

If you run this command and look in the /bin/output folder you will find web.cmd, and running this should start the app. The port on which the app listens is set in project.json in the top level directory of the project source. I set this to 5001, opened that port in the Windows Firewall on the Nano Server, and got a started message on the command line. However I still could not browse to the app running on Nano Server; I got a 400 error. Even on the development machine it did not work; the browser just timed out.

It turned out that there were several issues here. On the development machine, which is running Windows 10 build 10074, I discovered to my annoyance that the web app worked fine with Internet Explorer, but not in Project Spartan, sorry Edge. I do not know why.

Support also gave me some tips to get this working on Nano Server. In order for the app to work across the network, you have to edit project.json so that localhost is replaced either with the IP number of the server, or with a *. I was also advised to add dnx.exe to the allowed apps in the firewall, but I do not think this is necessary if the port is open (it is a nuisance, since the location of dnx.exe changes for every app).

Finally I was successful.

Final observations

It seems to me that ASP.NET vNext running on .NET Core has the characteristic of many open source projects, a few dedicated people who have little time for documentation and are so close to the project that their public communications assume a fair amount of pre-knowledge. The site I referenced above does have helpful documentation though, for the few topics that are complete. Some other posts I found helpful are this series by Steve Perkins, and the troubleshooting suggestions here especially David Fowler’s post.

I like The .NET Core initiative overall since I like C# and ASP.NET MVC and now it is becoming a true cross-platform framework. That said, the code does seem to be in rapid flux and I doubt it will really be ready when Visual Studio 2015 ships. The danger I suppose is that developers will try it in the first release, find lots of problems, and never go back.

I also like the idea of running apps in Nano Server, a low-maintenance environment where you can get the isolation of a dedicated server for your app at low cost in terms of resources.

No doubt though, the lack of pieces that you expect to find on Windows Server will be an issue and I am not sure that the mainstream Microsoft developer ecosystem will take to it. Aidan Finn is not convinced, for example:

Am I really expected to deploy a headless OS onto hardware where the HCL certification has the value of a bucket with a hole in it? If I was to deploy Nano, even in cloud-scale installations, then I would need a super-HCL that stress tests all of the hardware enhancements. And I would want ALL of those hardware offloads turned OFF by default so that I can verify functionality for myself, because clearly, neither Microsoft’s HCL testers nor the OEMs are capable of even the most basic test right now.

Finn’s point is that if your headless server is having networking issues it is hard to troubleshoot, since of course remote tools will not work reliably. That said, I have personally run Hyper-V Server (which is essentially Server Core with just the Hyper-V role) with great success for several years; I started keeping notes on how to troubleshoot from the command line and found solutions to common problems. If networking fails with Nano Server then yes, you have a problem, but there is always something you can do, even if it means mounting the Nano Server VHD or VHDX on another VM. Windows Server admins have become accustomed to a local GUI though and adjusting even to Server Core has not been easy.

*the reason was that I did not use the –p argument with dnvm use which would have made it persistent