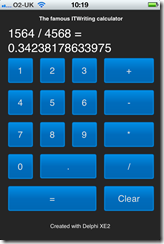

I have been trying out JetBrains’ AppCode which meant working in an Apple development environment for a time. I took the opportunity to implement my simple calculator app in iOS native code.

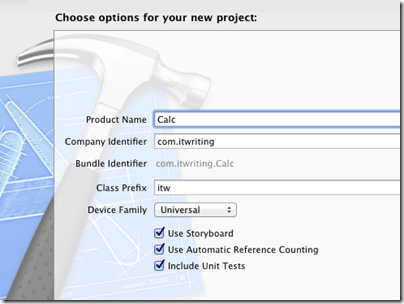

Objective C is a distinctive language with a mixed reputation, but I enjoy coding with it. I used Automatic Reference Counting (ARC), a feature introduced in Xcode 4.2 and OSX 10.7, iOS 5; ARC now also works with 10.6 and iOS 4. This means objects are automatically disposed, and I did not have to worry about memory management at all in my simple app. This is not a complete memory management solution (if there is such a thing) – if you use malloc you must use free – but it meant that the code in my app is not particularly verbose or complex compared to other languages. Apple’s libraries seem to favour plain English method names like StringByAppendingString which makes for readable code.

I was impressed by how easy it is to make an app that looks good, because the controls are beautifully designed. I understand the attraction of developing solely for Apple’s platform.

I also love the integrated source control in Xcode. You find yourself using a local Git repository almost without thinking about it. Microsoft could learn from that; no need for Team Foundation Server for a solo developer.

I did miss namespaces. In Objective C, if you want to remove the risk of name collision with a library, you have to use your own class prefix (and hope that nobody else picked the same one).

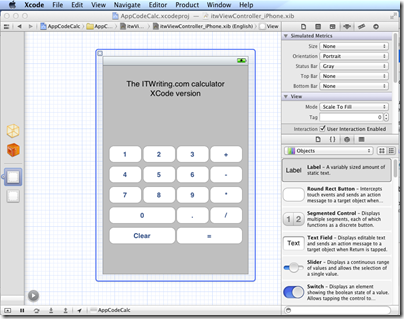

Interface Builder, the visual UI designer, is great but many developers do not use it, because coding the UI without it is more flexible. It is a shame that you have to make this choice, unlike IDE’s with “two way tools” that let you edit in code or visually and seamlessly keep the two in synch. I found myself constantly having to re-display windows like the Attributes Inspector though it is not too bad once you learn the keyboard shortcuts. The latest Interface Builder has a storyboard feature which lets you define several screens and link them. It looks useful, though when I played with this I found it difficult to follow all the linking lines the designer drew for me.

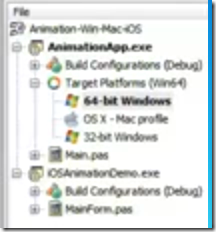

It is interesting to compare the Mac and iOS development platform with that for Windows. Microsoft promotes the idea of language choice, though most professional development is either C# or C++, whereas on Apple’s platform it is Objective C and Cocoa or you are on your own. Although Mac and Windows are of a similar age, Microsoft’s platform gives a GUI developer more choices: Win32, MFC, WTL, Windows Forms, Windows Presentation Foundation and Silverlight, and in Windows 8 the new WinRT.

I get the impression that Microsoft is envious of this single-minded approach and trying to bring it to Metro-style Windows 8, where you still have a choice of languages but really only one GUI framework.

That said, Visual Studio is an impressive tool and both C# and C++ have important features which are lacking in Objective C. I would judge that Visual Studio is the more productive tool overall, but Apple’s developer platform has its own attractions.