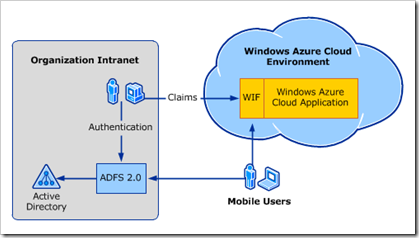

Microsoft has posted a white paper setting out what you need to do so that users who are signed on to a local Windows domain can use an Azure-hosted application seamlessly, without having to sign in again.

I think this is a huge feature. Maintaining a single user directory is more secure and more robust than trying to synchronise a local directory with a cloud-hosted one, and the need to synchronise is a point of friction when it comes to adopting services such as Google Apps or Salesforce.com. Single sign-on with federated directory services takes that friction away. As an application developer, you can write code that looks the same as it would for a locally deployed application, but host it on Azure.

There is also a usability issue. Users hate having to sign in multiple times, and hate it even more if they have to maintain separate username/password combinations for different applications (though we all do).

The white paper explains how to use Active Directory Federation Services (ADFS) and Windows Identity Foundation (WIF, part of the .NET Framework) to achieve both single sign-on and access to user data across the local network and the cloud.
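To give a feel for what happens on the wire, here is a minimal sketch of the sign-in redirect that a WS-Federation relying party sends to the identity provider. It is not taken from the white paper. The parameter names (`wa`, `wtrealm`, `wreply`, `wctx`) come from the WS-Federation passive profile; the host names and the `build_signin_url` helper are hypothetical, and the `/adfs/ls/` path is an assumption about where your ADFS server exposes its sign-in endpoint.

```python
from urllib.parse import urlencode


def build_signin_url(sts_endpoint: str, realm: str, reply_url: str, context: str = "") -> str:
    """Build a WS-Federation passive sign-in request URL (illustrative helper)."""
    params = {
        "wa": "wsignin1.0",   # action: ask the identity provider to sign the user in
        "wtrealm": realm,     # identifier of the relying party, here the Azure-hosted app
        "wreply": reply_url,  # where the identity provider should send the token
    }
    if context:
        params["wctx"] = context  # opaque state handed back to the application
    return f"{sts_endpoint}?{urlencode(params)}"


if __name__ == "__main__":
    # Hypothetical hosts: substitute your own ADFS server and application address.
    print(build_signin_url(
        "https://adfs.example.local/adfs/ls/",
        "https://myapp.example.cloudapp.net/",
        "https://myapp.example.cloudapp.net/",
    ))
```

In practice WIF builds and handles this redirect for you; the sketch only shows that the application and the directory communicate through the browser, which is why the user already signed on to the domain is not prompted again.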

The snag? It is a complex process. The white paper has a walk-through, though to complete it you also need a separate guide on setting up ADFS and WIF. There are numerous steps, some of which are not obvious. Did you know that “.NET 4.0 has new behavior that, by default, will cause an error condition on a page request that contains a WS-Federation authentication token”?
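The .NET 4.0 behaviour quoted above is easier to understand once you see what the token looks like. A WS-Federation sign-in response is posted back to the application as a form field named `wresult` containing XML, and a framework that rejects markup in posted form values will object to it. The sketch below is a deliberately simplified stand-in for that kind of check, not ASP.NET's actual validation logic; the `looks_like_markup` and `risky_fields` helpers and the sample form values are hypothetical.

```python
import re

# Simplified rule (an assumption for illustration): treat "<" followed by a
# letter, "!", "/" or "?" as markup that a request validator would reject.
_MARKUP = re.compile(r"<[A-Za-z!/?]")


def looks_like_markup(value: str) -> bool:
    """Return True if the posted value contains something resembling a tag."""
    return _MARKUP.search(value) is not None


def risky_fields(form: dict) -> list:
    """List the posted form fields that the simplified check would flag."""
    return [name for name, value in form.items() if looks_like_markup(value)]


if __name__ == "__main__":
    # Shape of a sign-in response post; the token content itself is elided.
    posted_form = {
        "wa": "wsignin1.0",
        "wresult": "<t:RequestSecurityTokenResponse>(token elided)</t:RequestSecurityTokenResponse>",
    }
    print(risky_fields(posted_form))
```

The point is only that the token is legitimate input that happens to look like an attack to a generic filter, which is the sort of non-obvious detail that makes the walk-through longer than you might expect.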

Of course, dealing with complexity is part of the job of a developer or system administrator. Then again, complexity also means more to remember and more to troubleshoot, and less incentive to try it out.

One of the reasons I am enthusiastic about Windows Small Business Server Essentials (codename Aurora) is that it promises to do single sign-on to the cloud in a truly user-friendly manner. According to a briefing I had from SBS technical product manager Michael Leworthy, cloud application vendors will supply “cloud integration modules,” connectors that you install into your SBS to get instant single sign-on integration.

SBS Essentials does run ADFS under the covers, but you will not need a 35-page guide to get it working, or so we are promised. I admit, I have not been able to test this feature yet, and aside from Microsoft’s BPOS/Office 365 I do not know how many online applications will support it.

Still, this is the kind of thing that will get single sign-on with Active Directory widely adopted.

Consider Facebook Connect. Register your app with Facebook, write a few lines of JavaScript and PHP, and you can achieve the same results: single sign-on and access to user account information. Facebook knows that to get wide adoption for its identity platform it has to be easy to implement.

On Microsoft’s platform, another option is to join your Azure instance to the local domain. This is a feature of Azure Connect, currently in beta.

Are you using ADFS, with Azure or another platform? I would be interested to hear how it is going.