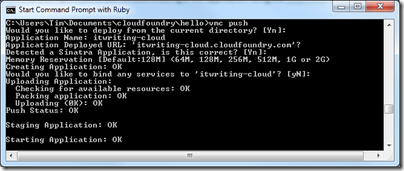

Yesterday Microsoft announced Windows Azure SDK for PHP version 3.0, an update to its open source SDK for PHP on Windows Azure. The SDK wraps Azure storage, diagnostics and management services with a PHP API.

Microsoft has been working for years on making IIS a good platform for PHP. FastCGI for IIS was introduced partly, I guess, with PHP in mind; and Microsoft runs a dedicated site for PHP on IIS. The Web Platform Installer installs a number of PHP applications including WordPress, Joomla and Drupal.

It is good to see Microsoft making an effort to support this important open source platform, and I am sure it has been welcomed by Microsoft-platform organisations who want to run WordPress, say, on their existing infrastructure.

Attracting PHP developers to Azure may be harder though. I asked Nick Hines, CTO for Innovation at Thoughtworks, a global IT consultancy and developer, what he thought of the idea.

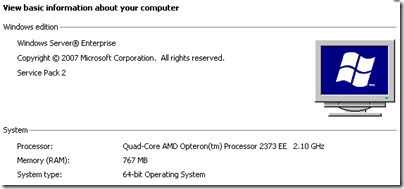

I’d struggle to see any reason. Even if you had it in your datacentre, I certainly wouldn’t advise a client, unless there was some corporate mandate to the contrary, and especially if they wanted scale, to be running a Java or a PHP application on Windows.

Microsoft’s scaling and availability story around windows hasn’t had the penetration of the datacentre that Java and Linux has. If you look at some of the heavy users of all kinds of technology that we come across , such as some of the investment banks, what they’re tending to do is to build front and middle tier applications using C# and taking advantage of things like Silverlight to get the fancy front ends that they want, but the back end services and heavy lifting and number crunching predominantly is Java or some sort of Java variant running on Linux.

Hine also said that he had not realised running PHP on Azure was something Microsoft was promoting, and voiced his suspicion that PHP would be at a disadvantage to C# and .NET when it came to calling Azure APIs.

His remarks do not surprise me, and Microsoft will have to work hard to persuade a broad range of customers that Azure is as good a platform for PHP as Linux and Apache – even leaving aside the question of whether that is the case.

The new PHP SDK is on Codeplex and developed partly by a third-party, ReadDolmen, sponsored by Microsoft. While I understand why Microsoft is using a third-party, this kind of approach troubles me in that you have to ask, what will happen to the project if Microsoft stops sponsoring it? It is not an organic open source project driven by its users, and there are examples of similar exercises that have turned out to be more to do with PR than with real commitment.

I was trying to think of important open source projects from Microsoft and the best I could come up with is ASP.NET MVC. This is also made available on CodePlex, and is clearly a critical and popular project.

However the two are not really comparable. The SDK for PHP is licensed under the New BSD License; whereas ASP.NET MVC has the restrictive Microsoft Source License for ASP.NET Pre-Release Components (even though it is now RTM – Released to manufacturing). ASP.NET MVC 1.0 was licensed under the Microsoft Public License, but I do not know if this will eventually also be the case for ASP.NET MVC 3.0.

Further, ASP.NET MVC is developed by Microsoft itself, and has its own web site as part of the official ASP.NET site. Many users may not realise that the source is published.

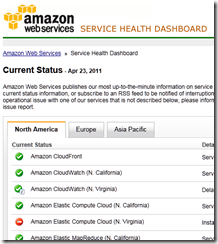

My reasoning, then, is that if Microsoft really want to make PHP a first-class citizen on Azure, it should hire a crack PHP team and develop its own supporting libraries; as well as coming up with some solid evidence for its merits versus, say, Linux on Amazon EC2, that might persuade someone like Nick Hine that it is worth a look.