Today I helped a (very) small company migrate from Small Business Server 2003 to BPOS (Business Productivity Online Suite), Microsoft’s hosted Exchange and SharePoint.

Why BPOS, when Office 365 launches later this month? Well, BPOS has all the features they need, and when given the choice between a beta-soon-to-be-just-launched online platform, and one that has been around for a few years, they chose the latter, which is reasonable. Long term it will make no difference, because BPOS users will be migrated to Office 365. It was interesting to me, since I am reviewing Office 365 and this migration gave me good insight into the differences.

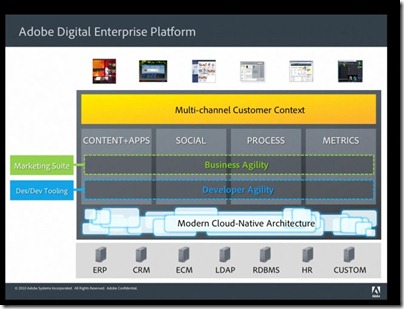

Aside: the fact that this is a choice says something about Microsoft. One of the advantages of cloud computing is that improvements can be continuous and incremental, since the software is paid for by subscription rather than through a version upgrade cycle. There is only one Salesforce.com platform; there is only one Google Apps platform. Will there be an Office 720 in two to three years' time, or will Microsoft have worked this out by then? It will be hard, because no doubt there are teams working on Exchange 2013 and SharePoint 2013 and these will be delivered as on-premise product upgrades. This also implies that the new features in these products will not be considered ready until the on-premise products go gold, which means that cloud customers have to wait a long time for major enhancements. Changing this cycle will require a profound shift in the way the company functions.

Now a few comments about the process. Overall it was pretty good, and took less time than it normally takes to migrate from one version of Small Business Server to the next. There are annoyances though, beginning with the migration tools. The challenge is that you want to move mailboxes from SBS Exchange to online Exchange without losing any email.

Email coexistence

The basic approach is this:

1. A directory synchronisation tool copies user accounts to BPOS and keeps them in sync with on-premise Active Directory.

2. A mailbox migration tool copies mailboxes to BPOS and sets up forwarding, so email arriving into on-premise Exchange is forwarded to BPOS.

This is known as email co-existence, because users can log on to either on-premise Exchange or BPOS, and still be able to send and receive mail. Clever stuff, and it does make migration nice and smooth.

The first annoyance: the directory synchronization tool must be installed on a 32-bit Windows Server that is joined to the domain but not a domain controller. Many SBS setups do not have such a thing. In this case, I ran up Virtual PC on Windows 7 64-bit, installed 32-bit Server 2003, joined it to the domain (actually over a VPN), and ran the tool from there.

Actual mailbox migration uses a separate tool which fortunately does run on the SBS server itself. Once the users are in place and enabled on BPOS, you run this tool to upload the mailboxes. This takes a while, since you are uploading what is probably several GB of data. I left this running overnight, but it was only partially successful. Two mailboxes did not upload properly and had to be redone, which was a bit untidy because in one case some folders were duplicated. Fortunately it was not hard to clean up.

Once the mailboxes are migrated, you simply install and run Microsoft’s sign-in utility on each client PC. This automatically configures Outlook with a new BPOS profile, leaving the old profile in place in case of mishaps.

The last step is to change the DNS records so that mail is actually delivered to BPOS rather than to on-premise Exchange.

SharePoint migration

This particular company is reliant on SharePoint for document sharing. Although the server is SBS 2003, they have SharePoint Services 3.0 installed, which can be done on SBS 2003 if you are careful.

Major annoyance: the BPOS documentation is silent on the subject of migrating content. There is a heading in the Migration Help for SharePoint Online; but it does not cover migration from on-premise to BPOS SharePoint at all. There are third-party tools that do this though, and some help from the community.

Of course there are multiple ways to move SharePoint content, though in some cases you will lose version information. I found this article helpful. I was able to start from Step 5, since it was already a SharePoint 3.0 site. Look how clear and concise the steps are; a refreshing contrast to Microsoft’s verbose efforts with seemingly endless sections for overviews, planning and deployment, that take ages to get to the point and still manage to omit key information.

I read the post and the comments, then created a blank site in BPOS. I backed up and then exported the existing site to CMP files, and kept it locked so that no new content would be added. Then I installed SharePoint Designer 2007, which is free, logged into the BPOS site and restored the site.

All the important things restored correctly. Unfortunately the permissions do not migrate, because the BPOS domain is different from the SBS domain; Active Directory is only synchronized between the two. I had to fix this up by deleting dud users and groups from the new site and adding groups and users for BPOS. I also added a few web parts to the otherwise blank home page. Nevertheless, considering how painful SharePoint migrations can be, this one was pretty good.

I understand though that this simple approach does not always work. I would guess that the more SharePoint is customised, the more likely you are to have problems, which is probably why there is no official tool.

Exchange issues

There were a couple of issues with Exchange. The first was public folders, which are not supported in BPOS. The solution is to use SharePoint lists. Here is how it goes:

- Open an on-premise Outlook profile with full access to the public folders. Export each public folder to an Outlook .pst file – the best format for preserving all the data.

- Go to SharePoint online and create a new list of the appropriate type. For example, for tasks you need a task list, for contacts a contact list.

- Open Outlook with a BPOS profile. In SharePoint online, go to the target list and choose Connect to Outlook from the Actions menu.

- The SharePoint list now exists in Outlook. Open the .pst and copy the items exported from the public folder to the new list.

- Other users need only connect to the SharePoint list. The magic of synchronization copies the content.

Another issue is mailbox permissions. If you have users who want access to another user’s mailbox, you have to set permissions on the target mailbox to allow this. These permissions are not migrated automatically, so you have to set them again on BPOS, and for that you have to use PowerShell. This article explains. The easiest route to a correctly configured PowerShell is to use the shortcut to the Migration Command Shell which is installed with the Microsoft Online migration tools.

A note on cost

BPOS costs $10 per user per month, with a minimum of 5 users. In the UK this is £6.72, so from £33.60 per month. Along with Exchange and SharePoint, you get Office Live Meeting (Web Conferencing) and Office Communications Online (Enterprise Live Messenger).

A typical SBS server lasts 3 to 5 years before it has to be replaced. Taking the shorter lifetime as an example, BPOS for the minimum five users will cost £1209.60 in subscription costs over the three-year lifetime of a physical server.
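For anyone who wants to rerun the sums with a different headcount or server lifetime, here is a minimal sketch of the arithmetic in Python. It uses only the UK price and five-user minimum quoted above; the function name is my own, and treating the minimum as "you are billed for at least five users" is an assumption rather than something taken from Microsoft's terms.

```python
from decimal import Decimal

# Figures quoted in this post (UK pricing).
PRICE_PER_USER_PER_MONTH = Decimal("6.72")
MINIMUM_USERS = 5


def subscription_cost(users: int, years: int) -> Decimal:
    """Total subscription cost in GBP over the given number of years.

    Assumption: a company with fewer than MINIMUM_USERS still pays
    for MINIMUM_USERS seats.
    """
    billed_users = max(users, MINIMUM_USERS)
    return PRICE_PER_USER_PER_MONTH * billed_users * 12 * years


# Compare the short and long ends of a typical server lifetime.
for years in (3, 5):
    print(f"{years} years, 5 users: GBP {subscription_cost(5, years):.2f}")
```

With five users this gives 1209.60 over three years and 2016.00 over five, subscription only.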

It seems obvious that if Exchange and SharePoint are all you need, and if you are happy with the implications of the cloud approach, BPOS works out cheaper. Of course the pricing will change, but Office 365 is actually coming out at a similar price for the equivalent features. Yes, you could buy a basic server with SBS for £1209, but that is just the start: installation, firewall, backup and maintenance all add to the cost.

That said, most businesses will still need some kind of on-premise server, even if it is no more than a simple NAS (Network Attached Storage) box. Another real-world problem is that there may be server-based applications which cannot easily be abandoned. If you find you have to run an on-premise server anyway, adding BPOS on top looks less attractive.

There is much more to say about cloud vs on-premise, but it is worth noting that it can be cost-effective.