I am at the Cloud Computing World Forum in London where one of the highlights was a keynote yesterday from Amazon CTO Werner Vogels. Amazon, oddly enough, does not have a stand here; yet the company dominates the IAAS (Infrastructure as a service) market and has moved beyond that into more PAAS (Platform as a service) type services.

Vogels said that the reason for Amazon’s innovation in web services was its low margin business model. This was why, he said, none of the established enterprise software companies had been able to innovate in the same way.

He added that Amazon did not try to lock its customers in:

If this doesn’t work for you, you should be able to work away. You should be in charge, not the enterprise software company.

Sounds good; but Amazon has its own web services API and if you build on it there is an element of lock-in. Or is there? I was intrigued by a remark made by Huawei’s Head of Enterprise R&D John Roese at a recent cloud computing seminar:

We think there is an imperative in the industry to settle on standardised interfaces. There should be no more of this rubbish where people think they can differentiate based on proprietary interfaces in the cloud. A lot of suppliers are not very interested in this because they lose the stickiness of the solution, but we will not see massive cloud adoption without that portability.

But what are these standardised interfaces? Roese said that Huawei uses Amazon APIs. For example, the Huawei Cloud Storage Engine:

The CSE boasts a high degree of openness. It supports S3-like interfaces and exposes the internal storage service enabler to 3rd party applications.

There is also Eucalyptus, open source software for private clouds that uses Amazon APIs. Is the Amazon web services API becoming a de-facto standard, and what does Amazon itself think about third parties adopting its API?

I asked Vogels, whose response was not encouraging for the likes of Huawei treating it as a standard. The question I put: is the adoption of Amazon’s API by third parties influencing his company in its maintenance and evolution of those APIs?

It is not influencing us. It is influencing them. Who is adopting our APIs? We licensed Eucalyptus. People ask us about standardisation. I’d rather focus on innovation. If others adopt our APIs, I don’t know, we rather focus on innovation.

The lack of interest in standardisation does undermine Vogels’ comments about the freedom of its customers to walk away, though lock-in is not so bad if your use of public cloud is primarily at the IAAS level (though you may be locked into the applications that run on it).

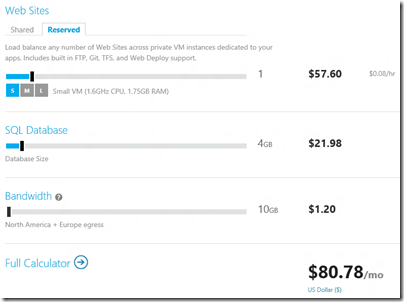

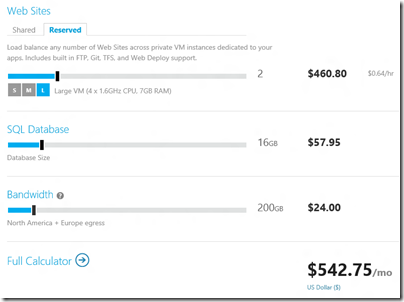

Another mitigating factor is that third parties can wrap cloud management APIs to make one cloud look like another, even if the underlying API is different. Flexiant, which offers cloud orchestration software, told me that it can do this successfully with, for example, Amazon’s API and that of Microsoft Azure. Perhaps, then, standardisation of cloud APIs matters less than it first appears.