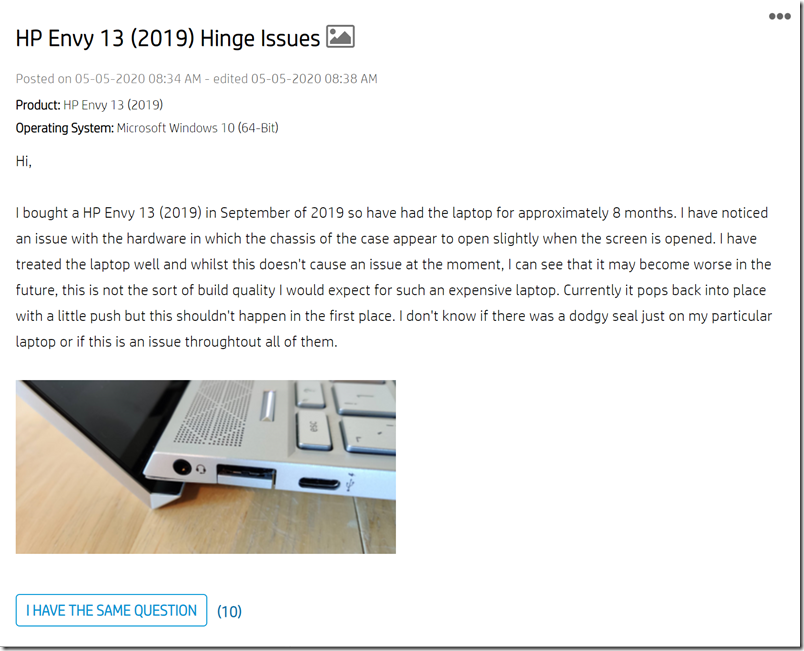

I love technology that endures, and one of my most treasured gadgets is a pair of Sennheiser HD600 headphones. Mine are not quite that old, but this is a model that was first released in 1997 and remains on sale; they sound fantastic, especially with a high-quality headphone amplifier, and I use them as a kind of reference for other headphones. The ear cushions and headband perished on mine and I bought the official spares at an unreasonable price because I so much wanted to keep them going.

Still on sale today: Sennheiser HD600

They are as good as ever; but it happens that this year I have reviewed a large number of headsets and earbuds, some of which I also like very much. First though, a few observations.

Passive headphones like my HD600s are in decline. I call them passive because they have no built-in electronics other than the speaker drivers. They can only work when wired to the output from an amplifier. Wires can be a nuisance; and as wireless options become cheaper, better and more abundant, wired is in decline (though it will never go away completely).

Once you go wireless, something else happens. The signal the headset receives over Bluetooth or Wi-Fi is a digital one. Packed into the receiving electronics is not just a pair of speaker drivers, but also a DAC (Digital-to-Analog Converter) and an amplifier. Further, the amplifier can be optimized for the drivers. The output can be equalized to compensate for any deficiencies in the drivers or the acoustics formed by their case and fit. This is an active configuration and has obvious advantages, getting the best possible performance from the driver and also enabling smart features like active noise cancellation (if you add microphones into the mix). It should not be surprising that the better wireless devices sound very good. I have also noticed that headsets which offer both wired and wireless modes often sound better wireless, despite the theoretical advantages of a wired connection.
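To make the equalization idea concrete, here is a minimal sketch in Python of how a designer might derive per-band correction gains from a measured frequency response. The function names, the boost and cut limits, and the measurement figures are all hypothetical and purely illustrative; a real headset does this in its DSP with proper filters, not with a lookup like this.

```python
def correction_gains_db(measured_db, target_db, max_boost_db=6.0, max_cut_db=12.0):
    """Return the gain (in dB) to apply in each band so that the measured
    response moves towards the target. Bands missing from the target are
    treated as 0 dB (flat). Gains are clamped to avoid excessive boost."""
    gains = {}
    for freq_hz, level_db in measured_db.items():
        wanted = target_db.get(freq_hz, 0.0) - level_db
        gains[freq_hz] = max(-max_cut_db, min(max_boost_db, wanted))
    return gains


def db_to_linear(gain_db):
    """Convert a gain in decibels to a linear amplitude multiplier."""
    return 10 ** (gain_db / 20)


# Hypothetical measurement: weak bass, slightly hot treble (dB relative to 1 kHz).
measured = {60: -4.0, 1000: 0.0, 8000: 3.0}

for freq_hz, gain_db in correction_gains_db(measured, target_db={}).items():
    print(f"{freq_hz} Hz: {gain_db:+.1f} dB (x{db_to_linear(gain_db):.2f})")
```

The clamp on boost reflects a common design choice: large boosts eat into amplifier headroom and can push a small driver into distortion, so it is usually safer to cut than to boost.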

Another significant development is that off-the-shelf chipsets for wireless audio have got better. The leader in this is Qualcomm, whose Bluetooth audio chipsets are packed with advanced features. SoCs like the QCC 3506 include adaptive active noise cancellation, 24-bit 96kHz audio, low power consumption, voice assistant support, fast pairing, built-in DSP (Digital Signal Processing), and of course programmability for custom features.

It is because of this that there are now numerous budget true wireless earbuds and headsets from brands you might not have heard of, with these exact features.

The importance of the fit

A few years ago I attended a press event at CES in Las Vegas. Shure was exhibiting there and I got to try a pair of its premium IEMs (In-ear monitors). Until then I had been convinced that earbuds could never be as good as headphones; but what I heard that day changed my mind. The audio amazed me, sounding full, rich, spacious, detailed and realistic. I thought for a moment about it and realised I should not be surprised. IEMs are designed to be fitted directly to your ears; why should they not be of the highest quality? The best ones, like those at CES, have multiple drivers.

There is something else though. Getting the best sound from IEMs requires getting an excellent fit, since they are generally designed to be sealed into your ears; the ones with expandable foam ear seals are perhaps the best for achieving this. If they are badly fitted then you will hear sound that is tinny or bass-light.

This means that it is worth taking extreme care with the selection of the right ear seals or ear sleeves, as they are sometimes called. Most earbuds come with a few sizes to accommodate different ear sizes. If the sound changes dramatically when you press the earbuds in slightly, they are not fitted right.

Something else regarding Shure, that I did not realise until recently, is that all its earbuds are designed to have a cable or (in the case of the wireless range) clip that fits behind your ears. The reason for this is that it makes it easier to get a good fit when the cable is not constantly pulling the IEMs out of position. Check out this video for details.

Earbuds that do not fall out

It is a common problem. You fit your earbuds and then go for a run or to the gym. With all that movement, one or both of the earbuds falls out. This can be a serious problem with wireless models. If you lose your earbud in the long grass or on a busy street, you might never find it again, or someone might trample on it.

There are a few solutions. The Shure approach makes it unlikely that the device will fall out. Another idea is to have a neckband that connects the two earbuds and hangs at the back of your head. I quite like this approach, since manufacturers can sneak some batteries into the neckband that give a longer play time than is typical with true wireless. It does not stop an earbud falling out, but it does mean you are less likely to lose it. Some people I’ve chatted to, though, feel it is the worst of both worlds: the battery and pairing issues of Bluetooth with the inconvenience or ugliness of wires. Up to you.

Airloop Snap 3-in-one

One brand, Airloop, claims to solve this by offering 3-in-one earbuds that can be used true wireless, or with a neckband, or with a lightweight “sports band” that has no batteries but does give a bit of security. The idea was good enough to raise hundreds of thousands in crowdfunding on Indiegogo and Kickstarter; they are nice devices but did not quite make my favourites list as I found the devices a little bulky for comfort, the firmware a little buggy, and the sound not quite what it should be at the price.

Don’t fret about the codec

One last remark before we get to my list of favourites. You will find a few variations when it comes to wireless standards and codecs. Headsets used for gaming generally use USB dongles for low latency. Other headsets generally use Bluetooth and support various codecs. The minimum today is SBC (low-complexity subband codec), which is the mandatory codec in A2DP (Advanced Audio Distribution Profile). Apple devices support AAC. Qualcomm’s aptX offers more efficient compression, a higher bitrate and lower latency than SBC. Sony has LDAC, which supports higher resolutions.
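For a rough sense of how much work these codecs do, the Python sketch below compares the raw bitrate of uncompressed stereo audio with the bitrate of each codec. The codec figures are commonly quoted maximums and should be treated as approximate assumptions; real-world rates depend on the device, the settings and the radio conditions. The function names are my own.

```python
def pcm_bitrate_kbps(bit_depth, sample_rate_hz, channels=2):
    """Bitrate of uncompressed PCM audio in kilobits per second."""
    return bit_depth * sample_rate_hz * channels / 1000


def compression_ratio(source_kbps, codec_kbps):
    """How many times smaller the codec stream is than the raw source."""
    return source_kbps / codec_kbps


# Approximate, commonly quoted maximum bitrates (assumptions, not measurements).
CODEC_KBPS = {"SBC": 328, "aptX": 352, "LDAC": 990}

cd_quality = pcm_bitrate_kbps(16, 44_100)   # 16-bit, 44.1kHz stereo
high_res = pcm_bitrate_kbps(24, 96_000)     # 24-bit, 96kHz stereo

for name, kbps in CODEC_KBPS.items():
    print(
        f"{name}: {kbps} kbps, "
        f"{compression_ratio(cd_quality, kbps):.1f}x smaller than CD-quality PCM, "
        f"{compression_ratio(high_res, kbps):.1f}x smaller than 24-bit 96kHz PCM"
    )
```

Every Bluetooth codec here discards a good deal of data relative to the uncompressed source, so the gaps between them are smaller than the headline numbers might suggest.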

In my experience though, the codec support is relatively unimportant to the sound quality. Yes, the better codecs like aptX or LDAC are superior to SBC; but compared to other factors like the number and quality of the drivers, the ease of getting a good fit (see above), and the quality of the electronics in other respects, the codec is less important. “As you may have noticed, it’s difficult to tell the difference between SBC and aptX by ear”, observes this article.

You can bypass these concerns by going wired; but when you consider the benefits of active amplification as well as the convenience of wireless, it is not a simple decision.

See part two (coming soon) for some of the headsets I have enjoyed in 2020.