Microsoft has announced an agreement to acquire GitHub for $7.5 billion (in Microsoft stock). Nat Friedman, formerly CEO of Xamarin, will become GitHub’s CEO, and GitHub will continue to run somewhat independently. A few comments.

Background: GitHub is a cloud-based source code repository based on Git, a distributed version control system created by Linus Torvalds. It is free to use for public, open source projects but charges a fee (from 7$ to $21 per user per month) for private repositories.

First, why? This one is easy. Microsoft is a big customer of GitHub. Microsoft used to have its own hosting service for open source software called CodePlex but abandoned it in favour of GitHub, formally closing CodePlex in March 2017:

Over the years, we’ve seen a lot of amazing options come and go but at this point, GitHub is the de facto place for open source sharing and most open source projects have migrated there. We migrated too.

said Brian Harry.

Microsoft also uses GitHub for its documentation, and this has turned out to be a big improvement on its old documentation sites.

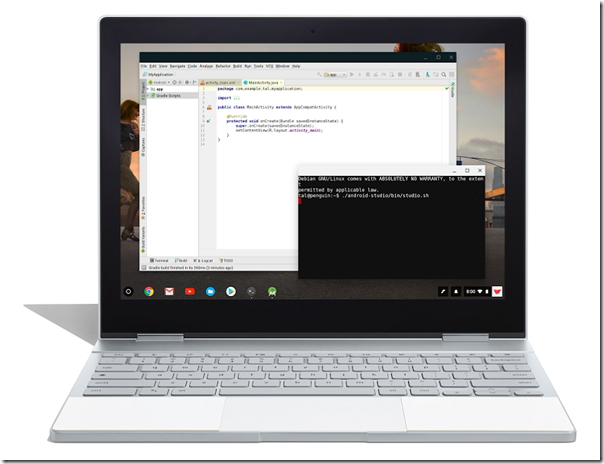

Note also that Microsoft has many important open source projects of its own, including much of its developer platform (.NET Core, ASP.NET Core and Entity Framework Core). Many of its projects are overseen by the .NET Foundation. Other notable open source, Github-hosted projects include Visual Studio Code, a programmer’s editor that has won many friends, and TypeScript, a typed superset of JavaScript that compiles to standard JavaScript code.

When big companies become highly dependent on the services of another company they may become anxious about it. What if the other company were taken over by a competitor? What if it were to run into trouble, or to change in ways that cause problems? Acquisition is an easy solution.

In the case of GitHub, there was reason to be anxious since it appears not to be profitable – unsurprising given the large number of free accounts.

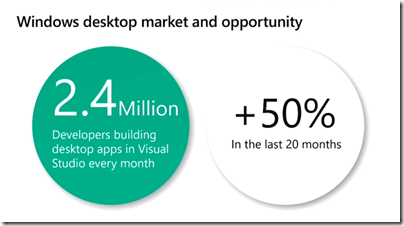

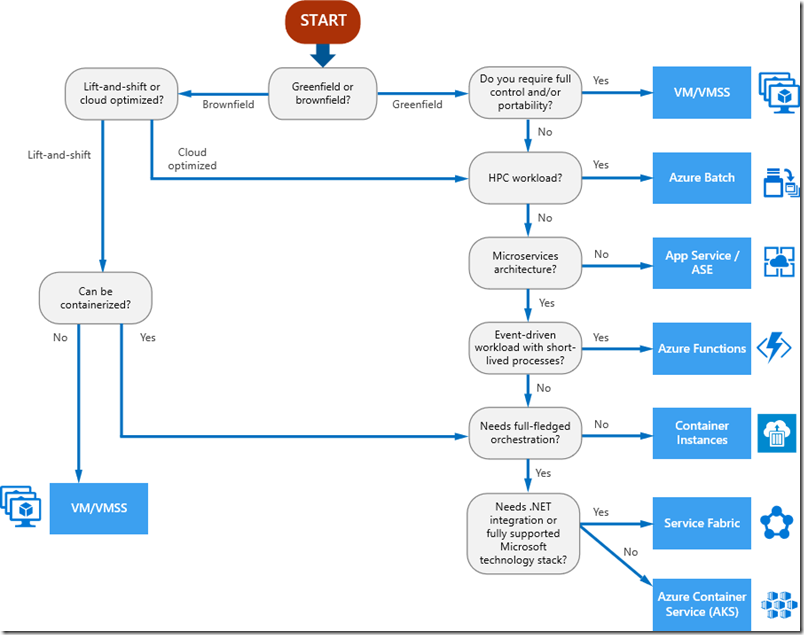

Second, Microsoft is always pitching to developers, trying to attract them to its platform and especially Azure services. It has a difficult task because it is the Windows company and the Windows platform overall is in decline, versus Linux on servers and Android/iOS on mobile. Therefore it is striving to become a cross-platform company, and with considerable success. I discuss this at some length in this piece. Note that there is a huge amount of Linux on Azure, including “more than 40%” of the virtual machines. More than 50%? Maybe.

If Microsoft can keep GitHub working as well as before, or even improve it, it will do a lot to win the confidence of developers who are currently outside the Microsoft platform ecosystem.

Will GitHub get worse?

The tricky question: under Microsoft, will GitHub get worse? The company’s track record with acquisitions is spotty, ranging from utter disasters (Nokia, Danger) to doubtful (Skype), to moderately successful so far (LinkedIn, Xamarin).

Under the current leadership, I doubt anything bad will happen to GitHub. I’d guess it will migrate some infrastructure to Azure (GitHub runs mainly from its own datacentres as I understand it) but there is no need to re-engineer the platform to run on Windows.

Some businesses will be uncomfortable hosting their valuable source code with Microsoft. That is understandable, in the same way that I hear of retailers reluctant to use Amazon Web Services (since it is a platform owned by a competitor), but it is a low risk. Others have long-standing mistrust of Microsoft and will want to migrate away from GitHub because of this.

Personally I think it is right to be wary of any giant global corporation, and dislike the huge and weakly regulated influence they have on our lives. I doubt that Microsoft is any worse than its peers in terms of trustworthiness but of course this is open to debate.

Another point: with this acquisition, free GitHub hosting for open source projects will be likely to continue. The press release says:

GitHub will retain its developer-first ethos and will operate independently to provide an open platform for all developers in all industries. Developers will continue to be able to use the programming languages, tools and operating systems of their choice for their projects — and will still be able to deploy their code to any operating system, any cloud and any device.

It is of course in Microsoft’s interests to make this work and the success of Visual Studio Code and TypeScript (which also come from the developer side of the company) shows that it can make cross-platform projects work. So I am optimistic that GitHub will be OK.

Update: I’ve noticed Sam Newman and Martin Fowler taking this view, a good sign from a people I respect and who are by no means from the usual Microsoft crowd.

Official announcements

Press release: https://news.microsoft.com/?p=406917

Chris Wanstrath’s Blog Post: https://blog.github.com/2018-06-04-github-microsoft/

Satya Nadella’s Blog Post: https://blogs.microsoft.com/?p=52553832