The Cure has released a 3-CD deluxe edition of Mixed Up, originally released as a double album or single CD in November 1990.

Parts of this release have already appeared on vinyl in two limited Record Store Day 2018 releases: Mixed Up and Torn Down.

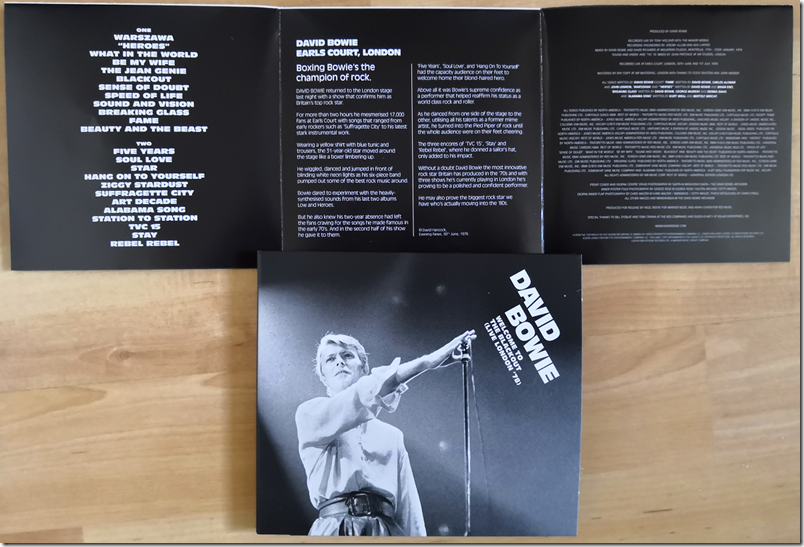

A few words about the CD. Why would anyone buy a CD in this streaming era? It is a waste of money if you just want to listen to the music, but you do get some nice packaging, weird squirly, blocky artwork, photos of the band and of memorabilia from the day, and a 32-page booklet with notes and credits. When physical media has disappeared completely I will miss these things, even though the wretched small size of CD artwork means you have to squint to read the credits.

The idea for Mixed Up came to Robert Smith when he was wondering what came next after the Prayer Tour, the 76 shows which followed the release of the epic album Disintegration in 1989. There were “increased tensions in the band”, according to a quote from Smith in the booklet. “I had to think of something else in the meantime.”

The original thought was to compile the extended mixes made for 12″ singles into an album, since some of these releases were out of print and sought-after by fans.

As he worked on the album, though, he moved beyond that initial concept. The early 12″ mixes of songs like Primary, Lovecats and In Between Days seemed to him inferior to the more recent releases, so he moved from compiling to reworking existing mixes of earlier songs. In fact, neither Lovecats nor Primary appeared at all on the original Mixed Up. In addition, two tracks on the original Mixed Up (A Forest and The Walk) were re-recorded from scratch as the multi-tracks were missing.

Here is how the original Mixed Up (November 1990) breaks down:

Lullaby (Extended Mix): same as 12″ Fiction FICX 29 (1989)

Close To Me (Closer Mix): Same as 12″ FICSX 36 (1990), different from earlier extended mix on 12″ Fiction FICSX 23 (1985)

Fascination Street (Extended Mix): same as 12″ Elektra 0-66704 (1989, US/Canada only)

The Walk (Everything Mix): new recording for Mixed Up.

Lovesong (Extended Mix): Same as 12″ Fiction FICSX 30 (1989)

A Forest (Tree Mix): New recording for Mixed Up.

Pictures Of You (Extended Dub Mix): same as Fiction Records FICXB 34 where it is called Strange Mix (1990), but different from FICXA 34

Hot Hot Hot!!! (Extended Mix): same as 12″ Fiction FICSX 28 (1988)

Why Can’t I Be You? (Extended Mix) (LP only; omitted from the CD for space reasons): Same as 12″ Fiction FICSX 25 (1987)

The Caterpillar (Flicker Mix): New extended mix for Mixed Up

In Between Days (Shiver Mix): New extended mix for Mixed Up; different from earlier 12″ Fiction FICSX 22 (1985)

Never Enough (Big Mix): New song recorded for Mixed Up

This made it a curious release: essential for Cure fans thanks to the new material it included, but poor as a collection of previously released extended mixes.

What about the new 3-CD set? It breaks down as follows:

CD1: Mixed Up 2018 remaster

This is simply a remaster of the 1990 release. The track listing is as above, but Why Can’t I Be You? is still omitted (it is on the next CD in the set).

CD2: Mixed Up Extras

This CD includes (at last) most of the early extended remixes which were not on the original Mixed Up. Tracks:

Let’s Go to Bed (Extended Mix 1982)

Just One Kiss (Extended Mix 1982)

Close to Me (Extended Mix 1985)

Boys Don’t Cry (New Voice Club Mix 1986)

Why Can’t I Be You? (Extended Mix 1987)

A Japanese Dream (12″ Remix 1987)

Pictures of You (Extended Version 1990)

Let’s Go To Bed (Milk Mix 1990)

Just Like Heaven (Dizzy Mix 1990)

Primary (Red Mix 1990)

The Lovecats (TC & Benny Mix 1990)

Inevitably, there are still a few tracks missing. These are Primary (Extended Mix 1981); The Lovecats (Extended Version 1983); and In Between Days (Extended Version 1985). The notes refer to a digital release though I am not sure where or whether they have been released. Smith says of these versions that Primary was “basically a 7″ instrumental cut into the 7″ single mix”, that Lovecats was not really a remix, but rather the original single mix before it was edited down, and that In Between Days was “extended by person or persons unknown” and nothing to do with him.

Of these the only one I care about is Lovecats; I would like to have the full version here.

CD3: Torn Down

This is where Smith lets himself go and makes new mixes of favourites from the Cure’s back catalogue. “Compared to most of the Mixed Up remixes, my versions tend to work with the existing song structure; they’re pretty much the same length and tempo as the original … I found myself happier working within those structural restraints,” he says in the notes. That said, he found elements in the songs that had previously been buried, including the actual sound of heavy rain at the start and end of A Night Like This, which he brought out in the new mix.

Three Imaginary Boys (Help Me Mix)

M (Attack Mix)

The Drowning Man (Bright Birds Mix)

A Strange Day (Drowning Waves Mix)

Just One Kiss (Remember Mix)

Shake Dog Shake (New Blood Mix)

A Night Like This (Hello Goodbye Mix)

Like Cockatoos (Lonely in the Rain Mix)

Plainsong (Edge of the World Mix)

Never Enough (Time to Kill Mix)

From the Edge of the Deep Green Sea (Love in Vain Mix)

Want (Time Mix)

The Last Day of Summer (31st August Mix)

Cut Here (If Only Mix)

Lost (Found Mix)

It’s Over (Whisper Mix)

So how are the new mixes? An interesting way to hear them is to play the original followed by the remix, easy to do if you rip your CDs to a computer or streaming system. You can hear some themes, such as a more techno feel to the new mixes, and that Smith’s vocals are more forward. Three Imaginary Boys, for example, gives you a new perspective on an early song, with the “Can you help me” vocal from the end moved to the beginning of the song, hence the name “Help Me Mix”.

Shake Dog Shake benefits from the extra clarity of a modern mix and sounds more sinister and colourful than the original.

It tends to be lesser-known songs that benefit most. It is difficult to re-approach a magnificent song like Plainsong without making it worse, and here, as expected, the remix does not improve on the original.

Perhaps, then, it is better not to listen to the new mixes alongside the originals but to enjoy Torn Down as a whole. Cure fans will enjoy it even though it is not in any sense ground-breaking.

The complete package

This collection gets a warm welcome from me. I have always enjoyed Mixed Up, and I am delighted now to get treats like the earlier extended mixes of Close To Me, Just One Kiss, and the other extended mix of Pictures of You, which to me are the definitive versions.

The sound quality is excellent, and kudos to mastering engineer Tim Young for showing some restraint in mastering so that these songs are not wrecked by excessively LOUD mastering.