Gartner has published a paper and Magic Quadrant on Mobile App Development Platforms (MDAPs), which you can read for free thanks to Progress, pleased to be named as a “Visionary”, and probably from other sources.

According to Gartner, an MDAP has three key characteristics:

- Cross-platform front-end development tools

- Back-end services that can be used by diverse clients, not just the vendor’s proprietary tools.

- Flexibility to support public and internal deployments

Five vendors ranked in the sought-after “Leaders” category. These are:

- Kony, which offers Kony Visualizer for building clients, Kony Fabric for back-end services, and Kony Nitro Engine, a kind of cross-platform runtime based on Apache Cordova .

- Mendix, which has visual development and modeling tools and multi-cloud, containerised deployment of back-end services

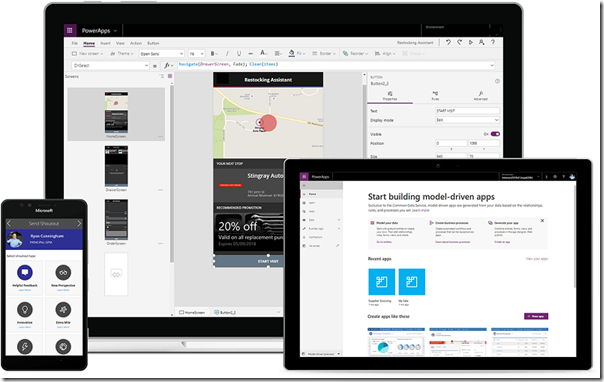

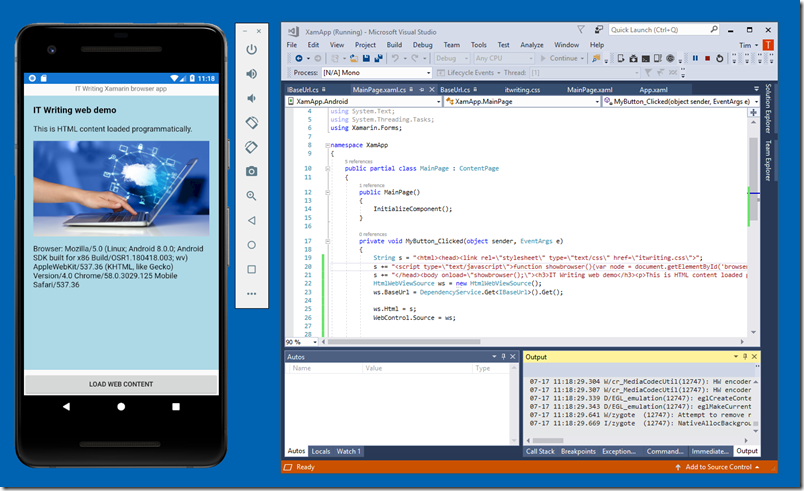

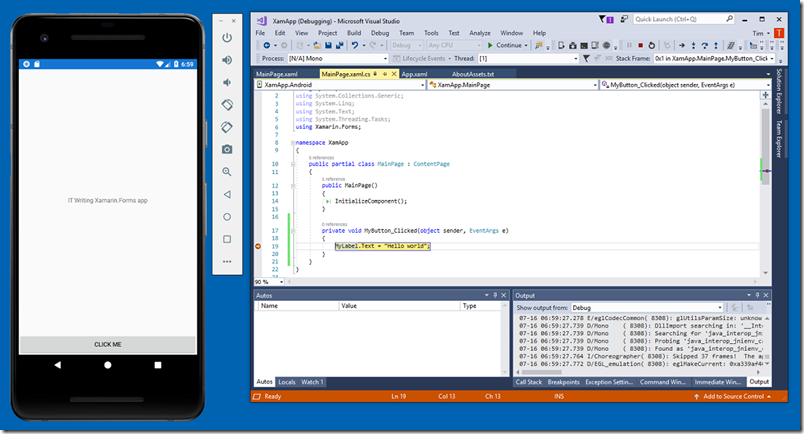

- Microsoft, which has Xamarin cross-platform development, Azure cloud services, and PowerApps for low-code development

- Oracle, which has Oracle Mobile Cloud Enterprise including JavaScript Extension Toolkit and deployment via Apache Cordova

- Outsystems, a low-code platform which has the Silk UI Framework and a visual modeling language, and hybrid deployment via Apache Cordova

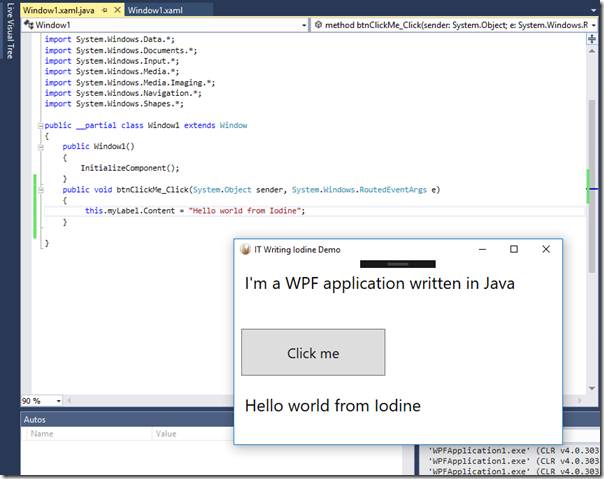

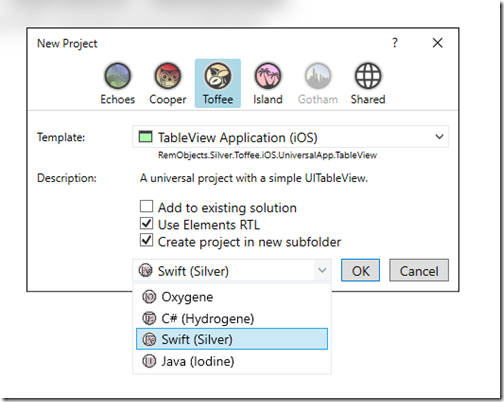

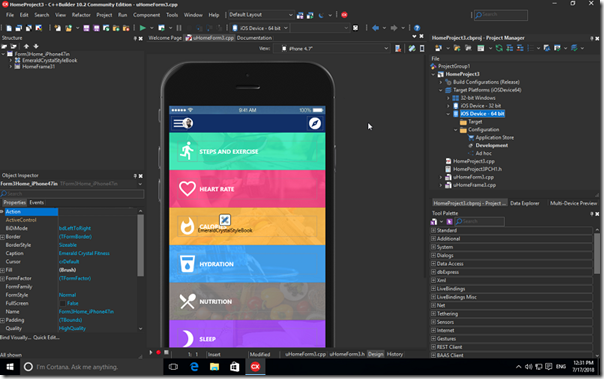

Of course there are plenty of other vendors covered in the report. Further, because this is about end-to-end platforms, some strong cross-platform development tools do not feature at all.

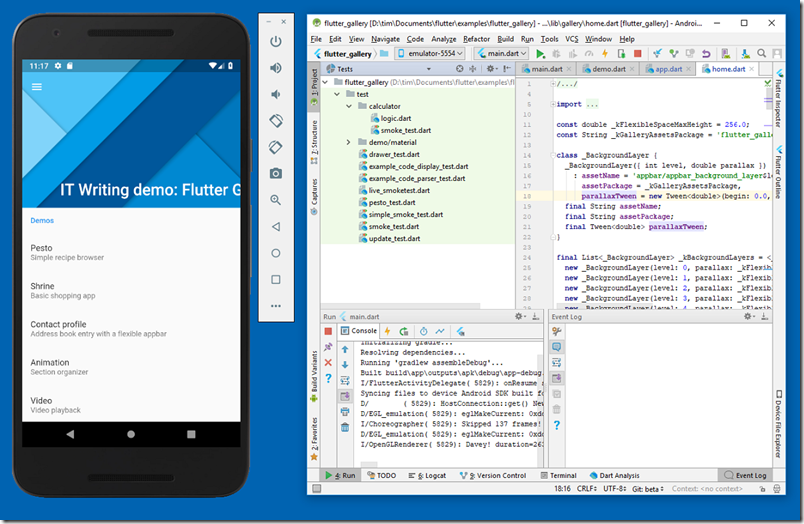

A few observations. One is the prominence of Apache Cordova in these platforms. Personally I have lost enthusiasm for Cordova, now that there are several other options (such as Xamarin or Flutter) for building native code apps, which I feel deliver a better user experience, other things being equal (which they never are).

With regard to Microsoft, Gartner notes the disconnect between PowerApps and Xamarin, different approaches to application development which have little in common other than that both can be used with Azure back-end services.

I found the report helpful for its insight into which MDAP vendors are successfully pitching their platform to enterprise customers. What it lacks is much sense of which platforms offer the best developer experience, or the best technical capability when it comes to solving those unexpected problems that inevitably crop up in the middle of your development effort and take a disproportionate amount of time and effort to solve.