A piece by Rand Fishkin tells me what I already knew: that Google has a de facto monopoly in search, and that organic search (meaning clicking on a result from a search engine that is not an ad) is in decline, especially on mobile.

According to Fishkin, using data from digital intelligence firm Jumpshot, Google properties handle 96.1% of all searches in the EU and 93.4% of all US searches. “Google properties” include Google, Google Images, YouTube, and Google Maps.

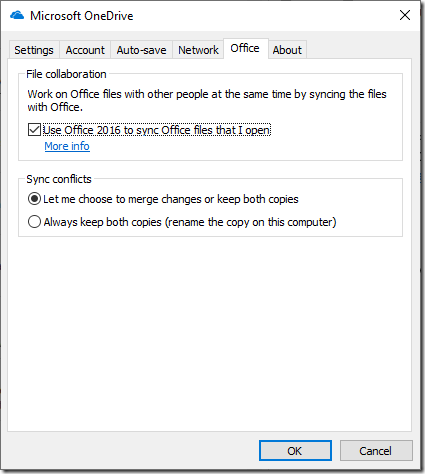

To the extent that this shows high satisfaction with Google’s service, this is a credit to the company. We should also look carefully, though, at the outcome of those searches. In the latest figures available (Jan-Sept 2018) they break down as follows (EU figures):

- Mobile: 36.7% organic, 8.8% paid, 54.4% no-click

- Desktop: 63.6% organic, 6.4% paid, 30% no-click

On mobile, the proportion of paid clicks has more than doubled since 2016. On the desktop, it has gone up by over 40%.
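One way to read these figures is to set the no-click searches aside and ask what share of the remaining clicks are paid. Here is a minimal Python sketch of that arithmetic, using only the EU percentages quoted above; the function name `paid_share_of_clicks` is just an illustrative label of mine, not anything from Fishkin or Jumpshot.

```python
# EU search outcomes, Jan-Sept 2018, as a percentage of all searches
# (the figures quoted in the list above).
outcomes = {
    "mobile": {"organic": 36.7, "paid": 8.8, "no_click": 54.4},
    "desktop": {"organic": 63.6, "paid": 6.4, "no_click": 30.0},
}


def paid_share_of_clicks(split):
    """Return paid clicks as a percentage of all clicks (organic + paid)."""
    clicks = split["organic"] + split["paid"]
    return 100 * split["paid"] / clicks


for device, split in outcomes.items():
    print(f"{device}: {paid_share_of_clicks(split):.1f}% of clicks are paid")
```

On these numbers, roughly 19% of mobile clicks and 9% of desktop clicks go to an ad, which is noticeably higher than the headline paid percentages because the no-click searches drop out of the denominator.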

A no-click search is one where the search engine delivers the result without any click-through to another site. Users like this in that it saves a tap and, more important, spares them the ads, log-in pleas, and navigation challenges that a third-party site may present.

There is a benefit to users therefore, but there are also costs. Because the user never leaves Google, there is no opportunity for a third-party site to build a relationship or even sell a click on one of its own ads. It also puts Google in control of information, which has huge political and commercial implications, irrespective of whether it is AI or Google’s own policies that determine what users see.

My guess is that the commercial reality is that organic search has declined even more than the figures suggest. Not all searches signal a buying intent. Those that do not are less valuable to advertisers, and therefore attract fewer paid ads. On the other hand, searches that do indicate a buying intent (“business insurance”, “IT support”, “flight to New York”) are highly valued and attract more paid-for advertising. So you can expect organic search to be more successful on searches that have less commercial value.

In the early days of the internet the idea that sites would have to pay to get visitors was not foreseen. Of course it is still possible to build traffic without paying a Google tax, via social media links or simply by hosting amazing content that users want to see in full detail, but it is increasingly challenging.

There must be some sort of economic law that says entities that can choose whether to give something away or to charge for it will eventually charge for it. We all end up paying, since whoever actually provides the goods or services that we want has to recoup the cost of winning our business, including a share to Google.

Around six years ago I wrote a piece called “Reflecting on Google’s power: a case for regulation?” Since then, the case for regulation has grown, but the prospect of it has diminished, since the international influence and lobbying power of the company have also grown.