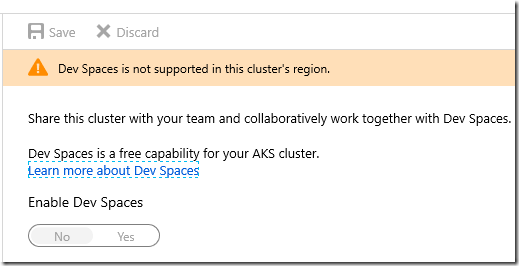

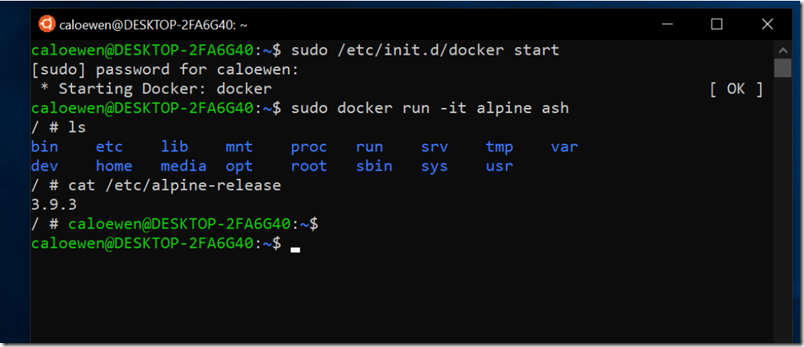

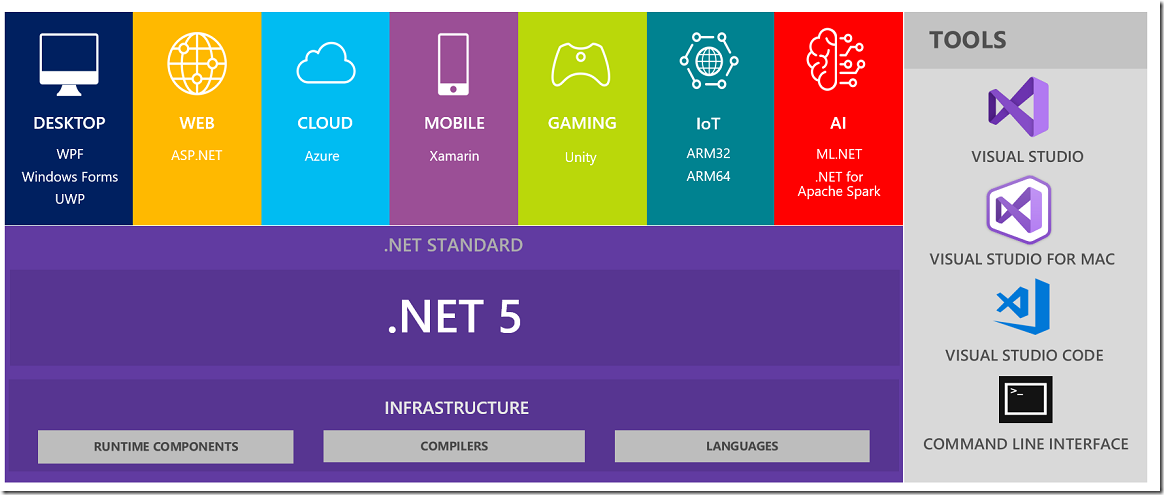

I like to try new technology when I can so following the Build conference I decided to deploy a Hello World app to Azure Kubernetes Service (AKS). I made a one-node AKS cluster in no time. I built a .NET Core app in Visual Studio deployed to a Linux Docker container, no problem. I pushed the container into ACR (Azure Container Registry) though it turns out I did not really need to do that. The tricky bit is getting the container deployed to the AKS cluster. There is a thing called Dev Spaces but it does not work in UK South:

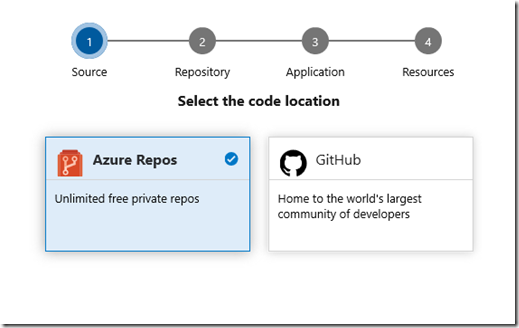

I was contemplating the necessity of building a Helm chart when I tried a thing called Deployment Center (Preview) in the Azure portal.

Click Add Project and it builds a pipeline in Azure DevOps for you.

It worked but the pipeline failed when building the container.

COPY failed: stat /var/lib/docker/tmp/docker-builder088029891/AKS-Example/AKS-Example.csproj: no such file or directory

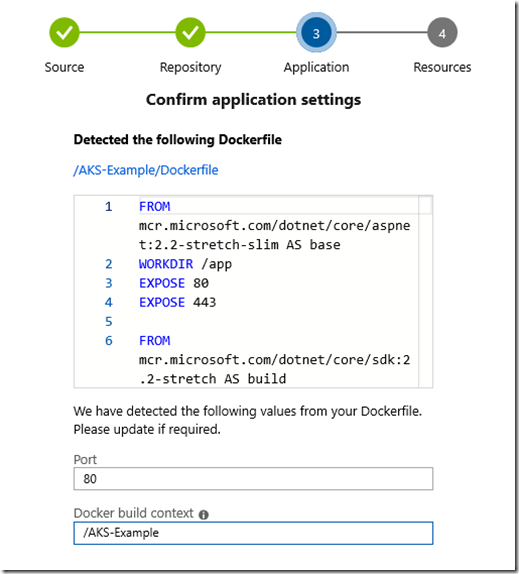

I spent some time puzzling over this error. You can view the exact logs of the build failure and I worked out that it is executing the Dockerfile steps:

COPY [“AKS-Example/AKS-Example.csproj”, “AKS-Example/”]

RUN dotnet restore “AKS-Example/AKS-Example.csproj”

COPY . .

This is failing because there the code in my repository is not nested like that. I eventually fixed it by amending the lines to:

COPY [“AKS-Example.csproj”, “AKS-Example/”]

RUN dotnet restore “AKS-Example/AKS-Example.csproj”COPY . AKS-Example/

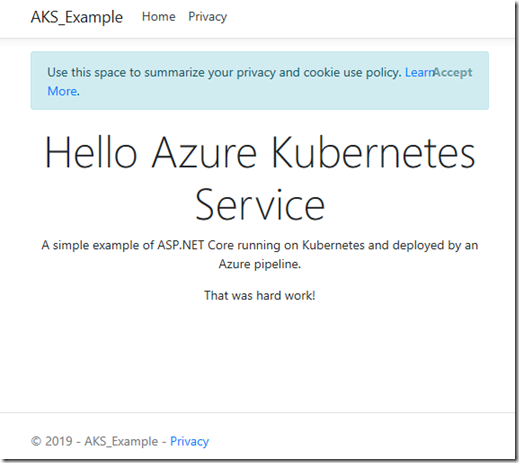

Now the pipeline completed and the container was deployed. I had to look at the Load Balancer Azure had generated for me to find the public IP number, but it worked.

Now the Dockerfile has a different path for local development than when deployed which is annoying. I found I could fix this by changing a step in the Deployment Center wizard:

Where it says /AKS-Example in Docker build context I replaced it with /. Now the build worked with the original Dockerfile.

I also noticed that the Deployment Center (Preview) used a sample YAML template which is linked directly from GitHub and referred confusingly to deploying sampleapp. It worked but felt a bit of a crude solution.

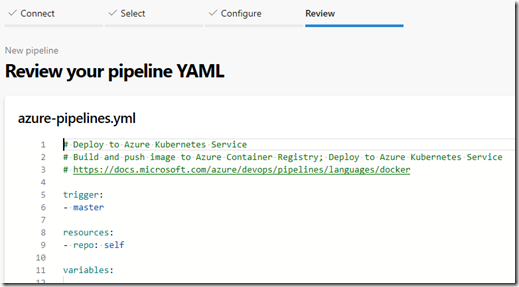

At this point I realised that I was not really using the latest and greatest, which is the pipeline wizard in Azure Devops. So I deleted everything and tried that.

This was great but I could not see an equivalent step to the Docker build context. And indeed, the new build failed with the same COPY failed error I got originally. Luckily I knew the workaround and was up and running in no time.

This different approach also has a slightly different shape than the Deployment Center pipeline, using Environments in Azure DevOps.

Currently therefore I have two questions:

- Why does Azure offer both the Deployment Center (Preview) and the multi-stage pipeline which seem to have overlapping functionality?

- What is the correct way to modify the generated YAML to fix the path issue?

I suppose it would also be good if the path problem were picked up by the wizard in the first place.