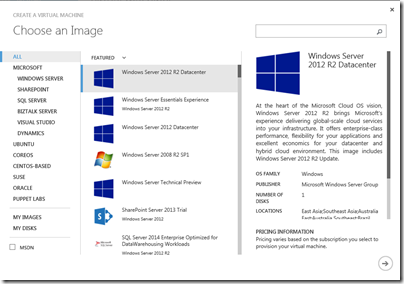

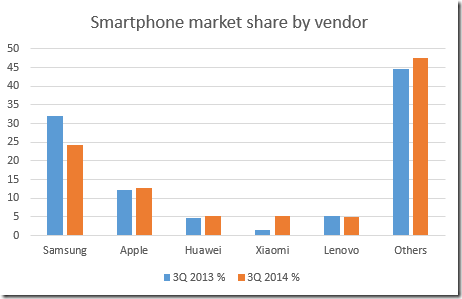

What happened in 2014? One thing I did not predict is that Samsung lost its momentum. Here are Gartner’s figures for global smartphone sales by vendor, for the third quarter of 2014:

Samsung is still huge, of course. But in 2013, Samsung seemed to be in such control of its premium brand that it could shape Android as it wished, rather than being merely an OEM for Google’s operating system. In the enterprise, Samsung KNOX held promise as a way to bring security and manageability to Android, but only in Samsung’s flavour. Today, that seems less likely. Market share is declining, and much of KNOX has been rolled into Android Lollipop. What is going wrong? The difficulty for Samsung is how to differentiate its products sufficiently, to avoid bleeding market share to keenly priced competition from vendors such as Xiaomi and Huawei. This is difficult if you do not control the operating system.

What of the overall mobile OS wars? 2014 brought few surprises: the Apple/Android duopoly continued, BlackBerry further diminished its share, and Windows Phone struggled on, though it was not looking good for Microsoft’s OS as 2014 closed; the Nokia acquisition may have been fumbled.

All change at Microsoft

That brings me to Microsoft, a company I watch closely. 2014 saw Satya Nadella appointed as CEO and several strategic changes, though the extent to which Nadella introduced those changes is uncertain. What changes?

Office is going truly cross-platform, with first-class support for iOS and Android. I covered this recently on the Register; the summary is that there will be mobile versions of Office for iOS, Android and Windows (this last a Store app) with similar features, and that more and more of the functionality of desktop Office will turn up in the mobile versions. I learned from my interview with Technical Product Manager Kaberi Chowdhury that ODF (Open Document) support is planned, as is some level of programmability.

The plans for Office are a clue to the company’s wider strategy, which is focused on cloud and server. Key products include Office 365, Windows Azure, Active Directory (and Azure Active Directory), SQL Server, SharePoint, and System Center as a management tool for hybrid cloud.

The Windows client strategy is to bring back users who disliked Windows 8 with a renewed focus on the desktop in the forthcoming Windows 10, while retaining the Store app model for apps that are secure, touch-friendly, and easily deployed. It is still not clear what Windows 10 phones and tablets will look like, but we can expect convergence; no more Windows RT, but perhaps tablets running Windows Phone OS that are in effect the next generation of Windows RT without a desktop personality.

The company will also hedge its bets with full app support for Office and its cloud services on iOS and Android, and in doing so will make its Windows mobile offerings less compelling.

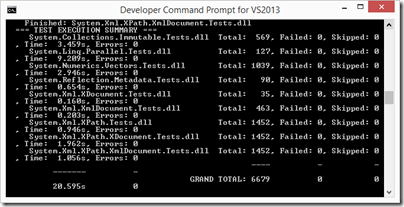

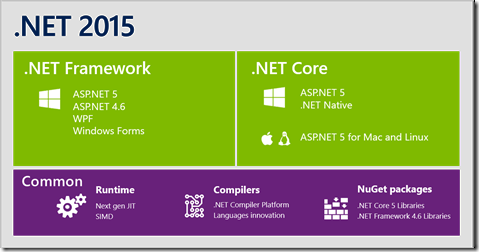

Microsoft’s developer tools are changing in line with this strategy. The next generation of .NET is open source and cross-platform on the server side, for Windows, Mac and Linux. Xamarin plugs the gap for .NET on iOS and Android, while Microsoft is also adding native support (not .NET based) for cross-platform mobile in the next Visual Studio.

These are big changes to the developer stack, and Microsoft is forking .NET between the continuing Windows-only .NET Framework, and the new cross-platform .NET Core. Developers have many questions about this; see this interview on the Register for what I could glean about the current plans. Watch out for the Build conference at the end of April when the company will attempt to put it all together into a coherent whole for developers targeting either Windows 10, or cloud apps, or cloud services with cross-platform mobile clients.

This entire strategy is a logical progression from the company’s failure in mobile. Can it now succeed with client apps running on platforms controlled by its competitors? Alternatively, is there hope that Windows 10 can keep businesses hooked on Windows clients? Maybe 2015 will bring some answers, though with Windows 10 not expected until towards the end of the year there will be a long wait while iOS, Android and even Chrome OS (the operating system of Chromebook) continue to build.

A side effect is that C# now has a better chance of building a cross-platform user base, rather than being a Windows language. This has already happened in game development, thanks to the use of Mono and C# in the popular Unity game engine. Could it also happen with ASP.NET, deployed to Linux servers, now that this will be officially supported? Or is there little room for it alongside Java, PHP, Ruby, Node.js and the rest?

The puzzle with Microsoft is that there is still too much mediocrity and complacency that damages the company’s offerings. How can it expect to succeed in the crowded wearable market with a band that is uncomfortable to wear? There is still an attitude in some parts of the company that the world will be happy to put up with problems that might be fixed in a future version after some long interval. Then again, the Azure team is doing great things and Windows Server continues to impress. Win or lose, there will be plenty of Microsoft news this year.

A theme for 2015: cloud optimization

Late last year I attended Amazon’s re:Invent conference in Las Vegas; I wrote this up here. The key announcement for me was Amazon Aurora, a MySQL clone, not so much because of its merits as a cloud database server, but more because it represents a new breed of applications that are designed for the cloud. If you design database storage with the knowledge that it will only ever run on a huge cloud-scale infrastructure, you can make optimizations that cannot be replicated on smaller systems. I tried to summarize what this means in another Register piece here. The fact that this type of technology can be rented by any of us at commodity prices increases the advantage of public cloud, despite reservations that many still have concerning security and control. It also poses a challenge for companies like Oracle and Microsoft whose technology is designed for on-premises as well as cloud deployment; they cannot achieve the same advantage unless they fork their products, creating cloud variants that use different architecture.

The Sony hack

The cyber invasion of Sony Pictures in late November was not just another hack; it was a comprehensive takedown in which (as far as I can tell) the company’s IT systems were entirely compromised and significantly damaged.

According to this report:

Mountains of documents had been stolen, internal data centers had been wiped clean, and 75 percent of the servers had been destroyed.

Most IT admins worry about disaster recovery (what to do after catastrophic system failure such as a fire in your data center) as well as about security (what to do if hackers gain access to sensitive information). In this case, both seemed to happen simultaneously. Further, as producing movies is in effect a digital business, the business suffered loss of some of its actual products, such as the unreleased “Annie”.

The incident is fascinating in itself, especially as we do not know the identity of the hackers or their purpose, but what interests me more are the implications.

Specifically, how many companies are equally at risk? It seems clear that Sony’s security was towards the weak end of the scale, but there is plenty of weak security out there, especially but not exclusively in smaller businesses.

With the outcome of the Sony hack so spectacular, it is likely that there will be similar efforts in 2015, as well as many businesses looking nervously at their own practices and wondering what they can do to protect themselves.

Cloud may be part of the answer, though even if the cloud provider does security right, that is no guarantee that its customers do the same.

Looking back on looking back

Here is what I wrote a year or so ago: Reflecting on 2013- the year of not the PC, no privacy, and the Internet of Things. Most of it still applies. I have not achieved any of the three goals I set for myself, though. Maybe this year…